What is PoplarML?

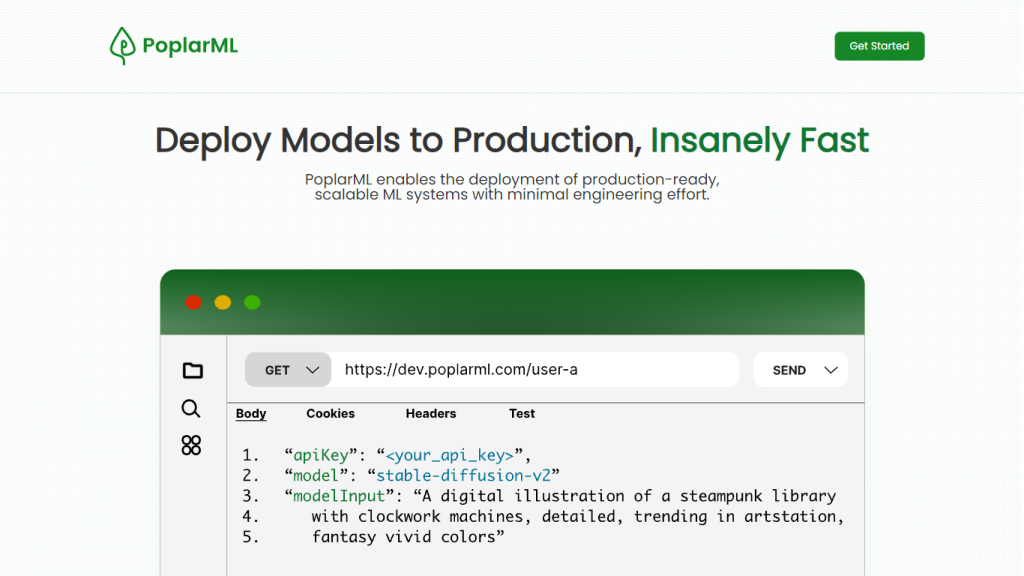

PoplarML is a new AI tool for deploying production-ready, scalable machine learning (ML) systems quickly and with minimal engineering effort. It offers one-click deployment and real-time model inference through REST API endpoints. PoplarML is framework agnostic and works with many ML frameworks, such as TensorFlow, PyTorch, and JAX.

PoplarML Key Features & Benefits

Production-Ready Deployment: PoplarML deploys machine learning models in a form intended for production use.

Real-Time Inference: PoplarML serves real-time inference through REST API endpoints, returning results immediately.

Framework Agnostic: PoplarML can deploy models built with most ML frameworks, including TensorFlow, PyTorch, and JAX, so deployment does not depend on which of these frameworks a model was built with.

Scalability: Deployed ML systems scale as demand increases.

One-Click Deployment: Users can deploy their models in a single click, reducing the time and effort needed for setup.

Data Preprocessing: PoplarML streamlines data preprocessing with techniques such as data cleaning, feature engineering, and normalization.

Model Selection and Training: The platform offers a large library of ML algorithms and frameworks for users to choose from.

Model Evaluation and Interpretation: Users can evaluate their models on user-defined metrics and visualize the outputs with interactive charts and graphs.

Seamless Integration: PoplarML integrates with popular programming languages such as Python and R, and with leading ML frameworks such as TensorFlow and PyTorch.
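The Model Evaluation and Interpretation feature mentions scoring a model on user-defined metrics. As a rough illustration of that idea only, and not PoplarML's own API, the sketch below treats each metric as a plain Python function and applies a dictionary of them to a set of predictions. The function names `accuracy`, `precision`, and `evaluate` are hypothetical and chosen for this example.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision(y_true, y_pred, positive=1):
    """Of the items predicted positive, the fraction that truly are positive."""
    truths_for_predicted = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not truths_for_predicted:
        return 0.0
    hits = sum(t == positive for t in truths_for_predicted)
    return hits / len(truths_for_predicted)


def evaluate(y_true, y_pred, metrics):
    """Apply each named metric function and collect the scores."""
    return {name: fn(y_true, y_pred) for name, fn in metrics.items()}


# Toy labels and predictions, made up for this example.
scores = evaluate(
    y_true=[1, 0, 1, 1],
    y_pred=[1, 0, 0, 1],
    metrics={"accuracy": accuracy, "precision": precision},
)
```

Keeping metrics as ordinary functions means a new one can be added by passing another entry in the dictionary, without changing `evaluate`.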

Use Cases and Applications of PoplarML

PoplarML's flexibility suits it to many use cases, including:

- Deploying ML Models in Production: Easily deploy ML models to production.

- Scaling ML Systems: Scale ML systems up to handle extensive datasets and time-consuming tasks.

- Real-Time Inference Invocation: Invoke inference in real time through REST API endpoints.

Any industry or sector involved in data-driven decision-making can use the service.

How to Use PoplarML

Using PoplarML is straightforward:

- Model Upload: Upload your machine learning model to the PoplarML platform.

- Deployment Settings: Configure deployment settings, such as API endpoints and scaling.

- One-Click Deployment: Click the deploy button to start the deployment process.

- Real-Time Inference: Run live inference through the pre-built REST API endpoints.

As a best practice, make sure your dataset is properly preprocessed and that the model you choose suits the task at hand. The user interface is easy to understand, so newcomers to the field can navigate and operate the platform without difficulty.
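To make the real-time inference step concrete, here is a minimal sketch of calling a deployed model over REST from Python, using only the standard library. The endpoint URL, the bearer-token `Authorization` header, and the `{"inputs": ...}` payload shape are all assumptions made for illustration. PoplarML's actual request format is not described in this article, so consult the platform's own documentation before relying on any of these names.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the endpoint and key for your deployment.
ENDPOINT_URL = "https://example.invalid/v1/models/my-model/infer"
API_KEY = "YOUR_API_KEY"


def build_inference_request(inputs, url=ENDPOINT_URL, api_key=API_KEY):
    """Build a JSON POST request for a deployed model (assumed payload shape)."""
    body = json.dumps({"inputs": inputs}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def invoke(inputs, timeout=10.0):
    """Send the request and return the decoded JSON response."""
    request = build_inference_request(inputs)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

Separating request construction from sending keeps the payload easy to inspect and test without touching the network. A call such as `invoke([[5.1, 3.5, 1.4, 0.2]])` would only succeed against a real endpoint.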

How PoplarML Works

PoplarML aims to deliver a complete solution for ML-related tasks. Several preprocessing techniques are applied to help keep the input data free of anomalies. The platform supports a large library of ML algorithms, from which users can choose, train, and fine-tune until they arrive at the best model. The typical workflow runs through five stages: data preprocessing, model selection, training, evaluation, and deployment.
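The data preprocessing stage can be illustrated with a small, generic example. The sketch below is not PoplarML's implementation. It simply shows two of the techniques named in this article, data cleaning (dropping rows with missing values) and min-max normalization, in plain Python. The helper names `clean_rows` and `min_max_normalize` are hypothetical.

```python
import math


def clean_rows(rows):
    """Drop rows that contain a missing (None) or NaN value."""
    return [
        row for row in rows
        if all(v is not None and not math.isnan(v) for v in row)
    ]


def min_max_normalize(rows):
    """Rescale each column to the range [0, 1]; constant columns map to 0.0."""
    if not rows:
        return []
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [
            (v - lo) / (hi - lo) if hi != lo else 0.0
            for v, lo, hi in zip(row, lows, highs)
        ]
        for row in rows
    ]


# Toy data with one None and one NaN, made up for this example.
raw = [[1.0, 10.0], [None, 20.0], [3.0, 30.0], [5.0, float("nan")]]
features = min_max_normalize(clean_rows(raw))
```

Cleaning runs before normalization so that missing values cannot distort the per-column minimum and maximum.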

PoplarML: Pros and Cons

Advantages:

- Ease of deployment of production-ready ML systems

- Real-time inference

- Support for several ML frameworks

- Scalability as demand grows

- Good user interface and simple documentation

Possible disadvantages:

- Documentation is still under development, which may be a barrier to entry for new users.

- Pricing: Little information is available beyond the fact that it follows a freemium model.

User feedback generally highlights the tool's ease of use and how quickly it can be up and running, though some users point to the lack of detailed documentation.

Conclusion

PoplarML is a powerful tool for deploying scalable, production-ready ML systems. Its ease of use, real-time inference, and multi-framework support make it useful for data scientists, ML engineers, and DevOps teams alike. Despite drawbacks such as its incomplete documentation, the benefits outweigh the platform's limitations. As PoplarML evolves, it is likely to introduce more features and improvements, which could strengthen its position in the AI/ML deployment space.

PoplarML FAQs

What is PoplarML?

PoplarML is an AI tool that makes it easy to deploy production-ready, scalable ML systems with minimal engineering effort.

Is PoplarML framework agnostic?

Yes, PoplarML supports models built with multiple frameworks, such as TensorFlow, PyTorch, and JAX.

What key benefits does PoplarML provide?

It offers easy deployment, real-time inference, scalability, and a user-friendly interface.

How do I deploy a model using PoplarML?

Deploying a model is as simple as uploading it to the platform, configuring the settings, and clicking deploy.

Which industries can benefit from PoplarML?

PoplarML can help industries such as healthcare, finance, and retail with their data-driven operations.

How much does PoplarML cost?

PoplarML uses a freemium pricing model: the basic features are free, while more advanced capabilities are priced according to the chosen plan.