What is XLNet?

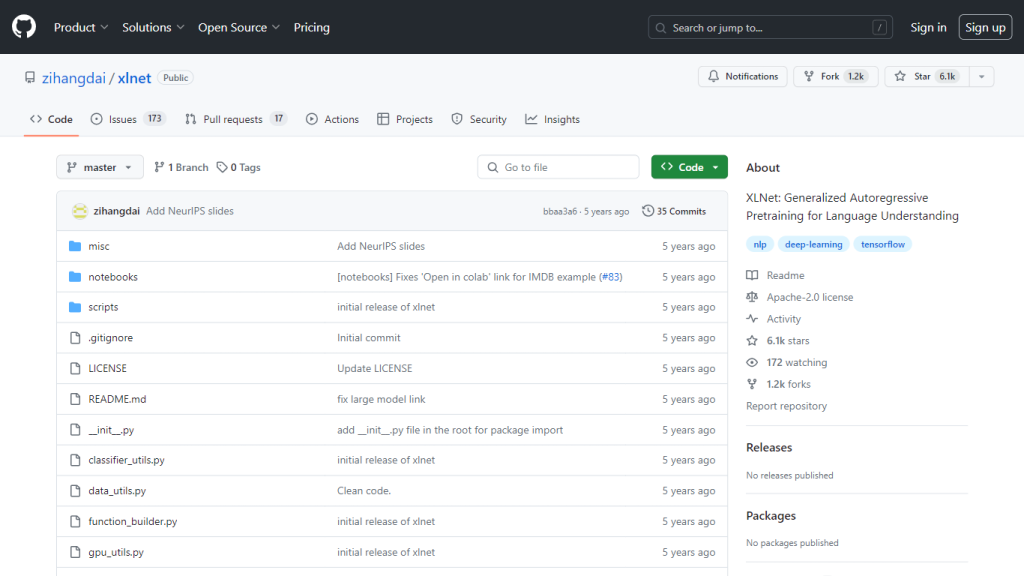

XLNet is an unsupervised pretraining method for natural language developed by Zhilin Yang, Zihang Dai, and their colleagues. Their paper presents a generalized autoregressive pretraining method that, at the time of publication, set new state-of-the-art results across a wide range of language understanding tasks. Proposed as a successor to BERT, XLNet integrates ideas from Transformer-XL, an architecture that is well suited to long-range dependencies in text. A GitHub repository maintained by Zihang Dai hosts the model and its supporting code, so researchers and AI practitioners can use it and contribute to it.

Key Points of XLNet & Its Benefits

Generalized Autoregressive Pretraining: This method improves the quality of unsupervised language representation learning.

Transformer-XL Backbone: It builds on the Transformer-XL architecture, which performs well on long-context tasks.

State-of-the-Art Results: At the time of publication, it achieved the best reported performance on multiple language understanding benchmarks.

Application in Diverse Fields: The model works for a wide range of tasks, from question answering to sentiment analysis.

Open-Source GitHub Repository: The XLNet GitHub repository is open to public contribution, so the code can be maintained and improved over time.

These are a few of the major points about XLNet. It not only outperforms many existing models, including BERT, on many benchmarks, but is also versatile in application and supported by a developer community that continues to improve it.

Applications of XLNet

XLNet can be applied in a wide range of contexts, including the following:

- Question Answering: XLNet's language understanding lets it locate the correct answer within a given text.

- Sentiment Analysis: Because XLNet handles long-range dependencies, it can identify sentiment in complex text.

Industries that can benefit from XLNet therefore include customer service, where accurate sentiment analysis can improve customer interactions, and information retrieval, where question answering systems are essential. According to the XLNet paper, XLNet outperforms BERT on 20 NLP tasks and achieves state-of-the-art results on 18 tasks.

How to Use XLNet

You can get started with XLNet by following these steps:

- Go to the XLNet repository, maintained by Zihang Dai, on GitHub.

- Download the model and supporting code from the repository.

- Follow the setup documentation to get XLNet running.

Two practices help here: get to know the Transformer-XL architecture well enough to take full advantage of it, and follow the community for updates and improvements.

How XLNet Works

XLNet is based on a generalized autoregressive pretraining method. Its architecture builds on Transformer-XL, which is designed to handle long-range dependencies in text. This helps XLNet perform well on most language understanding tasks. Typically, the model is pretrained on large datasets and then fine-tuned on specific tasks, such as question answering or sentiment analysis.
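One way to picture generalized autoregressive pretraining is the permutation language modeling objective described in the XLNet paper: the model samples an order in which to predict the tokens, and each token is predicted from the tokens that come earlier in that order, wherever they sit in the sentence. The sketch below only builds the resulting visibility mask. It is a simplified illustration under stated assumptions, not the code from the repository: `permutation_mask`, its arguments, and the uniform sampling of the order are hypothetical choices made for this example.

```python
import random


def permutation_mask(seq_len, order=None, seed=None):
    """Build a visibility mask for one factorization order.

    Returns (order, mask), where mask[i][j] is True when the prediction
    for position i may use the token at position j.
    """
    if order is None:
        # Assumption for this sketch: sample the order uniformly at random.
        order = list(range(seq_len))
        random.Random(seed).shuffle(order)
    if sorted(order) != list(range(seq_len)):
        raise ValueError("order must be a permutation of range(seq_len)")

    # rank[pos] is the step at which position `pos` is predicted.
    rank = [0] * seq_len
    for step, pos in enumerate(order):
        rank[pos] = step

    # Position i sees position j only if j comes earlier in the order.
    mask = [[rank[j] < rank[i] for j in range(seq_len)] for i in range(seq_len)]
    return order, mask


if __name__ == "__main__":
    tokens = ["New", "York", "is", "a", "city"]
    order, mask = permutation_mask(len(tokens), order=[2, 4, 0, 3, 1])
    for i, tok in enumerate(tokens):
        visible = [tokens[j] for j in range(len(tokens)) if mask[i][j]]
        print(f"{tok!r} is predicted from {visible}")
```

With the order `[2, 4, 0, 3, 1]`, "York" is predicted last and may use all four other words, including those to its right, while "is" is predicted first and sees nothing. Averaged over many sampled orders, every position gets context from both directions while each individual prediction stays autoregressive.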

Pros and Cons of XLNet

The advantages of XLNet include:

- It outperforms previous models, including BERT, on many benchmarks.

- It handles long-range dependencies thanks to the architecture inherited from Transformer-XL.

- An active community contributes updates to the GitHub repository.

The main drawback is model complexity: XLNet needs a lot of computational resources for training and fine-tuning. Most user feedback praises XLNet for its performance but notes that robust hardware is needed to make full use of it.
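To give the hardware point a rough scale, the back-of-the-envelope sketch below estimates the memory taken by model parameters alone. Every number in it is an assumption rather than a figure from the source: the parameter count of about 340 million is an approximate size often quoted for the large checkpoint, values are taken to be 32-bit floats, and fine-tuning is assumed to use an Adam-style optimizer that keeps gradients plus two moment estimates per weight. Activations, which usually dominate at long sequence lengths, are left out.

```python
def estimate_memory_gb(num_params, bytes_per_value=4, copies=1):
    """Rough memory estimate in gigabytes (1 GB = 1e9 bytes).

    `copies` is how many parameter-sized tensors are kept in memory.
    """
    return num_params * bytes_per_value * copies / 1e9


# Assumed, approximate parameter count for the large checkpoint.
LARGE_PARAMS = 340_000_000

# Inference: only the weights themselves.
weights_only_gb = estimate_memory_gb(LARGE_PARAMS)

# Fine-tuning with an Adam-style optimizer (assumption):
# weights + gradients + two moment estimates = 4 copies.
fine_tuning_gb = estimate_memory_gb(LARGE_PARAMS, copies=4)

print(f"weights only: {weights_only_gb:.2f} GB")
print(f"fine-tuning (no activations): {fine_tuning_gb:.2f} GB")
```

Under these assumptions the weights take roughly 1.4 GB and fine-tuning state roughly 5.4 GB before any activations are stored, which is consistent with feedback that fine-tuning calls for a capable GPU.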

Conclusion about XLNet

In short, XLNet belongs to a newer generation of language models that significantly advance the state of the art in unsupervised language representation learning. What sets it apart is that it adopts the Transformer-XL architecture, which natively handles long-range dependencies in text. It applies to a broad range of tasks and delivers state-of-the-art results on many benchmarks, which makes it a strong tool for AI developers. Future work and community contributions are likely to build on these promising initial results.

XLNet FAQs

- What is XLNet?

  XLNet is an unsupervised, generalized autoregressive method for pretraining Transformer language models that learns bidirectional context.

- Which technology has been adopted in the architecture of XLNet?

  It builds on the Transformer-XL architecture, which makes it effective at tasks requiring long-range context.

- Where can I get technical details regarding XLNet?

  Technical details are given in the paper ‘XLNet: Generalized Autoregressive Pretraining for Language Understanding’ by Zhilin Yang, Zihang Dai, et al.

- Is there an XLNet GitHub repository?

  Yes, Zihang Dai's repository contains the code needed to work with and contribute to XLNet.

- How does XLNet's performance compare to BERT's?

  According to the XLNet paper, XLNet outperforms BERT on 20 tasks and achieved state-of-the-art results on 18 tasks at the time of publication.