What is SantaCoder?

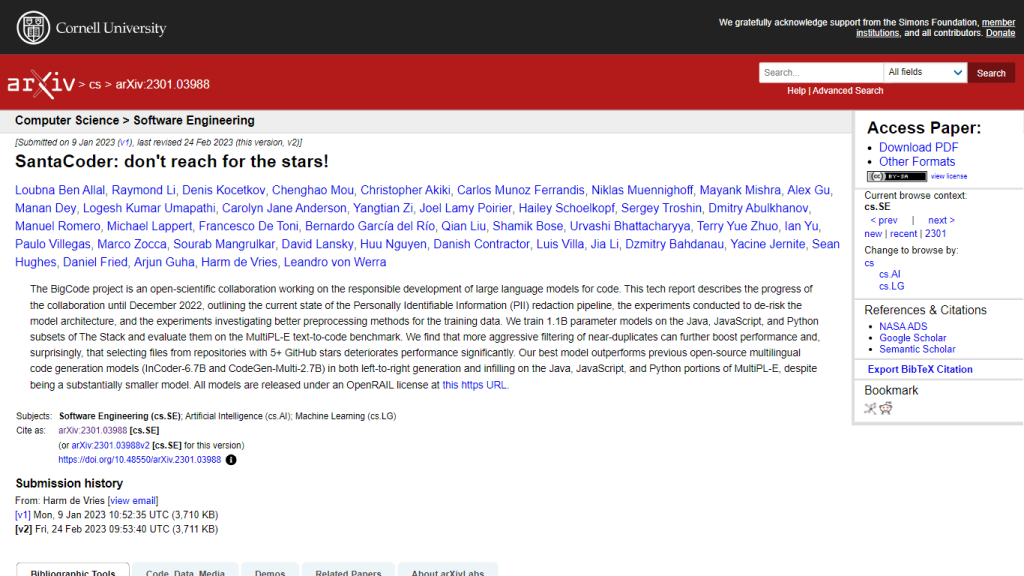

SantaCoder is a project described in the technical report “SantaCoder: don’t reach for the stars!”, published on arXiv as [2301.03988]. It is part of the BigCode project, and the report lists 41 authors. BigCode's primary objective is the responsible development of large language models for code. The report covers the collaboration's progress up to December 2022, including the pipeline for redacting personally identifiable information (PII), experiments to de-risk the model architecture, and experiments on better preprocessing methods for the training data.

Key Features & Benefits of SantaCoder

Detailed Feature List

- Performance Optimization: Aggressive filtering of near-duplicate data enhances model performance.

- Surprising Insight: Restricting the training data to files from repositories with 5 or more GitHub stars significantly hurts performance.

- Benchmark Achievements: Despite being much smaller, the best model outperforms InCoder-6.7B and CodeGen-Multi-2.7B on the Java, JavaScript, and Python portions of the MultiPL-E text-to-code benchmark.

- Inclusive Collaboration: The work of 41 authors advancing AI for code.

- Open Science: All models are released under the OpenRAIL license, so anyone can access and study them.

Benefits of Using SantaCoder

- Improved model performance from better preprocessing of the training data, such as near-duplicate filtering.

- Higher-quality code generation from a model architecture refined through dedicated experiments.

- Open access to models encourages community involvement and further research.

Unique Selling Points

- Outperforms larger models on the MultiPL-E benchmark despite its small size.

- Designed with responsible AI in mind: a PII redaction pipeline helps protect data privacy.

- Collaborative, transparent development involving many experts.
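The PII redaction mentioned above can be illustrated with a minimal regex-based sketch. It targets two common categories, email addresses and IPv4 addresses; the report's actual pipeline, categories, and replacement strings may differ, and the placeholders `<EMAIL>` and `<IP_ADDRESS>` and the function `redact_pii` are hypothetical.

```python
import re

# Hypothetical placeholder strings; the report's pipeline may use different ones.
EMAIL_PLACEHOLDER = "<EMAIL>"
IP_PLACEHOLDER = "<IP_ADDRESS>"

# Simplified patterns: a basic email shape and dotted-quad IPv4 addresses (0-255 per octet).
EMAIL_RE = re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b")
IPV4_RE = re.compile(
    r"\b(?:(?:25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)\.){3}"
    r"(?:25[0-5]|2[0-4]\d|1\d\d|[1-9]?\d)\b"
)


def redact_pii(source: str) -> str:
    """Replace email and IPv4 addresses in a source file with placeholders."""
    redacted = EMAIL_RE.sub(EMAIL_PLACEHOLDER, source)
    return IPV4_RE.sub(IP_PLACEHOLDER, redacted)


if __name__ == "__main__":
    sample = 'ADMIN = "jane.doe@example.com"\nHOST = "192.168.0.12"\n'
    print(redact_pii(sample))
```

Regexes like these produce false positives (a four-part version string such as `1.2.3.4` is also redacted) and miss PII they were not written for, which is why a production pipeline needs broader detection and manual evaluation.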

Use Cases and Applications of SantaCoder

Specific Examples

SantaCoder can be used for automatic code generation, code completion, and intelligent code suggestions, capabilities that can substantially ease the software development process.
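Code completion does not have to mean appending text at the end of a file. SantaCoder was also trained with a fill-in-the-middle (FIM) objective, so it can fill a gap given the code before and after it. A minimal sketch of building an infilling prompt, assuming the sentinel tokens `<fim-prefix>`, `<fim-suffix>`, and `<fim-middle>` (with hyphens) and the end-of-text token `<|endoftext|>`; verify these against the model card. The helper names `build_fim_prompt` and `extract_middle` are hypothetical.

```python
# Assumed sentinel tokens for SantaCoder infilling; check the model card before use.
FIM_PREFIX = "<fim-prefix>"
FIM_SUFFIX = "<fim-suffix>"
FIM_MIDDLE = "<fim-middle>"
END_OF_TEXT = "<|endoftext|>"  # assumed end-of-text token


def build_fim_prompt(prefix: str, suffix: str) -> str:
    """Arrange the code around a gap in prefix-suffix-middle order.

    The model is expected to generate the missing middle after FIM_MIDDLE.
    """
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}"


def extract_middle(generated: str) -> str:
    """Return the text after the middle sentinel, cut at the end-of-text token."""
    middle = generated.split(FIM_MIDDLE, 1)[-1]
    return middle.split(END_OF_TEXT, 1)[0]


if __name__ == "__main__":
    prefix = "def print_hello_world():\n    "
    suffix = "\n    print('Hello world!')"
    print(build_fim_prompt(prefix, suffix))
```

When decoding the model's output for infilling, keep special tokens (do not skip them) so that `extract_middle` can locate the sentinels; the recovered middle is then spliced between the original prefix and suffix.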

Industries and Sectors

Target industries include software development, IT services, education, and research. Companies building software tools and platforms could use SantaCoder to extend the capabilities of their products and services.

Case Studies or Success Stories

No case studies or success stories are available, but the benchmark results suggest that SantaCoder could be useful in many real-world applications.

Using SantaCoder

Step-by-Step Usage

- Obtain the models, released under the OpenRAIL license, from the link provided by the BigCode project.

- Load the model into your development environment.

- If you plan to fine-tune the model, preprocess your code dataset as described in the report.

- Use the model for code completion, code generation, or code suggestions.
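The loading and generation steps can be sketched with the Hugging Face `transformers` library. This is a minimal sketch, assuming the checkpoint is published on the Hugging Face Hub as `bigcode/santacoder` and that `transformers` and `torch` are installed; `load_santacoder` and `complete` are hypothetical helper names, not part of any official API.

```python
MODEL_ID = "bigcode/santacoder"  # assumed Hugging Face Hub ID for the released checkpoint


def load_santacoder(model_id: str = MODEL_ID):
    """Load the tokenizer and model (requires the transformers and torch packages).

    The checkpoint ships custom modeling code, so trust_remote_code=True is needed.
    """
    # Imported lazily so this module can be imported without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id, trust_remote_code=True)
    return tokenizer, model


def complete(tokenizer, model, prompt: str, max_new_tokens: int = 48) -> str:
    """Greedily extend `prompt` and return only the newly generated text."""
    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    output_ids = model.generate(
        input_ids,
        max_new_tokens=max_new_tokens,
        do_sample=False,  # greedy decoding for reproducible completions
        pad_token_id=tokenizer.eos_token_id,  # avoids the unset-pad-token warning
    )
    prompt_length = input_ids.shape[1]
    # generate() returns prompt + continuation; decode only the continuation.
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


# Example usage (downloads the checkpoint on first run):
#   tokenizer, model = load_santacoder()
#   print(complete(tokenizer, model, "def fibonacci(n):\n"))
```

Greedy decoding is used here because it makes completions deterministic; sampling (`do_sample=True` with a temperature) is the usual choice when you want several candidate suggestions.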

Best Practices and Tips

- Aggressively filter out near-duplicate data for better performance.

- Do not restrict training data to repositories with many GitHub stars; the report found that keeping only files from repositories with 5+ stars significantly hurt performance.
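Near-duplicate filtering can be sketched with MinHash, which estimates the Jaccard similarity between sets of token shingles. This is a minimal sketch under stated assumptions: the shingle size (5), number of hash functions (64), and similarity threshold (0.85) are illustrative, not values taken from the report, and the pairwise comparison stands in for the locality-sensitive hashing a large-scale pipeline would use. All function names are hypothetical.

```python
import hashlib
import re


def shingles(code: str, n: int = 5) -> set:
    """Extract word-like tokens and return the set of n-token shingles."""
    tokens = re.findall(r"\w+", code)
    if len(tokens) < n:
        return {" ".join(tokens)} if tokens else set()
    return {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}


def _hash64(text: str, salt: bytes) -> int:
    """64-bit hash; a different salt gives a different hash function."""
    digest = hashlib.blake2b(text.encode("utf-8"), digest_size=8, salt=salt).digest()
    return int.from_bytes(digest, "little")


def minhash_signature(items: set, num_perm: int = 64) -> list:
    """For each seeded hash function, keep the minimum hash over the set."""
    if not items:
        return [0] * num_perm  # empty files all share one signature
    return [
        min(_hash64(item, seed.to_bytes(4, "little")) for item in items)
        for seed in range(num_perm)
    ]


def estimated_jaccard(sig_a: list, sig_b: list) -> float:
    """The fraction of matching signature slots estimates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def near_dedup(files: list, threshold: float = 0.85) -> list:
    """Keep a file only if it is below `threshold` similarity to every kept file.

    Pairwise comparison is O(n^2); large pipelines use LSH banding instead.
    """
    kept, kept_sigs = [], []
    for code in files:
        sig = minhash_signature(shingles(code))
        if all(estimated_jaccard(sig, other) < threshold for other in kept_sigs):
            kept.append(code)
            kept_sigs.append(sig)
    return kept
```

Because tokens are extracted with `\w+`, files that differ only in whitespace or punctuation produce identical shingles and are treated as duplicates; files with small edits share most shingles and usually exceed the threshold.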

UI and Navigation

SantaCoder can be integrated into many development environments, where users interact with it through standard code interfaces and APIs.

How SantaCoder Works

Technical Overview

SantaCoder is a decoder-only transformer language model with 1.1 billion parameters, trained on the Java, JavaScript, and Python subsets of The Stack.
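The parameter count alone says little about inference cost. One architectural choice explored in the report is multi-query attention (MQA), in which all query heads share a single key/value head. The sketch below compares the key/value cache needed for standard multi-head attention (MHA) and for MQA. The layer count, head count, and head size are illustrative assumptions, not confirmed SantaCoder settings, and `AttentionConfig` and `kv_cache_bytes` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class AttentionConfig:
    n_layers: int        # number of transformer blocks
    n_query_heads: int   # attention heads used for queries
    n_kv_heads: int      # key/value heads: n_query_heads for MHA, 1 for MQA
    head_dim: int        # size of each attention head
    bytes_per_value: int = 2  # fp16/bf16 storage


def kv_cache_bytes(cfg: AttentionConfig, seq_len: int, batch_size: int = 1) -> int:
    """Bytes needed to cache keys and values (hence the factor 2) for all layers."""
    return (2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim
            * seq_len * batch_size * cfg.bytes_per_value)


# Illustrative sizes only (assumed, not taken from the report).
mha = AttentionConfig(n_layers=24, n_query_heads=16, n_kv_heads=16, head_dim=128)
mqa = AttentionConfig(n_layers=24, n_query_heads=16, n_kv_heads=1, head_dim=128)

if __name__ == "__main__":
    for name, cfg in [("MHA", mha), ("MQA", mqa)]:
        mib = kv_cache_bytes(cfg, seq_len=2048) / 2**20
        print(f"{name}: {mib:.0f} MiB of KV cache for one 2048-token sequence")
```

With these assumed sizes, the cache shrinks 16-fold (384 MiB for MHA versus 24 MiB for MQA), in exact proportion to the number of query heads sharing one key/value head. A smaller cache means less memory traffic per generated token, which is the usual motivation for MQA in code-completion models.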

Explanation of Algorithms and Models Used

The training pipeline filters out near-duplicate files and applies further preprocessing to the training data before training. Performance is evaluated on the MultiPL-E text-to-code benchmark.
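Code benchmarks such as MultiPL-E score a model by generating solutions and running them against unit tests, and results are commonly reported as pass@k. This sketch implements the widely used unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), for n generated samples of which c pass. The number of samples per problem and the example counts are hypothetical, not figures from the report, and the function names are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Probability that at least one of k samples, drawn without replacement
    from n generations of which c pass the tests, is correct.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list, k: int) -> float:
    """Average pass@k over problems; `results` holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For example, a problem where 5 of 10 samples pass has pass@1 = 0.5; averaging with a problem where no samples pass gives a benchmark score of 0.25.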

Workflow and Process Description

The development workflow consists of data collection, preprocessing, model training, and evaluation. Performance metrics and feedback are used iteratively to refine the models.

Pros and Cons of SantaCoder

Pros

- Performs exceptionally well on text-to-code benchmarks.

- Outperforms larger models despite its smaller size.

- Open access through the OpenRAIL license encourages community collaboration.

Cons

- Performance may be sensitive to the quality of the training data.

- Preprocessing must be done carefully to get the best results.

User Feedback and Reviews

Specific user feedback is not available, but the model's benchmark results and open access suggest it could be well received in the AI and coding communities.

Price of SantaCoder

SantaCoder is free to use. The models are released under the OpenRAIL license, which permits free use for innovation and collaboration, subject to the license's use restrictions.

Conclusion about SantaCoder

SantaCoder is a significant step in AI-based code generation, combining strong performance with openness. It was developed responsibly and collaboratively by a large team of experts. With benchmark results that beat larger models and openly released models, SantaCoder is well placed to make an impact on AI-assisted coding.

Further Updates and Development

Future updates may refine the models further and extend them to more programming languages, with continued attention to responsible AI practices.

Frequently Asked Questions

What is the BigCode project?

BigCode is a collaborative project focused on developing large language models for code responsibly.

What does the tech report say about the models?

The report describes models with 1.1 billion parameters, trained on the Java, JavaScript, and Python subsets of the training data and evaluated on the MultiPL-E text-to-code benchmark.

What were the major takeaways of the experiments conducted for SantaCoder?

The report found that more aggressive filtering of near-duplicates further improves performance and, surprisingly, that selecting only files from repositories with 5+ GitHub stars significantly deteriorates it.

Does the best model from the BigCode project outperform other open-source code generation models?

Yes. The best model outperforms InCoder-6.7B and CodeGen-Multi-2.7B on the Java, JavaScript, and Python portions of the MultiPL-E benchmark, despite being substantially smaller.

Where can I find the open-source models from the BigCode project?

The models are released under the OpenRAIL license and can be downloaded via the link provided by the BigCode project.