What is replit-code-v1-3b?

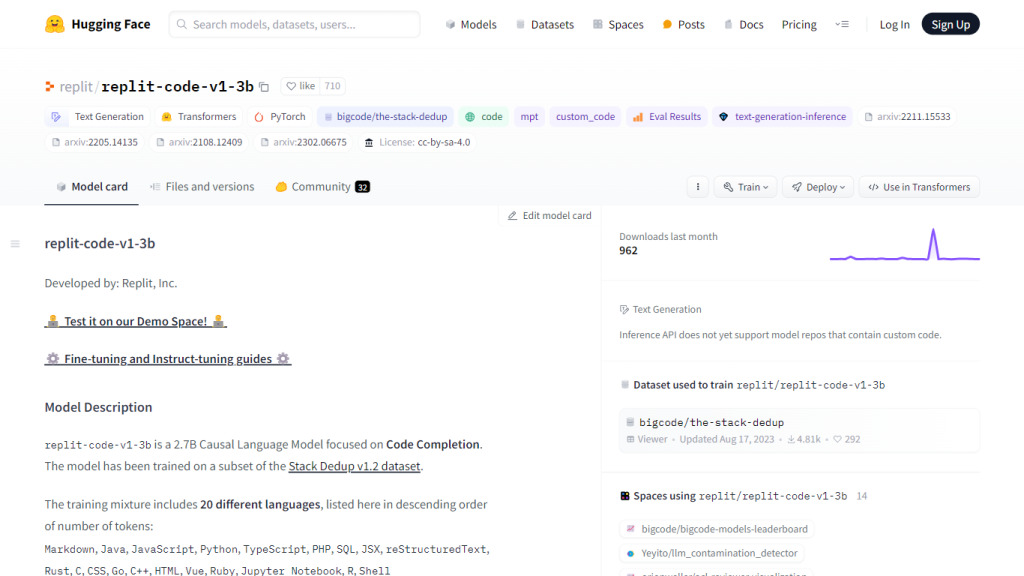

Replit-code-v1-3b is a 2.7 billion parameter causal language model built for code completion. It is hosted on the Hugging Face model hub and was trained on 20 programming languages. It uses techniques such as Flash Attention and ALiBi positional embeddings, which help make code generation fast and accurate. It is released with few commercial restrictions and is intended as a base model for fine-tuning on specific applications. The model checkpoint is licensed under CC BY-SA 4.0, which supports open-source collaboration and reuse.

Replit-code-v1-3b—Key Features & Benefits

Replit-code-v1-3b offers the following features for code completion:

- Model Specs: A 2.7 billion parameter model trained to support 20 programming languages.

- Intended Use: Open to anyone as a base model for application-specific fine-tuning, with few commercial usage restrictions.

- LLM Techniques: Flash Attention, ALiBi positional embeddings, and the LionW optimizer help boost its performance.

- User-Friendly Guides: Guides covering installation, usage, tokenization, and generation are provided.

- License and Credit: Released under CC BY-SA 4.0, so users must give appropriate credit, indicate changes, and share modifications under the same license.

Its main benefits are accurate and fast code completion, support for many programming languages, and the freedom to tune the model for specific needs without stringent commercial limitations.

Replit-code-v1-3b Use Cases and Applications

Replit-code-v1-3b can be applied in several settings across multiple industries:

- Software Development: Helps developers write code efficiently and accurately.

- Education: Gives students a learning tool for understanding and practicing coding in many languages.

- Research: Supports research projects and the development of new coding techniques.

Tech companies, educational institutions, and research labs can all benefit from integrating replit-code-v1-3b. Doing so may speed up code completion tasks, saving users time and reducing errors, which in turn improves productivity.

How to Use replit-code-v1-3b

The following is a step-by-step guide to using replit-code-v1-3b effectively:

- Install the einops, sentencepiece, torch, and transformers packages.

- Download the model checkpoint and vocabulary files from Hugging Face’s model hub.

- Load the model and tokenizer into your dev environment.

- Fine-tune the model to your application if necessary.

- Follow the user guide for detailed instructions on tokenization and code generation.

For best results, make sure your environment is set up correctly and follow all the instructions in the installation and usage guide.
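
The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not the official guide: it assumes the checkpoint is published on the Hugging Face hub under the id `replit/replit-code-v1-3b` and that it loads through the `transformers` auto classes with `trust_remote_code=True`. The helper names `generation_settings` and `complete_code`, and the sampling values, are illustrative choices made here.

```python
# Step 1 (dependencies), run from a shell:
#   pip install einops sentencepiece torch transformers

MODEL_ID = "replit/replit-code-v1-3b"  # assumed hub id; check the model page


def generation_settings(max_length=100, temperature=0.2, top_p=0.95):
    """Return sampling options for generate(); the values are illustrative."""
    return {
        "max_length": max_length,
        "do_sample": True,
        "temperature": temperature,
        "top_p": top_p,
        "num_return_sequences": 1,
    }


def complete_code(prompt, **overrides):
    """Load the model (downloads on first use) and return one completion."""
    # Imported lazily so this file can be imported without the heavy packages.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    # Assumption: the checkpoint ships custom model code, so transformers
    # must be allowed to run it via trust_remote_code=True.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, trust_remote_code=True)

    # Tokenize the prompt, generate a continuation, and decode it to text.
    input_ids = tokenizer.encode(prompt, return_tensors="pt")
    settings = generation_settings(**overrides)
    output_ids = model.generate(
        input_ids, eos_token_id=tokenizer.eos_token_id, **settings
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `complete_code("def fibonacci(n):")` would then download the weights and return the prompt followed by a sampled continuation. Loading the model inside the function keeps the sketch short; a real application would load it once and reuse it across calls.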

How replit-code-v1-3b Works

Replit-code-v1-3b combines the following model design, training data, and techniques:

- Causal Language Model: The model predicts the next token in a sequence from the tokens before it, which suits code completion.

- Training Dataset: Trained on Stack Dedup v1.2, a dataset covering a wide variety of programming languages, such as Java, Python, JavaScript, and C++.

- Algorithms and Models: Flash Attention and ALiBi positional embeddings improve performance and accuracy.
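
To make the ALiBi idea concrete: instead of adding position vectors to the token embeddings, ALiBi adds a penalty to each attention score that grows linearly with the distance between the query and the key, using a different slope for each attention head. The sketch below follows the general description from the ALiBi paper for a head count that is a power of two. It is plain Python for illustration only, not this model's actual implementation, and the function names are made up here.

```python
def alibi_slopes(num_heads):
    """Per-head slopes: a geometric sequence starting at 2^(-8/num_heads)."""
    # This closed form applies when num_heads is a power of two.
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch only covers power-of-two head counts")
    ratio = 2.0 ** (-8.0 / num_heads)
    return [ratio ** (h + 1) for h in range(num_heads)]


def alibi_bias(seq_len, slope):
    """Bias matrix for one head, combined here with the causal mask."""
    bias = []
    for i in range(seq_len):          # i indexes the query position
        row = []
        for j in range(seq_len):      # j indexes the key position
            if j <= i:
                # Linear penalty: 0 for the current token, more negative
                # the further back the key is.
                row.append(slope * (j - i))
            else:
                # Future positions are hidden from a causal model.
                row.append(float("-inf"))
        bias.append(row)
    return bias


slopes = alibi_slopes(8)
print(slopes[0], slopes[-1])
for row in alibi_bias(3, slopes[0]):
    print(row)
```

Each row of the bias matrix would be added to the raw attention scores for that query before the softmax, so nearby tokens are favored over distant ones without any learned position embedding.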

In practice, a code snippet is passed to the model, which predicts the next tokens one at a time and so builds up full code blocks that fit the provided context.
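
The loop behind that workflow can be sketched without any model at all. In the toy example below, `next_token` is a hypothetical stand-in for the real network: it looks up the follower of the last token in a tiny hand-written table, whereas a real causal language model scores every vocabulary entry using the whole context. Only the loop shape (predict one token, append it, repeat until an end marker or a length limit) reflects how generation proceeds; the table and token names are invented for illustration.

```python
# Hand-written "model": for each token, the single most likely next token.
TOY_TABLE = {
    "def": "add",
    "add": "(a, b):",
    "(a, b):": "return",
    "return": "a + b",
    "a + b": "<eos>",
}


def next_token(context):
    """Stand-in for a model forward pass; uses only the last token."""
    return TOY_TABLE.get(context[-1], "<eos>")


def generate(prompt_tokens, max_new_tokens=10):
    """Greedy autoregressive decoding: append one predicted token per step."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        token = next_token(tokens)
        if token == "<eos>":  # the end marker stops generation early
            break
        tokens.append(token)
    return tokens


print(" ".join(generate(["def"])))  # prints: def add (a, b): return a + b
```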

Pros and Cons: Replit-code-v1-3b

Like any other tool, replit-code-v1-3b has its pros and possible cons, listed below.

Pros:

- Performs code completion with high accuracy and speed.

- Supports a large number of programming languages.

- Open source with minimal commercial restrictions.

Cons:

- Requires installing several dependencies.

- Fine-tuning might be needed for some applications.

- The initial setup might be complex.

In general, user feedback highlights the model's effectiveness in improving coding efficiency but notes the initial setup as a minor hurdle.

Conclusion on Replit-code-v1-3b

Replit-code-v1-3b is a capable, general-purpose tool for code completion. Its techniques and multi-language support add to its usefulness. Because it is open source with minimal commercial restrictions, it should appeal to developers who want to improve their coding workflows. As the model evolves, future updates and community contributions look promising.

Replit-code-v1-3b FAQs

What is replit-code-v1-3b?

Replit-code-v1-3b is a large language model with 2.7 billion parameters, designed for code completion tasks in many programming languages.

What dataset is replit-code-v1-3b trained on, and what languages does it support?

It was trained on the Stack Dedup v1.2 dataset and supports languages such as Java, Python, JavaScript, and C++, among many others.

Can one use replit-code-v1-3b for commercial purposes?

Yes. Replit-code-v1-3b is intended to be used as a foundational model for application-specific fine-tuning, without strict commercial limitations.

What is the license for the replit-code-v1-3b model?

The model checkpoint and vocabulary file are under the Creative Commons license CC BY-SA 4.0; the source code files are under the Apache 2.0 license.

What dependencies are needed to use replit-code-v1-3b?

You will need to install the following libraries: einops, sentencepiece, torch, and transformers. The setup guide provides detailed steps.
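
A quick way to confirm those libraries are present before loading the model is to ask Python whether each one can be found. The snippet below is a small sketch using only the standard library; the helper name `missing_packages` is made up here, and it checks only that a package is importable, not that its version is compatible.

```python
import importlib.util

# The four libraries named above; import names match the install names.
REQUIRED_PACKAGES = ["einops", "sentencepiece", "torch", "transformers"]


def missing_packages(names=REQUIRED_PACKAGES):
    """Return the subset of `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
if missing:
    print("Missing packages, try: pip install " + " ".join(missing))
else:
    print("All required packages are importable.")
```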