What is the Prompt Token Counter?

The Prompt Token Counter is an OpenAI model token counter: a tool for working with the current generation of language models, such as OpenAI’s GPT-3.5. It counts the tokens used by a prompt and a response so that both stay within the model’s token limit. Because it lets you preprocess, count, and adjust the tokens in a prompt before sending it, it helps optimize both communication with the model and cost.

Key Features & Benefits of Prompt Token Counter

- Track Tokens: Precise token counts for prompts and responses, so that usage does not exceed the limit.

- Manage Tokens: Efficient handling of tokens for optimized model interactions.

- Pre-process Prompts: Preprocessing and adjusting prompts to fit within the allowed token count.

- Adjust Token Count: Adjusting responses to remain within token bounds for effective communication.

- Manage Effective Interactions: Refining the prompt-creation process for better interaction with the model.

With the Prompt Token Counter, you can manage tokens effectively and keep costs down while using OpenAI models. Its main selling point is iterative prompt refinement, which benefits developers, content creators, and researchers alike.
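As a rough illustration of the "Pre-process Prompts" feature, the sketch below collapses redundant whitespace before estimating the token count. Both helper names (`estimate_tokens`, `preprocess`) are hypothetical, and the estimate uses the common rule of thumb of about four characters per token for English text. That is an assumption made for illustration, not the tool's actual method.

```python
import math
import re


def estimate_tokens(text: str) -> int:
    """Rule-of-thumb estimate: about 4 characters per token (an assumption)."""
    return math.ceil(len(text) / 4)


def preprocess(prompt: str) -> str:
    """Collapse runs of whitespace into single spaces and strip both ends."""
    return re.sub(r"\s+", " ", prompt).strip()


raw = "  Summarize   the report.\n\n\nKeep it   short.  "
clean = preprocess(raw)

# The cleaned prompt carries the same instructions in fewer characters,
# so the estimated token count drops.
print(estimate_tokens(raw), estimate_tokens(clean))
```

A real tokenizer treats whitespace differently from this character-based estimate, so the actual saving will vary.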

Use Cases and Applications of Prompt Token Counter

The Prompt Token Counter is useful in the following cases:

- Compliance: When drafting queries for OpenAI’s GPT-3.5, the tool keeps an accurate count of the tokens in use, which helps you stay within the token limit.

- Cost Optimization: Managing the tokens in use lets you optimize the cost of working with OpenAI models.

- Optimizing the Creation of Prompts: The tool helps you fine-tune and readjust prompts iteratively, within the token limit, so that they convey the message clearly to the model.

The tool is therefore useful in fields such as artificial intelligence development, content creation, and academic research. For example, AI developers can make sure that their applications never exceed the token limit, content creators get help writing concise prompts, and researchers are better equipped to manage their interactions with the model.
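The cost-optimization use case comes down to simple arithmetic: usage is billed per token, so a shorter prompt costs less. The sketch below shows that calculation. The function name and both prices are placeholders chosen for illustration, not actual OpenAI rates.

```python
def estimate_cost(
    prompt_tokens: int,
    completion_tokens: int,
    prompt_price_per_1k: float,
    completion_price_per_1k: float,
) -> float:
    """Estimate one request's cost from token counts and per-1,000-token prices."""
    prompt_cost = prompt_tokens / 1000 * prompt_price_per_1k
    completion_cost = completion_tokens / 1000 * completion_price_per_1k
    return prompt_cost + completion_cost


# Placeholder prices for illustration only; check current OpenAI pricing.
PROMPT_PRICE = 0.001
COMPLETION_PRICE = 0.002

before = estimate_cost(1200, 300, PROMPT_PRICE, COMPLETION_PRICE)
after = estimate_cost(800, 300, PROMPT_PRICE, COMPLETION_PRICE)  # shorter prompt
print(f"before: {before:.4f}, after: {after:.4f}, saved: {before - after:.4f}")
```

The saving per request is small, but it scales with request volume.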

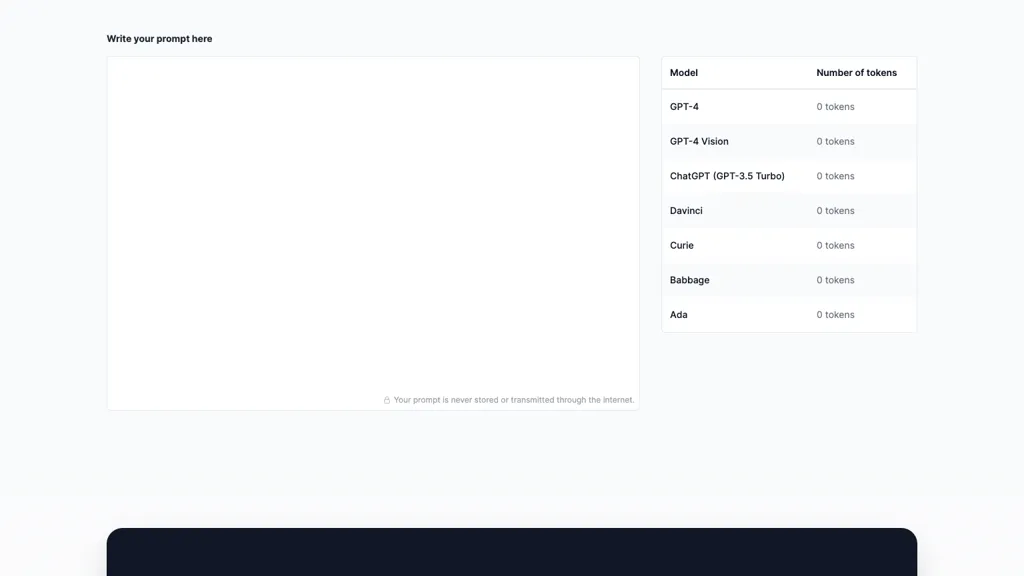

How to Use Prompt Token Counter

The Prompt Token Counter is easy to use:

- Enter your prompt: Drag and drop your prompt into the tool. The tokens in it are then counted automatically.

- Adjust if necessary: Refine and shorten your prompt if the token count goes over the limit.

- Iterate: Repeat this process until your prompt fits within the token limit.

Knowing the token limits and how tokenization works will give the best results. The user interface is designed to be intuitive and easy to navigate, which makes managing token usage straightforward.
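The count, adjust, and iterate steps above can also be sketched as a loop. This is a minimal illustration, not the tool's own logic: `count_tokens` is a stand-in that counts words and punctuation marks rather than real model tokens, and `trim_to_limit` is a hypothetical helper that drops trailing sentences until the prompt fits.

```python
import re


def count_tokens(text: str) -> int:
    """Rough stand-in count: words plus punctuation marks (not real BPE)."""
    return len(re.findall(r"\w+|[^\w\s]", text))


def trim_to_limit(prompt: str, max_tokens: int) -> str:
    """Drop trailing sentences until the prompt fits within max_tokens.

    A single remaining sentence is returned as-is even if it is too long.
    """
    sentences = re.split(r"(?<=[.!?])\s+", prompt.strip())
    while len(sentences) > 1 and count_tokens(" ".join(sentences)) > max_tokens:
        sentences.pop()  # adjust: remove the last sentence, then re-count
    return " ".join(sentences)


draft = "Explain tokenization. Give two examples. Also list common pitfalls."
print(count_tokens(draft))
print(trim_to_limit(draft, max_tokens=8))
```

In practice you would usually rewrite the prompt by hand rather than cut sentences mechanically. The loop only shows the re-count after every adjustment.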

How Prompt Token Counter Works

Prompt Token Counter tokenizes a prompt and counts the resulting tokens. Technically:

- Tokenization: The tool breaks the input text into individual tokens according to the model’s tokenization rules. It then returns the total token count for the prompt.

- Adjustment Mechanism: Any modification the user makes to the prompt is reflected immediately.

This workflow lets users keep track of their prompts and responses efficiently and keep their interactions within the OpenAI models’ token limits.
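The tokenize-then-count idea can be sketched in a few lines. The tokenizer here is a deliberately simple stand-in that splits text into words and punctuation marks. OpenAI models use a byte-pair encoding, so real counts will differ. The function names are assumptions made for this example.

```python
import re
from typing import Callable, List

# Stand-in tokenizer pattern (an assumption for illustration): one token per
# word or punctuation mark. Real model tokenizers split text differently.
_TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def approx_tokenize(text: str) -> List[str]:
    """Break text into rough word and punctuation tokens."""
    return _TOKEN_PATTERN.findall(text)


def count_tokens(text: str, tokenize: Callable[[str], list] = approx_tokenize) -> int:
    """Return how many tokens the given tokenizer produces for the text."""
    return len(tokenize(text))


prompt = "Summarize this article in two sentences."
print(approx_tokenize(prompt))
print(count_tokens(prompt))
```

Because `count_tokens` accepts any tokenizer function, a model-accurate encoder can be swapped in. With the `tiktoken` package installed, for instance, passing `tiktoken.encoding_for_model("gpt-3.5-turbo").encode` as `tokenize` should give counts that match the model.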

Prompt Token Counter: Pros and Cons

Below are pros and cons of the Prompt Token Counter:

Pros:

- Helps track and optimize token consumption.

- Avoids overspending tokens, which saves money.

- Helps construct short, concise prompts.

Cons:

- Has a learning curve for most new users.

- Requires an understanding of tokenization and of the number of tokens purchased.

Most users find the tool handy for fine-tuning their interactions with OpenAI models. Some, however, comment on the initial difficulty of understanding token limits and tokenization.

Conclusion on Prompt Token Counter

In short, the Prompt Token Counter is a must-have for developers using OpenAI language models. It provides robust features for tracking and managing token usage, optimizing costs, and ensuring effective communication with the model. It helps you write concise prompts and avoid exceeding the token limit, which makes it a worthwhile resource for developers, content creators, and researchers. With future developments and updates, it should continue to become more user-friendly and efficient.

Prompt Token Counter FAQs

Here are some frequently asked questions about the Prompt Token Counter:

- What is GPT-3.5’s maximum token limit?

  GPT-3.5’s maximum is 4,096 tokens. This includes both the input and the output.

- How do I count my prompt’s tokens?

  Just paste your prompt into the Prompt Token Counter, and it will count the tokens for you.

- Can this tool help reduce costs?

  Yes. It helps optimize the cost of using OpenAI models by managing token consumption effectively and preventing the token limit from being exceeded.
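Because the 4,096-token limit in the first answer covers both input and output, every token spent on the prompt is one fewer for the response. The sketch below makes that budget explicit. The function name is hypothetical, and the default limit is simply the GPT-3.5 figure quoted above.

```python
CONTEXT_LIMIT = 4096  # GPT-3.5 limit quoted above; covers input plus output


def max_response_tokens(prompt_tokens: int, context_limit: int = CONTEXT_LIMIT) -> int:
    """Return how many tokens remain for the response after the prompt."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    # A prompt at or over the limit leaves no room for a response.
    return max(context_limit - prompt_tokens, 0)


print(max_response_tokens(3500))  # 596
print(max_response_tokens(5000))  # 0
```

A result of 0 means the prompt needs to be shortened before the model can answer at all.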