What is Phi-2?

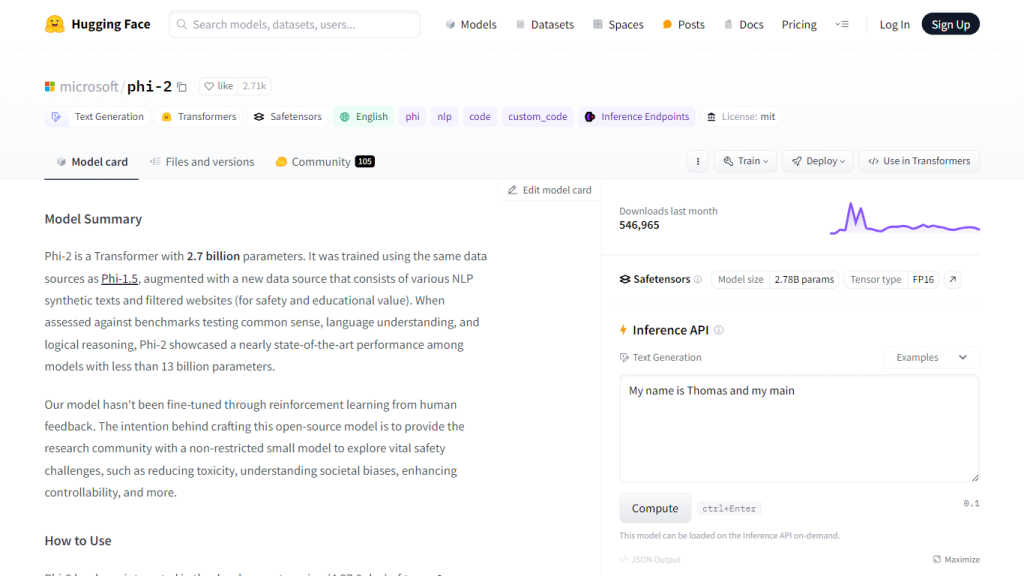

Developed by Microsoft, Phi-2 is a Transformer-based language model with 2.7 billion parameters. It is available on Hugging Face and was trained on a dataset that combines synthetic NLP texts with filtered web sources.

On benchmarks that test common sense, language understanding, and logical reasoning, Phi-2 performs near the state of the art among models in its size class (fewer than 13 billion parameters). It is designed mainly for generating English text, which makes it useful for natural language processing tasks and for coding.

Phi-2 Key Features & Benefits:

- Model Architecture: Phi-2 is based on a Transformer architecture with 2.7 billion parameters and performs robustly on language understanding and logical reasoning tasks.

- Versatile Applications: It handles question answering, chat, and code prompts, which makes it a versatile tool for a wide range of tasks.

- Open Source: The model is released under the permissive MIT license, which supports open science and the democratization of AI.

- Integration: It is integrated into the transformers library, starting with the development version 4.37.0.dev.
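The 2.7 billion figure can be sanity-checked with a rough parameter count. The sketch below assumes configuration values believed to match Phi-2's published `config.json` (hidden size 2560, 32 layers, feed-forward size 10240, vocabulary 51200, one LayerNorm per block, biased projections, and an untied output head); treat these values, and the helper names, as assumptions to verify against the Hugging Face repository.

```python
# Rough parameter count for a decoder-only Transformer.
# ASSUMPTION: these values are taken to match Phi-2's config.json;
# verify them against the model repository before relying on the result.
HIDDEN = 2560   # model width d
LAYERS = 32     # number of Transformer blocks
MLP = 10240     # feed-forward inner size (4 * d)
VOCAB = 51200   # tokenizer vocabulary size


def block_params(d: int, ff: int) -> int:
    """Weights plus biases of one block: four attention projections
    (Q, K, V, output), a two-layer MLP, and a single LayerNorm."""
    attention = 4 * (d * d + d)
    mlp = (d * ff + ff) + (ff * d + d)
    layer_norm = 2 * d  # scale and shift vectors
    return attention + mlp + layer_norm


def total_params(d: int, layers: int, ff: int, vocab: int) -> int:
    """All blocks plus input embeddings, an untied output head with
    bias, and the final LayerNorm."""
    embeddings = vocab * d
    lm_head = vocab * d + vocab
    final_norm = 2 * d
    return layers * block_params(d, ff) + embeddings + lm_head + final_norm


n = total_params(HIDDEN, LAYERS, MLP, VOCAB)
print(f"{n:,} parameters (~{n / 1e9:.2f} B)")  # 2,779,683,840 parameters (~2.78 B)
```

Under these assumptions, roughly 90% of the parameters sit in the 32 Transformer blocks and the rest in the embedding and output matrices.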

Phi-2's advantages include high-quality text generation and strong language understanding and reasoning. Because it is open source, the community can contribute to it and build on it in further AI research.

Use Cases and Applications of Phi-2

The Phi-2 model has several use cases and applications. Among them are the following:

- Question Answering: Gives relevant, informative answers to user questions.

- Chatbots: Makes customer interactions more intelligent and engaging.

- Code Generation: Provides developers with code snippets or solutions to the coding problems they are working on.

Integrating Phi-2 into customer support, education, or software development could noticeably improve workflows. Case studies have reported positive results in building more efficient customer support systems and in generating educational content.
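The three use cases map onto three prompt styles. The helpers below build prompts in the QA, chat, and code formats; the templates ("Instruct: ... Output:", alternating "Alice:"/"Bob:" turns, and a function signature followed by a docstring) are assumed to follow the examples on the Phi-2 Hugging Face model card, and the function names are hypothetical.

```python
# Prompt builders for the three formats described for Phi-2.
# ASSUMPTION: templates follow the model card's examples; adjust if needed.


def qa_prompt(question: str) -> str:
    """QA / instruction format: the model continues after 'Output:'."""
    return f"Instruct: {question}\nOutput:"


def chat_prompt(turns: list[tuple[str, str]], next_speaker: str = "Bob") -> str:
    """Chat format: one 'Name: text' line per turn, ending with the
    speaker whose reply the model should write."""
    lines = [f"{speaker}: {text}" for speaker, text in turns]
    lines.append(f"{next_speaker}:")
    return "\n".join(lines)


def code_prompt(signature: str, docstring: str) -> str:
    """Code format: a function signature plus docstring; the model is
    expected to complete the function body."""
    return f'{signature}\n   """\n   {docstring}\n   """'


print(qa_prompt("Write a detailed analogy between mathematics and a lighthouse."))
print(chat_prompt([("Alice", "Any tips for staying focused while studying?")]))
print(code_prompt("def print_prime(n):", "Print all primes between 1 and n"))
```

Keeping the templates in small functions makes it easy to swap them if a different prompt style turns out to work better for a given task.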

How to Use Phi-2

Using Phi-2 requires the development version of the transformers library. The setup steps are:

- First, install the required version of the transformers library: 4.37.0.dev.

- Pass trust_remote_code=True to from_pretrained() when loading the model and tokenizer.

- Use Phi-2 in the application you need: question answering, chat, or code generation.
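The setup steps can be sketched in Python. This minimal example assumes PyTorch and a transformers build that includes Phi-2 (4.37.0.dev or later) are installed, and that the model lives under the Hugging Face repository ID `microsoft/phi-2`; it loads on CPU in float32 for simplicity. `from_pretrained`, `generate`, and `batch_decode` are standard transformers calls, while `load_phi2` and `generate_text` are hypothetical helper names.

```python
# Minimal sketch: load Phi-2 with Hugging Face transformers and generate text.
# ASSUMPTIONS: repository ID "microsoft/phi-2", transformers >= 4.37.0.dev,
# PyTorch installed, CPU inference in float32.
MODEL_ID = "microsoft/phi-2"


def load_phi2():
    """Download the weights on the first call; return (model, tokenizer).
    Imports live here so this module can be imported without them."""
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype=torch.float32,  # float32 keeps CPU inference simple
        trust_remote_code=True,     # as the setup steps require for 4.37.0.dev
    )
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    return model, tokenizer


def generate_text(model, tokenizer, prompt: str, max_length: int = 200) -> str:
    """Tokenize the prompt, generate up to max_length tokens in total
    (prompt included), and decode the first sequence back to text."""
    inputs = tokenizer(prompt, return_tensors="pt", return_attention_mask=False)
    outputs = model.generate(**inputs, max_length=max_length)
    return tokenizer.batch_decode(outputs)[0]


# Example usage (downloads several GB of weights on the first run):
# model, tokenizer = load_phi2()
# print(generate_text(model, tokenizer, "Instruct: Explain recursion briefly.\nOutput:"))
```

On a GPU you would typically load the weights in half precision and move both the model and the tokenized inputs to the device; the CPU path is chosen here only to keep the sketch short.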

Users should review and verify the outputs, especially code and factual statements, and check carefully for inaccuracies and bias.
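For generated Python code, part of that review can be automated. The hypothetical helper below parses the code with the standard `ast` module and flags any import outside a small allowlist. The allowlist (`typing`, `math`, `random`, `collections`, `datetime`, `itertools`) is an assumption based on the Phi-2 model card's note that its Python training data mostly uses these packages; passing this check does not mean the code is correct.

```python
import ast

# ASSUMPTION: packages the model card describes as common in Phi-2's
# Python training data; anything else is flagged for manual review.
COMMON_PACKAGES = {"typing", "math", "random", "collections", "datetime", "itertools"}


def review_generated_code(source: str) -> list[str]:
    """Return issues found in model-generated Python code. An empty list
    only means the code parses and imports nothing unusual."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"syntax error on line {exc.lineno}: {exc.msg}"]

    issues = []
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            modules = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            modules = [node.module or "(relative import)"]
        else:
            continue
        for module in modules:
            # Compare only the top-level package, e.g. "collections.abc" -> "collections"
            if module.split(".")[0] not in COMMON_PACKAGES:
                issues.append(f"verify usage of package '{module}'")
    return issues


print(review_generated_code("import numpy as np\nprint(np.arange(3))"))
```

A fuller review would also run the code against a few known inputs, but a syntax and import check is a cheap first filter.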

How Phi-2 Works

Phi-2 uses a Transformer-based architecture with 2.7 billion parameters, which suits language understanding and logical reasoning. It was trained on a 250-billion-token dataset using 96 A100-80G GPUs over two weeks.
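The training figures support some back-of-the-envelope arithmetic. This sketch uses the values from the text (96 GPUs, 14 days, a 250-billion-token dataset) and adds two assumptions: about 1.4 trillion training tokens in total, as listed on the Phi-2 model card (that is, several passes over the dataset), and the common approximation that training costs roughly 6 × parameters × tokens FLOPs. The results are estimates, not reported numbers.

```python
# Back-of-the-envelope training arithmetic for Phi-2.
# From the text: 96 A100-80G GPUs, 14 days, 250B-token dataset.
# ASSUMPTIONS: ~1.4T training tokens (model card), 2.7e9 parameters,
# and training FLOPs ~= 6 * params * tokens.
GPUS = 96
DAYS = 14
DATASET_TOKENS = 250_000_000_000
TRAINING_TOKENS = 1_400_000_000_000
PARAMS = 2.7e9

gpu_hours = GPUS * DAYS * 24
gpu_seconds = gpu_hours * 3600
passes = TRAINING_TOKENS / DATASET_TOKENS          # passes over the dataset
tokens_per_gpu_second = TRAINING_TOKENS / gpu_seconds
train_flops = 6 * PARAMS * TRAINING_TOKENS         # 6ND approximation
flops_per_gpu_second = train_flops / gpu_seconds   # average sustained rate

print(f"GPU-hours:           {gpu_hours:,}")        # 32,256
print(f"passes over dataset: {passes:.1f}")         # 5.6
print(f"tokens / GPU / s:    {tokens_per_gpu_second:,.0f}")
print(f"training FLOPs:      {train_flops:.2e}")    # 2.27e+22
print(f"FLOP/s per GPU:      {flops_per_gpu_second:.2e}")
```

The 6ND rule ignores attention's sequence-length term and any recomputation, so the FLOP figures are order-of-magnitude estimates only.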

The dataset mixes synthetic NLP texts with filtered web sources to give balanced, comprehensive coverage. This broad training helps Phi-2 produce high-quality text and perform well on several NLP benchmarks.

Pros and Cons of Phi-2

Like any advanced AI model, Phi-2 has advantages and disadvantages. The most significant are:

Pros

- Strong language understanding and logical reasoning.

- Versatile applications in QA, chat, and code generation.

- Open source under the MIT License, which invites community-driven contributions.

Cons

- May generate incorrect code or factually wrong information.

- Generated output may reflect societal biases.

- Outputs can be verbose and may need trimming or refinement by the user.
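Verbosity can be partly handled with post-processing. The hypothetical helper below drops the echoed prompt and keeps text only up to the first stop marker. The markers (a new "Instruct:", "Alice:", or "Bob:" turn, or the `<|endoftext|>` token) are illustrative assumptions based on the QA and chat prompt formats and on the end-of-text token Phi-2's tokenizer is believed to use; this is a heuristic, not a guaranteed fix.

```python
# Heuristic trimming of verbose base-model output.
# ASSUMPTION: these stop markers are illustrative; tune them for your prompts.
DEFAULT_STOPS = ("\nInstruct:", "\nAlice:", "\nBob:", "<|endoftext|>")


def first_answer(generated: str, prompt: str, stops: tuple[str, ...] = DEFAULT_STOPS) -> str:
    """Remove the echoed prompt, then keep text up to the earliest stop marker."""
    text = generated[len(prompt):] if generated.startswith(prompt) else generated
    cut = len(text)
    for marker in stops:
        index = text.find(marker)
        if index != -1:
            cut = min(cut, index)  # keep the earliest marker position
    return text[:cut].strip()


prompt = "Instruct: Name a prime number.\nOutput:"
raw = prompt + " 7 is prime.\nInstruct: Name another one.\nOutput: 11"
print(first_answer(raw, prompt))  # 7 is prime.
```

Cutting at the first new turn keeps the model's first answer and discards the extra exchanges a base model tends to continue with.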

Overall, user feedback praises Phi-2's capabilities but stresses careful verification of outputs in professional or critical applications.

Conclusion about Phi-2

In short, Phi-2 is a milestone in artificial intelligence. It has a robust architecture and extensive training that suit a variety of NLP and coding applications. Although users should watch for inaccuracies and biases, its benefits far outweigh its limitations.

Given the rapid pace of AI development, further updates to Phi-2 or newer versions can be expected soon, bringing greater capabilities and, hopefully, many more real-world applications.

Phi-2 FAQs

What is Phi-2?

Phi-2 is a Transformer model from Microsoft with 2.7 billion parameters that performs well on common sense, language understanding, and logical reasoning.

What is Phi-2 used for?

It is best suited to question answering, chat, and code prompts, and it integrates readily with the transformers library.

What are the limitations of Phi-2?

Even with state-of-the-art capabilities, Phi-2 may produce incorrect code or factually wrong information. Users should treat its output as a suggestion and verify its correctness.

Has Phi-2 been fine-tuned?

No. Phi-2 is a base model and has not been fine-tuned with reinforcement learning from human feedback (RLHF). It can be used directly through the development version of the transformers library.

Is Phi-2 available for open-source use?

Yes. Phi-2 is released under the MIT License, which permits open-source use and encourages contributions to AI research.