What is PaLM-E?

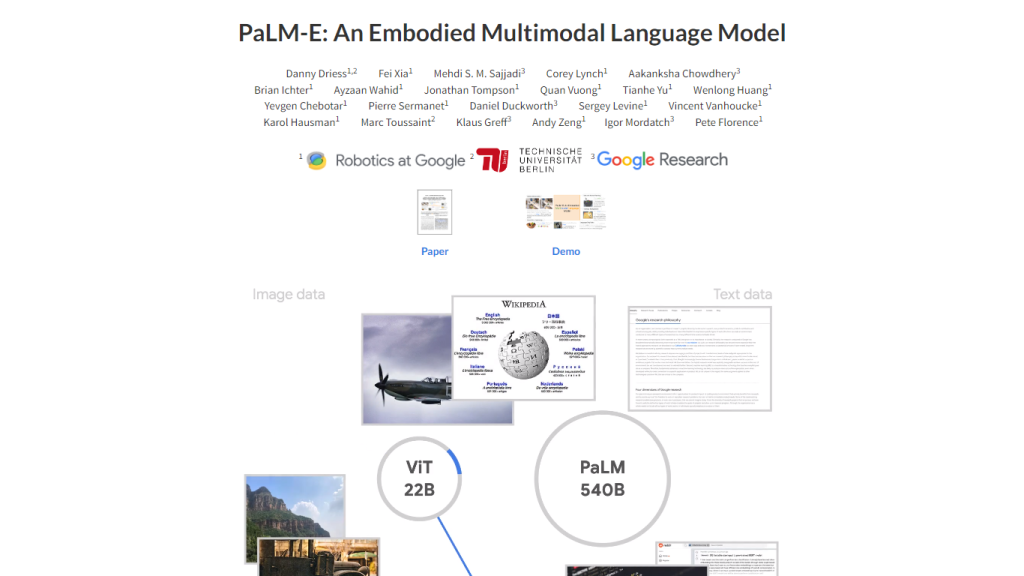

PaLM-E defines this new Embedded Multimodal Language Model, which seamlessly merges sensor information from the physical world with language models for accomplishing advanced AI-driven robotic tasks. As projected in the name, “Projection-based Language Model Embodied” naturally marries textual input with continuous sensory information, such as visual and state estimation. By coupling these together, it allows a full understanding and interaction with the world.

Designed to support a wide range of applications, physical manipulation planning represents the tip of the iceberg with regards to the potential for such massive multimodal LMs. In settings where they will experience a similarly broad distribution of behavior across task domains. Of all the models released as part of this project, PaLM-E-562B is both the largest, with 562B of parameters, and most remarkable, not only reaching state-of-the-art in tasks expecting robotic manipulation and spanning modalities of input by virtue of its large-scale multimodal context, but also at achieving state-of-the-art performance in visual-language tasks like OK-VQA while keeping strong general language skills.

Major Features and Benefits of PaLM-E

Key Features:

- End-to-End Training: Sensor modalities are combined together with text within multimodal sentences; training is performed jointly with a pre-trained large language model. General multimodal capabilities, embodied by the ability to address different real-world tasks by fusing vision, language, and state estimation. Works over several observation modalities: several types of sensor inputs, versatile over multiple robotic embodiments.

- Positive Transfer Learning: Benefits from training across diverse language and visual-language datasets.

- Specialization and Scalability: The PaLM-E-562B model has been specialized more for performance on visual-language tasks while retaining broad language capabilities.

Using PaLM-E has the following benefits: advanced performance of complex robotic tasks, improved interaction with the physical environment, and improved performance in visual-language tasks. Its unique selling proposition is that it easily integrates various sensor modalities and adapts to different robotic forms, making this tool very versatile in almost everything.

Use Cases and Applications of PaLM-E

PaLM-E can be applied across a vast range of use cases, including but not limited to the following:

- Robotic Manipulation Planning helps robots plan the execution of complex manipulation tasks in dynamic environments.

- Visual Question Answering supports robots in making sense of and formulating accurate responses to their surroundings through visual queries.

- Captioning generates a descriptive caption for images or scenes, thus improving understanding and communication.

This will include manufacturing, healthcare, logistics, and service robotics, among many others. With this, the adaptability and versatility of the model make it a very valuable asset for any industry requiring advanced robotic interaction and language understanding.

How to Use PaLM-E

There are various steps and best practices that can be followed while using PaLM-E in order to leverage it the most:

- Initial Setup: Connect and set up the needed inputs of sensors and hardware.

- Integration into Current Systems: Integrate PaLM-E into your robotic systems and their current software infrastructures.

- Training and Calibration: Train the model on your tasks using the provided data and retrain it for the best performance in your environment.

- Performing Tasks: Deploy a model to execute desired tasks, such as manipulation planning or visual question answering.

- Observation and Refinement: Observe the further performance of the model and fine-tune functionalities to capture better accuracy and efficiency.

The interface and navigation of the PaLM-E system are such that users will readily be able to tap into and use all the powerful features of this application with ease for an easy user experience.

How PaLM-E Works

The architecture behind the technology used in the making of PaLM-E is a very advanced technical stack, which includes multi-modal sensor inputs along with a pre-trained large language model. The concept is briefly explained below in some technical detail:

- The Underlying Technology: It integrates continuous sensory information from perceptual scenes obtained in the visual and state estimation, textual inputs into one whole model that provides comprehensive meaning of the situation.

- Algorithm and Model: Advanced algorithms and machine learning models used to process and interpret multimodal data.

- Workflow and Process: The workflow operates on collecting data, integrating it, training it, and deploying the data such that it gives a continuous interactive process between the robot and its surroundings.

Pros and Cons of PaLM-E

Much like with any technology, the Pros and possible Cons of PaLM-E are as follows:

- Pros include the advanced performance in complex robotic and vision-language tasks; flexibly integrated with various sensor modalities and robotic forms; and the good positive transfer learning benefits derived from such diverse training datasets.

- The cons include steep computational resources during both training and deployment, with possible limitations in certain types of environments or with certain sensor inputs.

Overall, the feedback of users is positive. They notice quite a number of impressive capabilities and versatility, though some users note that resources may be high challenge requirements for running this model.

Conclusion on PaLM-E

In all, PaLM-E is a huge leap forward in the domain of multimodal embodied language models. Its flexibility in fusing multiple sensor modalities with a pre-trained large language model makes the execution of high-level robotic and visual-language tasks efficient. Though the cost regarding computational resources is high, the benefits are way higher than the probable drawbacks. The future scope is likely to upgrade its capabilities even further and make them all useful and resilient in industries where advanced robotic interactions are demanded.

PaLM-E FAQs

-

What is PaLM-E project about?

The PaLM-E project enables robots to reason and perform hard tasks by immersing real continuous sensor modalities into their living environment with language models. -

What is the model designed to do?

The project on the pre-training of PaLM-E-562B, with 562 billion parameters achieve best results on rigorous Vision and Language tasks like OK-VQA with retained flexible language skills. -

What does the acronym PaLM-E stand for?

PaLM-E stands for Projection-based Language Model Embodied, with PaLM being the name of the pre-trained model. -

Does PaLM-E benefit from learning transfer?

Yes, the PaLM-E has achieved positive benefits for transfer learning while learning from the diverse network ranks of the Internet-scale language, vision, and visual-language domains. -

What can PaLM-E do; what kinds of tasks or capabilities has PaLM-E been trained for?

PaLM-E has been trained for tasks such as robotic manipulation planning, visual question answering, and captioning.