What Are Open Pre-trained Transformers?

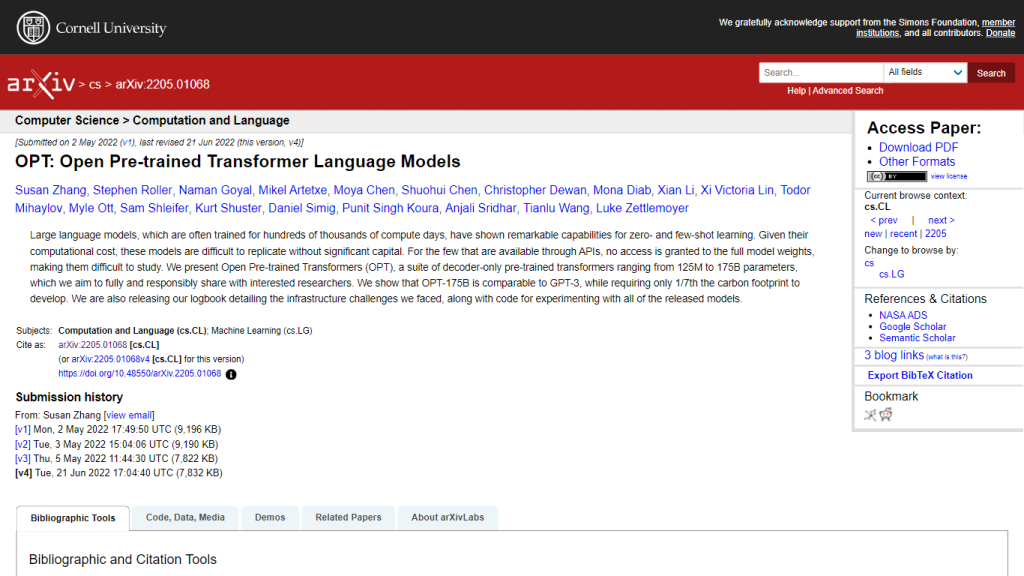

Open Pre-trained Transformers (OPT) are a suite of decoder-only pre-trained language models ranging from 125 million to 175 billion parameters. They perform well in zero- and few-shot learning and have demonstrated strong capabilities across a wide array of language tasks. The largest model, OPT-175B, is intended as an openly available counterpart to large-scale language models such as GPT-3, to which it is comparable in size and performance. The larger models in the suite need considerable computational resources to run locally.

A notable characteristic of OPT is its smaller ecological impact during development: OPT-175B was developed with only about one-seventh of GPT-3's carbon footprint. The developers of OPT have committed to sharing the models fully and responsibly, releasing not only the model weights but also a detailed logbook of the development challenges and the code needed for experimentation.

Key Features & Benefits of OPT

High-Capability Models: OPT models perform well in zero- and few-shot learning.

Range of Model Sizes: The OPT suite spans models from 125 million to 175 billion parameters (125M, 350M, 1.3B, 2.7B, 6.7B, 13B, 30B, 66B, and 175B).

Full Transparency: Model weights and development details are shared with the research community.

Greener Development: OPT-175B was developed with a significantly smaller carbon footprint than comparable models such as GPT-3.

Released Resources: A detailed logbook along with code is provided for researchers.
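As a rough check on these sizes, a common back-of-the-envelope estimate for a decoder-only transformer counts about 12·L·d² weights in its L blocks of hidden size d (4d² for the attention projections and 8d² for a feed-forward layer with a 4d inner size), plus the token and position embeddings. The sketch below applies this approximation to three OPT configurations. The layer counts and hidden sizes follow the OPT paper; the vocabulary size of 50,272 and the 2,048 learned positions are assumptions based on the Hugging Face configuration, and biases and layer norms are ignored.

```python
def estimate_params(n_layers: int, d_model: int,
                    vocab_size: int = 50_272, n_positions: int = 2_048) -> int:
    """Rough parameter count for a decoder-only transformer (biases and layer norms ignored)."""
    attention = 4 * d_model * d_model            # query, key, value and output projections
    feed_forward = 2 * d_model * (4 * d_model)   # up- and down-projection with a 4x inner size
    blocks = n_layers * (attention + feed_forward)
    embeddings = (vocab_size + n_positions) * d_model  # token + learned position embeddings
    return blocks + embeddings


# (number of layers, hidden size) as listed in the OPT paper
OPT_CONFIGS = {
    "OPT-125M": (12, 768),
    "OPT-1.3B": (24, 2048),
    "OPT-175B": (96, 12288),
}

for name, (layers, hidden) in OPT_CONFIGS.items():
    print(f"{name}: ~{estimate_params(layers, hidden) / 1e6:,.0f}M parameters")
```

Under these assumptions the estimates land close to the nominal sizes (about 125M, 1,315M, and 174,589M), and for the larger models nearly all of the parameters sit in the stacked transformer blocks rather than in the embeddings.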

OPT Use Cases and Applications

OPT models can be applied in many scenarios, particularly where zero- and few-shot learning is advantageous. Example use cases include:

- NLP tasks: text generation, translation, and summarization.

- Customer support: systems that need to understand customer messages and generate human-like replies.

- Content creation: tools that assist writers by suggesting and drafting text.

- Academic research: analyzing and making sense of large volumes of text data.

Industries such as technology, healthcare, finance, and education can benefit from these capabilities, and case studies and success stories have reportedly been cited that show OPT's effectiveness and efficiency in practical applications.

How to Use OPT

Using OPT models typically involves the following steps:

- Access the Models: Download the model weights and necessary code from the official release.

- Set Up the Environment: Make sure the required hardware (such as GPUs for the larger models) and software dependencies are in place.

- Load the Model: Use the provided code to load the model into your working environment.

- Fine-tune: Optionally, train the model further on task-specific data.

- Deploy: Integrate the model into an application or system for practical use.
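The access and loading steps can be sketched with the Hugging Face `transformers` library (plus PyTorch), which is an assumption here: it is one common way to obtain the smaller OPT checkpoints, published under `facebook/opt-*` IDs on the Hugging Face Hub, and not necessarily the official release route described above. The `generate` helper and its defaults are illustrative, and fine-tuning is not shown.

```python
# Hugging Face Hub IDs for OPT checkpoints; OPT-175B is omitted from this sketch.
OPT_HUB_IDS = {
    size: f"facebook/opt-{size}"
    for size in ("125m", "350m", "1.3b", "2.7b", "6.7b", "13b", "30b", "66b")
}


def generate(prompt: str, size: str = "125m", max_new_tokens: int = 30) -> str:
    """Hypothetical helper: load an OPT checkpoint and greedily continue `prompt`."""
    # Imported lazily so the mapping above can be used without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = OPT_HUB_IDS[size]
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(model_id)

    # Tokenize into PyTorch tensors, generate without sampling, and decode back to text.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens, do_sample=False)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `generate("Open Pre-trained Transformers are")` downloads the 125M checkpoint on first use and returns the prompt followed by up to 30 greedily chosen tokens. Larger sizes follow the same pattern but need correspondingly more memory.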

For best results, follow the tips and best practices in the documentation. Getting familiar with the provided interfaces and tooling helps in exploiting the model's full potential.

How OPT Works

OPT models use a decoder-only transformer architecture. They are trained to predict the next token in a sequence from all of the tokens before it, and they generate text by repeating this prediction one token at a time, which lets them process and produce human-like text.
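The next-token loop can be illustrated without a neural network. In the sketch below, a toy word-level bigram counter is a hypothetical stand-in for the transformer: `predict_next` receives the whole prefix, as OPT does, but the toy model only looks at the last word, whereas OPT attends to all previous tokens and works on subword tokens rather than words. The decoding loop itself (predict, append, repeat) is the autoregressive procedure described above, here with greedy selection of the most likely continuation.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional

# Tiny illustrative corpus for the toy model.
CORPUS = [
    "the model predicts the next word",
    "the next word is appended to the sequence",
]


def train_bigram(corpus: List[str]) -> Dict[str, Counter]:
    """Toy stand-in for a language model: count which word follows which."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(model: Dict[str, Counter], prefix: List[str]) -> Optional[str]:
    """Return the most likely next word (the toy model only uses the last word of the prefix)."""
    candidates = model.get(prefix[-1])
    if not candidates:
        return None  # no known continuation
    return candidates.most_common(1)[0][0]


def generate_greedy(model: Dict[str, Counter], prompt: str, max_new_words: int = 5) -> str:
    """Autoregressive decoding: predict one word, append it, and repeat."""
    words = prompt.split()
    for _ in range(max_new_words):
        next_word = predict_next(model, words)
        if next_word is None:
            break
        words.append(next_word)
    return " ".join(words)


model = train_bigram(CORPUS)
print(generate_greedy(model, "the"))
```

Each generated word becomes part of the input for the next prediction, which is what makes the generation autoregressive.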

The typical workflow is to pre-train the model on a large text corpus so that it learns the patterns and subtleties of language. The model can then perform new tasks with little or no additional training data: in zero-shot learning the task is only described in the prompt, and in few-shot learning a handful of worked examples are included in the prompt at inference time. Both the development process and the technology itself are well documented, allowing a close look at the algorithms and models used.
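Because zero- and few-shot learning happen entirely in the prompt, the difference between them comes down to how the input text is assembled. The hypothetical `build_prompt` helper below produces a zero-shot prompt when given no examples and a few-shot prompt otherwise; the `Input:`/`Output:` layout is an illustrative assumption, not a format prescribed by OPT. The resulting string would be passed to the model, which continues the text after the final `Output:`.

```python
from typing import List, Tuple


def build_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a zero-shot prompt (no examples) or a few-shot prompt (with examples)."""
    lines = [instruction, ""]
    # Each worked example shows the model the expected input/output pattern.
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The query ends with an open "Output:" for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


zero_shot = build_prompt("Translate English to French.", [], "cheese")
few_shot = build_prompt(
    "Translate English to French.",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(few_shot)
```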

OPT Pros and Cons

Pros of OPT:

- High performance on language tasks with minimal additional data

- A choice of model sizes to suit different needs and resources

- Transparency in sharing model weights and development details

- Reduced environmental impact compared to other large language models

Potential disadvantages or limitations of OPT include:

- High computational requirements for the largest models

- Difficulty of fine-tuning and deploying the models for specific tasks
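To put the computational requirements in numbers, the memory needed just to store a model's weights is roughly the parameter count times the bytes per parameter. The sketch below assumes nominal parameter counts and decimal gigabytes (10^9 bytes) and ignores activations and other runtime overhead; fine-tuning needs considerably more, since gradients and optimizer states must be stored as well.

```python
# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str = "fp16") -> float:
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Nominal sizes of three OPT models (assumed round numbers).
for name, n_params in [("OPT-125M", 125e6), ("OPT-13B", 13e9), ("OPT-175B", 175e9)]:
    print(f"{name}: {weight_memory_gb(n_params):.2f} GB of weights in fp16")
```

At 2 bytes per parameter, OPT-175B needs about 350 GB for its weights alone, well beyond the memory of a single GPU, while OPT-125M needs about 0.25 GB.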

Most user feedback and reviews describe OPT models as very effective and original, although some users point out that they are resource-intensive.

Conclusion on OPT

In summary, the Open Pre-trained Transformers (OPT) models represent a significant advancement in the field of language models, combining high performance with accessibility and eco-friendly development. Their range of sizes, detailed documentation, and supporting resources make them an attractive option for various applications.

Future improvements and updates could make OPT models more capable and user-friendly, helping them stay on par with, or ahead of, the many pre-trained language models already available.

OPT FAQs

What are Open Pre-trained Transformers?

Open Pre-trained Transformers are a suite of decoder-only pre-trained language models for a wide range of language tasks, which their developers aim to share fully and responsibly with the research community.

What is the parameter range of OPT models?

OPT models range from 125 million to 175 billion parameters, catering to different research requirements and computational budgets.

What is unique about the OPT-175B model?

OPT-175B is the largest model in the suite. It is roughly the same size as GPT-3 and performs comparably to it, particularly in zero- and few-shot learning.

Who are the authors of the OPT paper?

The OPT paper was written by Susan Zhang, Stephen Roller, Naman Goyal, Mikel Artetxe, and many other researchers who contributed to the development of the OPT models.

How do OPT models compare to GPT-3 for environmental impact?

One motivation behind developing the OPT models was to build large language models with less environmental impact. The OPT-175B model was developed with roughly one-seventh of the carbon footprint of GPT-3.