What is Megatron-LM?

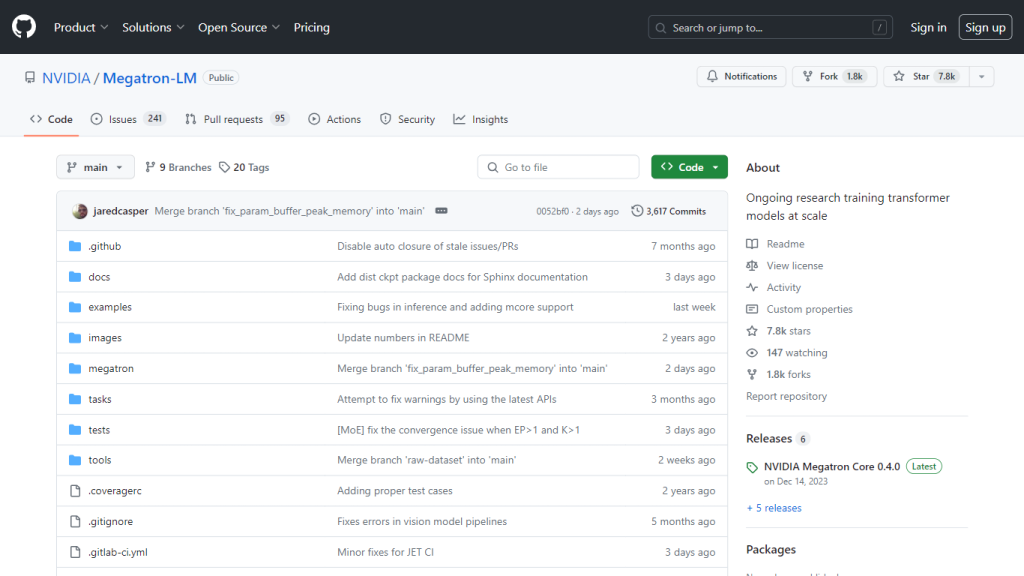

Megatron-LM is a large, powerful transformer developed by the Applied Deep Learning Research team at NVIDIA, together with the open-source code used to train it. The project focuses on the efficient training of large language models and is widely recognized for its model-parallel, multi-node pre-training capabilities, which use mixed precision for better performance. The Megatron-LM GitHub repository is open to developers and researchers who want to collaborate on training state-of-the-art language models.

Key Features & Benefits of Megatron-LM

Large-Scale Training: Trains very large transformer models such as GPT, BERT, and T5.

Model Parallelism: Supports state-of-the-art model-parallel techniques, including tensor parallelism, sequence parallelism, and pipeline parallelism.

Mixed Precision: Uses mixed-precision arithmetic to make better use of compute and memory, which shortens overall training time.
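One reason mixed precision needs care is that very small gradients can vanish in half precision. The sketch below illustrates loss scaling, a common companion technique in mixed-precision training, using only Python's `struct` module to simulate fp16 rounding. The helper names and the scale factor of 1024 are assumptions chosen for illustration; this is not Megatron-LM's implementation.

```python
import struct

def to_fp16(value):
    """Round a Python float to IEEE half precision (fp16) and back."""
    return struct.unpack("e", struct.pack("e", value))[0]

def scaled_fp16_gradient(gradient, loss_scale):
    """Store a gradient in fp16 after scaling, then unscale it in full precision."""
    stored = to_fp16(gradient * loss_scale)  # what the fp16 buffer would hold
    return stored / loss_scale               # unscaling happens in higher precision

tiny_gradient = 1e-8     # smaller than anything fp16 can represent
loss_scale = 1024.0      # hypothetical scale factor (a power of two)

without_scaling = to_fp16(tiny_gradient)                     # underflows to 0.0
with_scaling = scaled_fp16_gradient(tiny_gradient, loss_scale)

print("fp16 without scaling:", without_scaling)
print("fp16 with scaling:   ", with_scaling)
```

Without scaling the gradient is lost entirely; with scaling it is recovered to within about one percent of its true value.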

Versatility: Delivers high performance on projects ranging from biomedical language models to large-scale generative dialog modeling.

Scaling Studies Benchmark: Reports performance scaling results for models of up to 1 trillion parameters, measured on NVIDIA's Selene supercomputer with A100 GPUs.
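A rough back-of-the-envelope estimate shows why a model of this size cannot be trained on a single GPU. The sketch below assumes about 16 bytes of training state per parameter (a common rule of thumb for mixed-precision training with the Adam optimizer: fp16 weights and gradients, plus fp32 master weights and two optimizer moments) and an 80 GB A100. Both numbers are assumptions for illustration, not figures from the Megatron-LM scaling studies, and activation memory is ignored.

```python
def training_state_bytes(num_params, bytes_per_param=16):
    """Estimate memory for weights, gradients, and optimizer state.

    bytes_per_param=16 is an assumed rule of thumb, not a measured value.
    """
    return num_params * bytes_per_param

def min_gpus_for_state(num_params, gpu_memory_gb=80, bytes_per_param=16):
    """Smallest number of GPUs whose combined memory holds the training state."""
    total_bytes = training_state_bytes(num_params, bytes_per_param)
    gpu_bytes = gpu_memory_gb * 10**9
    return -(-total_bytes // gpu_bytes)  # ceiling division on integers

one_trillion = 10**12
print(training_state_bytes(one_trillion) / 10**12, "TB of training state")
print(min_gpus_for_state(one_trillion), "GPUs needed just to hold that state")
```

Under these assumptions the state alone is about 16 TB, so the model has to be split across hundreds of GPUs before any computation happens. That is the gap model parallelism is meant to close.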

Taken together, these features give Megatron-LM strong scalability, efficient use of hardware, and a wide range of applications, which makes it a valuable tool for AI researchers and developers.

Use Cases and Applications of Megatron-LM

Megatron-LM has been applied across several domains. It has been used to develop biomedical language models that help interpret medical literature, with the aim of improving health outcomes. In natural language processing, it has been applied to both dialog modeling and question answering. These applications show that Megatron-LM can handle diverse and complex tasks.

How to Use Megatron-LM

To get up and running with Megatron-LM:

- Clone the Megatron-LM GitHub repository.

- Install the dependencies, following the repository documentation.

- Set the training parameters to suit your needs.

- Run the training scripts provided in the repository to start model training.

Monitor resource utilization during training and adjust parameters between training stages as needed. The interface is mainly command-line, which allows flexible and detailed configuration for different project needs.
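When setting parameters, it helps to check that the parallelism settings are consistent with the number of GPUs before launching a job. The sketch below encodes the usual relationship: the total GPU count factors into tensor-parallel, pipeline-parallel, and data-parallel sizes, and the global batch is split into micro-batches. The class and function names are hypothetical helpers written for this article, not part of the Megatron-LM code or command line.

```python
from dataclasses import dataclass

@dataclass
class ParallelPlan:
    """Hypothetical description of how GPUs are divided among parallelism types."""
    world_size: int          # total number of GPUs
    tensor_parallel: int     # GPUs sharing each layer's weights
    pipeline_parallel: int   # number of pipeline stages

    @property
    def data_parallel(self):
        """Number of full model replicas that fit in the remaining GPUs."""
        model_parallel = self.tensor_parallel * self.pipeline_parallel
        if self.world_size % model_parallel != 0:
            raise ValueError("world_size must be a multiple of tensor * pipeline size")
        return self.world_size // model_parallel

def gradient_accumulation_steps(plan, global_batch, micro_batch):
    """Micro-batches each replica processes per optimizer step."""
    samples_per_pass = micro_batch * plan.data_parallel
    if global_batch % samples_per_pass != 0:
        raise ValueError("global_batch must be a multiple of micro_batch * data_parallel")
    return global_batch // samples_per_pass

plan = ParallelPlan(world_size=64, tensor_parallel=8, pipeline_parallel=4)
print("data-parallel replicas:", plan.data_parallel)
print("accumulation steps:", gradient_accumulation_steps(plan, global_batch=512, micro_batch=4))
```

A check like this catches configuration mistakes cheaply, before any GPU time is spent.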

How Megatron-LM Works

Megatron-LM is built on high-performance transformer models. It uses tensor parallelism, sequence parallelism, and pipeline parallelism, which are forms of model parallelism that split a large model across many GPUs. Mixed precision further improves performance by balancing numerical accuracy against resource efficiency. A typical workflow consists of preprocessing the data, setting the model parameters, and then training with the chosen parallelism techniques.
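To make the idea of splitting a model across GPUs concrete, here is a toy sketch of tensor parallelism for a single linear layer, written in plain Python. Each simulated "GPU" holds one block of the weight matrix's columns, computes its share of the output independently, and the pieces are then concatenated. The function names are invented for this illustration; real implementations run on actual devices and use collective communication instead of list concatenation.

```python
def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def split_columns(w, parts):
    """Split matrix w into `parts` equal column blocks, one per simulated GPU."""
    cols = len(w[0])
    if cols % parts != 0:
        raise ValueError("columns must divide evenly across GPUs")
    step = cols // parts
    return [[row[i * step:(i + 1) * step] for row in w] for i in range(parts)]

def column_parallel_linear(x, w, parts):
    """Compute x @ w with each simulated GPU owning one column block of w."""
    shards = split_columns(w, parts)
    partial_outputs = [matmul(x, shard) for shard in shards]  # independent per GPU
    # Join the per-GPU outputs along the column axis (an all-gather in practice).
    return [sum((p[r] for p in partial_outputs), []) for r in range(len(x))]

x = [[1, 2], [3, 4]]               # batch of 2 inputs, hidden size 2
w = [[1, 0, 2, 1], [0, 1, 1, 3]]   # 2 x 4 weight matrix

full = matmul(x, w)
sharded = column_parallel_linear(x, w, parts=2)
print(full == sharded)  # the sharded result matches the single-device result
```

No simulated GPU ever holds the full weight matrix, yet the result is identical. This is what lets a layer too large for one device be computed by several.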

Megatron-LM: Pros and Cons

Some strengths and possible weaknesses of Megatron-LM follow:

Pros

- Highly scalable: scaling studies cover models of up to 1 trillion parameters.

- Resource-efficient: supports mixed precision natively.

- Versatile: can be used in a range of AI/ML applications.

- Open source: the public repository encourages collaboration and innovation.

Cons

- Requires enormous computational resources, so smaller projects and teams without access to high-end hardware may be better served by other tools.

- Setup and configuration are complex, so novices face a steep learning curve.

Overall, user feedback points to the powerful capabilities and scalability of Megatron-LM, though some users cite the need for substantial computing power as its main drawback.

Conclusion on Megatron-LM

In brief, Megatron-LM is an important project in AI and machine learning: its ability to train large transformer models efficiently makes it applicable across many fields. Its main limitation is the need for access to substantial computing power, but the potential benefits are considerable. With ongoing updates and further development, it is likely to remain a leading tool in AI research.

Megatron-LM FAQs

What is Megatron-LM?

Megatron-LM is a large, powerful transformer developed by NVIDIA for training big language models at scale.

What does the Megatron-LM repository contain?

It contains projects such as benchmarks, language model training at many different scales, and demonstrations of model and hardware FLOPs utilization.

How does Megatron-LM achieve model parallelism?

Megatron-LM supports tensor, sequence, and pipeline parallelism to achieve model parallelism.
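Of the three, pipeline parallelism is the easiest to picture: consecutive layers are assigned to different GPUs (stages), and each batch is cut into micro-batches so that the stages can work at the same time. The sketch below assigns layers to stages and estimates the idle "bubble" fraction for a simple schedule that runs all forward passes and then all backward passes, assuming every stage takes equal time. The function names are hypothetical, and real schedules may reduce the bubble further.

```python
def assign_layers_to_stages(num_layers, num_stages):
    """Give each pipeline stage an equal, contiguous block of layer indices."""
    if num_layers % num_stages != 0:
        raise ValueError("num_layers must divide evenly across stages")
    per_stage = num_layers // num_stages
    return [list(range(s * per_stage, (s + 1) * per_stage)) for s in range(num_stages)]

def pipeline_bubble_fraction(num_stages, num_microbatches):
    """Fraction of time a stage sits idle in a simple fill-and-drain schedule.

    Assumes equal time per stage and per micro-batch.
    """
    return (num_stages - 1) / (num_microbatches + num_stages - 1)

print(assign_layers_to_stages(num_layers=8, num_stages=4))
for microbatches in (1, 4, 12):
    idle = pipeline_bubble_fraction(num_stages=4, num_microbatches=microbatches)
    print(f"{microbatches} micro-batches: {idle:.0%} idle")
```

With a single micro-batch, three of the four stages are idle at any moment. Adding micro-batches keeps more stages busy, which is why the micro-batch count matters when tuning a pipeline-parallel run.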

What are the use cases of Megatron-LM?

Applications include training large transformer models for dialog modeling and question answering, among others.

Which computational resources were used in the Megatron-LM scaling studies?

The model scaling studies used NVIDIA's Selene supercomputer and its A100 GPUs.