What is LLaVA?

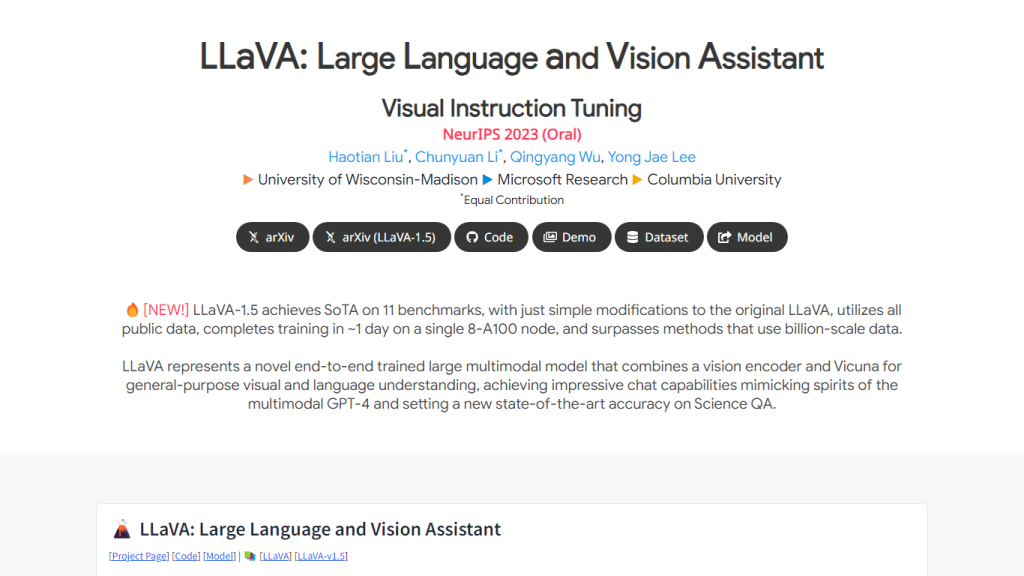

LLaVA (Large Language and Vision Assistant) is a large multimodal model that connects a vision encoder to a large language model and is trained with visual instruction tuning, so that it can follow instructions about images. The work was presented at NeurIPS 2023 and was developed by researchers from the University of Wisconsin-Madison, Microsoft Research, and Columbia University.

LLaVA’s Key Features & Benefits

- Collaborative Effort: LLaVA is a joint project by Haotian Liu, Chunyuan Li, Qingyang Wu, and Yong Jae Lee. The paper marks Haotian Liu and Chunyuan Li as equal contributors.

- LLaVA 1.5 Updated Version: LLaVA 1.5 is an updated release that improves on the original model. Check the project page for any newer releases before you start.

- Comprehensive Resources: The project publishes its arXiv preprint, source code, a live demo, datasets, and model weights, so researchers and practitioners can reproduce the results and build on them.

- NeurIPS Oral Presentation: LLaVA was presented as an oral at the NeurIPS 2023 conference, which reflects the attention the work received in the AI research community.

- Visual Instruction Tuning: The model is fine-tuned on instruction-following data grounded in images, which teaches the language model to answer questions and carry out instructions about visual content.

LLaVA’s Use Cases and Applications

LLaVA can be used for tasks that combine images and text, such as visual question answering, image description, and conversational assistance about a picture. Industries that could benefit include healthcare, where it could support work with diagnostic images, and retail, where it could improve visual search and recommendation systems. It can also be used in education to build more interactive and visually engaging learning tools.

How to Use LLaVA

To get started with LLaVA, follow these steps:

- Access the LLaVA resources including the source code, demo, and datasets.

- Download and set up the model according to the provided documentation.

- Familiarize yourself with the visual instruction tuning process to optimize the AI’s performance.

- Integrate LLaVA into your projects and customize it to suit your specific needs.

As a best practice, work with a current release (this article covers LLaVA 1.5) and follow the project's community channels to share insights and pick up improvements.
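The steps above can be sketched in Python. This is a minimal sketch under stated assumptions, not the project's official workflow: it assumes the Hugging Face `transformers` conversion of LLaVA 1.5 (the `llava-hf/llava-1.5-7b-hf` checkpoint id and the `USER: <image>\n... ASSISTANT:` prompt template are taken from that ecosystem, not from this article), and the helper names `build_prompt` and `run_llava` are hypothetical. Check the model card and the project documentation before relying on any of it.

```python
def build_prompt(question: str) -> str:
    """Format a single-turn prompt.

    Assumption: the "USER: <image>\\n... ASSISTANT:" template used with the
    Hugging Face conversion of LLaVA 1.5; other checkpoints may differ.
    """
    return f"USER: <image>\n{question.strip()} ASSISTANT:"


def run_llava(image_path: str, question: str,
              model_id: str = "llava-hf/llava-1.5-7b-hf",
              max_new_tokens: int = 100) -> str:
    """Hypothetical helper: answer a question about one image.

    Heavy dependencies are imported lazily so that build_prompt can be
    used without them. Calling this downloads several GB of weights.
    """
    from PIL import Image
    from transformers import AutoProcessor, LlavaForConditionalGeneration

    processor = AutoProcessor.from_pretrained(model_id)
    model = LlavaForConditionalGeneration.from_pretrained(model_id)

    inputs = processor(images=Image.open(image_path),
                       text=build_prompt(question),
                       return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return processor.decode(output_ids[0], skip_special_tokens=True)


# Building the prompt needs no model or GPU.
prompt = build_prompt("What is shown in this image?")
print(prompt)
```

Calling `run_llava("photo.jpg", "What is shown in this image?")` would load the model and generate an answer. On machines with limited memory, a quantized or smaller checkpoint is usually the practical choice.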

How LLaVA Works

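LLaVA connects a pre-trained vision encoder (CLIP ViT-L/14) to a large language model (Vicuna) through a projection layer that maps image features into the language model's embedding space. In the original release the projection is a single linear layer, and LLaVA 1.5 replaces it with a small MLP. Training has two stages: first the projection alone is trained on image-caption pairs to align the two feature spaces, then the projection and the language model are fine-tuned together on multimodal instruction-following data while the vision encoder stays frozen. This second stage is the visual instruction tuning that lets the model interpret and respond to visual inputs.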
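A toy illustration of the projection idea from the LLaVA paper, in which vision-encoder features are mapped into the language model's embedding space and combined with the text token embeddings. This is a sketch in pure Python, not the project's implementation: the dimensions are tiny placeholders, the weights are random, and the function names are hypothetical. Placing the visual tokens before the text is a simplification.

```python
import random


def make_matrix(rows, cols, rng):
    """Random matrix standing in for learned weights or encoder outputs."""
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def project(features, weight):
    """Map each feature vector (length d_in) through weight (d_in x d_out)."""
    d_out = len(weight[0])
    return [
        [sum(vec[i] * weight[i][j] for i in range(len(vec))) for j in range(d_out)]
        for vec in features
    ]


def build_input_sequence(image_features, text_embeddings, weight):
    """Project image patches into the text space, then join both sequences."""
    visual_tokens = project(image_features, weight)
    return visual_tokens + text_embeddings


rng = random.Random(0)
VISION_DIM, TEXT_DIM = 8, 4          # toy sizes; real models use far larger ones
NUM_PATCHES, NUM_TEXT_TOKENS = 6, 3

image_features = make_matrix(NUM_PATCHES, VISION_DIM, rng)      # vision encoder output
text_embeddings = make_matrix(NUM_TEXT_TOKENS, TEXT_DIM, rng)   # embedded instruction
projector = make_matrix(VISION_DIM, TEXT_DIM, rng)              # trainable projection

sequence = build_input_sequence(image_features, text_embeddings, projector)
print(len(sequence), len(sequence[0]))  # 9 4
```

After projection, every element of the sequence has the same width, so the language model can attend over image patches and words in one pass. That is why only the projection needs training in the alignment stage.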

LLaVA Pros and Cons

Advantages of using LLaVA include:

- High performance in both language and visual tasks.

- Comprehensive resources and support for users.

- Recognition and validation by the AI community through its presentation at NeurIPS 2023.

Potential drawbacks include:

- The need for substantial computational resources to run effectively.

- Potential learning curve for new users unfamiliar with visual instruction tuning.

User feedback has been largely positive, with many praising its capabilities and the extensive resources provided.

LLaVA Pricing

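LLaVA is an open-source research project, not a commercial product: the source code, datasets, and model weights are publicly released at no charge, and the project itself has no paid plans. The practical cost is the hardware or cloud compute needed to run or fine-tune the model. Check the license terms attached to the code, weights, and datasets before using them, especially for commercial work.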

Conclusion about LLaVA

In summary, LLaVA represents a significant advancement in the integration of language and visual AI. Its comprehensive features, collaborative development, and recognition within the AI community make it a valuable tool for researchers and practitioners. Looking ahead, continuous updates and community contributions will likely further enhance its capabilities and applications.

LLaVA FAQs

Q: What is visual instruction tuning?

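A: Visual instruction tuning means fine-tuning a language model on instruction-following examples that are grounded in images. Each example pairs an image with an instruction or question about it and the desired response, so the model learns to follow instructions about visual inputs. In the LLaVA paper, this training data was generated with the help of language-only GPT-4.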
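As a rough illustration, one training example for visual instruction tuning can be represented as a small record. The sketch below assumes the conversation-style JSON layout used by the publicly released LLaVA instruction data (an `image` path plus alternating `human` and `gpt` turns, with an `<image>` placeholder in the first human turn). The sample content and helper names are made up for illustration.

```python
import json

IMAGE_TOKEN = "<image>"


def make_sample(sample_id, image_file, turns):
    """Build one record from (question, answer) pairs about a single image."""
    conversations = []
    for index, (question, answer) in enumerate(turns):
        # Only the first human turn carries the image placeholder.
        prefix = IMAGE_TOKEN + "\n" if index == 0 else ""
        conversations.append({"from": "human", "value": prefix + question})
        conversations.append({"from": "gpt", "value": answer})
    return {"id": sample_id, "image": image_file, "conversations": conversations}


def supervised_targets(sample):
    """Return the assistant turns, the text the model is trained to produce."""
    return [turn["value"] for turn in sample["conversations"] if turn["from"] == "gpt"]


# Invented example content, for illustration only.
sample = make_sample("demo-0001", "demo/kitchen.jpg", [
    ("What is on the counter?", "A kettle and two mugs."),
    ("Is the kettle switched on?", "Its indicator light is off, so probably not."),
])
print(json.dumps(sample, indent=2))
```

During fine-tuning, the image and the human turns serve as context, and the model is trained to predict the assistant turns returned by `supervised_targets`.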

Q: How can I access LLaVA resources?

A: The source code, demo, datasets, and model weights are linked from the project's website and its code repository. The paper itself is available as a preprint on arXiv.

Q: Is there a community for LLaVA users?

A: Yes, there is an active community of researchers and practitioners who contribute to and support the ongoing development of LLaVA.