What is the GPT-2 Output Detector?

GPT-2 Output Detector is an online tool for estimating whether a piece of text is real (written by a person) or fake (generated by a language model). It uses a RoBERTa model to make that prediction. You enter text in the text box, and the tool returns a probability. Results are most reliable on text of about 50 tokens or more.

GPT-2 Output Detector: Key Features & Benefits

Text Input: The input text can be anything you want to analyze.

Text Classification: Classifies the text as either real or fake.

Probability Prediction: Returns a probability score indicating how likely the text is to be real.

Fake Detection: Flags text that is most probably fake.

Real Detection: Flags text that is most probably real.

Together, these features support better text verification, better fake news detection, and more robust validation of language model output. What distinguishes the GPT-2 Output Detector is that it is accurate, user-friendly, and reliable on text of about 50 tokens or more.

Use Cases and Applications of the GPT-2 Output Detector

The GPT-2 Output Detector applies to a wide range of cases:

- Detect Fake News: Check the authenticity of news articles and reports.

- Verification of Language Models: Confirm whether a given text was produced by a language model.

- Text Authenticity Checker: Check the authenticity of any input text.

The tool is useful in media, research, education, and data science. A data scientist could use it to verify datasets, a researcher to verify study materials, and a journalist to check the authenticity of text used in a report.

How to Use GPT-2 Output Detector

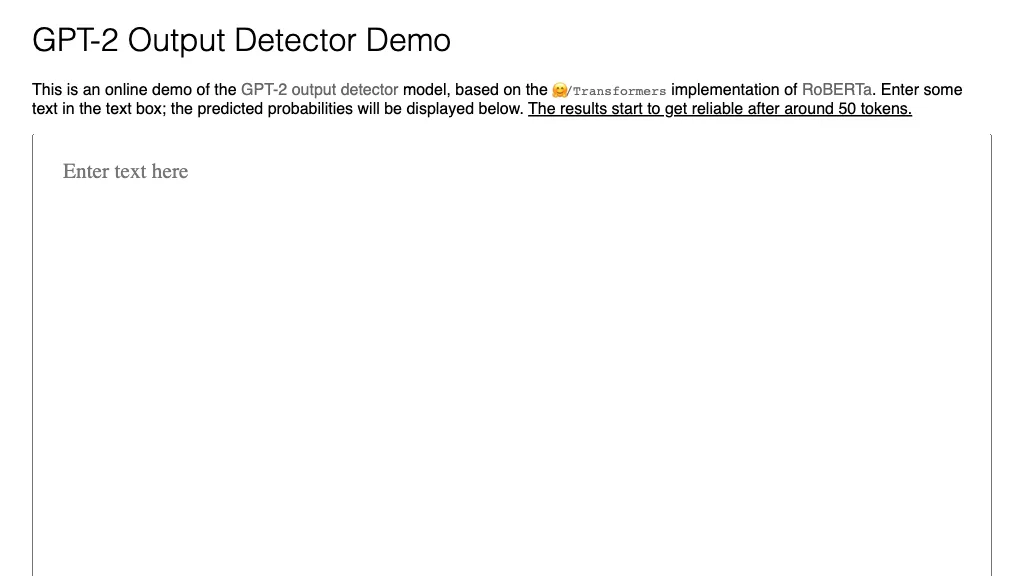

The use of the GPT-2 Output Detector is relatively easy, as summarized in the steps below:

- Go to the GPT-2 Output Detector website.

- Enter the text you want to analyze in the text box.

- Click the analyze button to get the probability result.

The tool works best on text of about 50 tokens or more. The interface is simple, and a result takes only a few clicks. As a best practice, submit text that is clear and free of errors so that it can be analyzed more accurately.
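A rough length pre-check can be sketched in Python. This is only an illustration: `rough_token_count` and `long_enough` are hypothetical helper names, the whitespace split is a stand-in for the detector's real subword tokenizer (which will usually report more tokens than a plain word count), and the 50-token figure is assumed here to be a minimum length.

```python
MIN_TOKENS = 50  # figure quoted in the text; assumed here to be a lower bound


def rough_token_count(text: str) -> int:
    """Approximate the token count by splitting on whitespace.

    Assumption: the real detector uses a subword tokenizer, so its count
    will usually be higher than this simple word count.
    """
    return len(text.split())


def long_enough(text: str, min_tokens: int = MIN_TOKENS) -> bool:
    """Return True when the rough count reaches the assumed threshold."""
    return rough_token_count(text) >= min_tokens


if __name__ == "__main__":
    sample = "This sentence is far too short to judge."
    print(rough_token_count(sample), long_enough(sample))
```

If the check fails, adding more of the surrounding passage before submitting it is likely to give a more trustworthy score.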

How GPT-2 Output Detector Works

The GPT-2 Output Detector is built on RoBERTa, a robustly optimized variant of the BERT transformer model that is designed to process natural language. The text you enter is passed through the model, which returns a probability score indicating whether the text is real or fake. To reach that score, the model analyzes language patterns, context, and other linguistic features to judge the likely origin of the input text.
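The last step, turning the model's raw output into a real/fake probability, can be illustrated with a small Python sketch. It assumes the classifier emits two raw scores (logits) and that they are ordered fake, then real. Both the label order and the names `softmax` and `to_prediction` are assumptions made for illustration, not the tool's actual code.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def to_prediction(
    logits: Sequence[float],
    labels: Tuple[str, str] = ("fake", "real"),  # assumed label order
) -> Dict[str, float]:
    """Pair each label with its probability."""
    return dict(zip(labels, softmax(logits)))


# Hypothetical logits standing in for a model's output on one input text.
scores = to_prediction([0.3, 2.1])
verdict = max(scores, key=scores.get)  # label with the highest probability

if __name__ == "__main__":
    print(scores, verdict)
```

Reporting the full probability rather than only the winning label matches how the tool presents its result, and it leaves the decision threshold to the reader.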

GPT-2 Output Detector: Pros and Cons

Like any other tool, the GPT-2 Output Detector has both strengths and potential weaknesses. They are outlined below.

Advantages

- High Accuracy: Offers dependable results, especially on text of about 50 tokens or more.

- Ease of Use: The user interface is friendly, thus making it easy to use for text checking.

- Wide Range of Application: Applications extend from fake news detection to academic research.

Possible Drawbacks

- Token Limitations: Results are reliable only from about 50 tokens, which limits the tool's usefulness on very short text.

- Model Dependency: The output depends on the accuracy of the underlying RoBERTa model and on how up to date it is.

User reviews often mention that it works well and is user-friendly. Only a few cite the token limitation, and then as a minor issue.

GPT-2 Output Detector FAQs

What is GPT-2 Output Detector?

It is an online tool that predicts whether a text is real or fake, based on a RoBERTa model.

How does the GPT-2 Output Detector work?

It passes the input text through the RoBERTa model, which assigns a probability score indicating whether the text is real or fake.

What text length works best with the GPT-2 Output Detector?

It works best on text of about 50 tokens or more.

Who is the GPT-2 Output Detector useful for?

The tool is useful mainly to data scientists, researchers, and journalists.

Are there any limitations to the GPT-2 Output Detector?

Yes. It works best on text of about 50 tokens or more, and it relies on the accuracy of the underlying RoBERTa model.