What is google-research/bert?

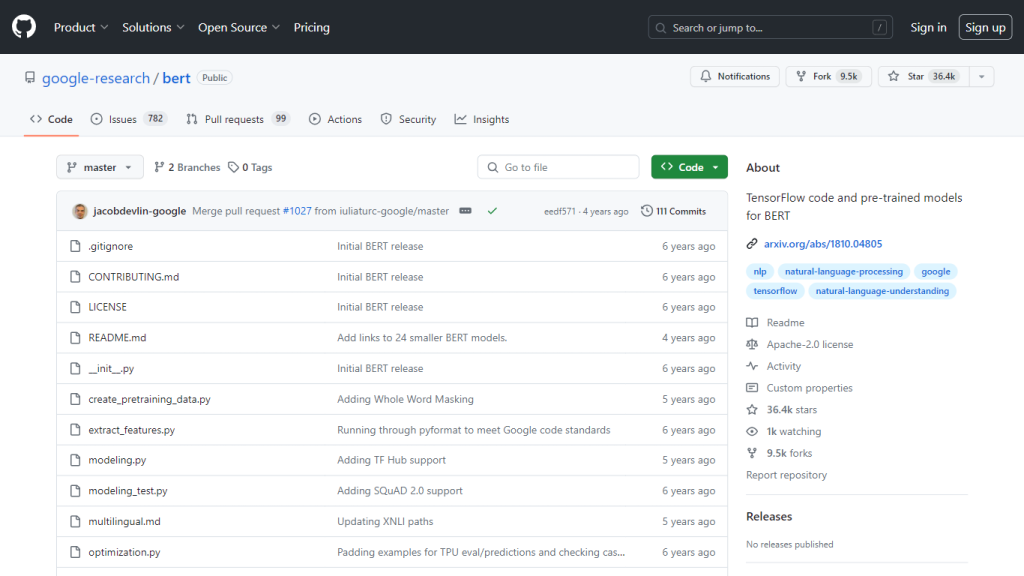

The google-research/bert repository on GitHub is one of the most important resources for anyone interested in applying BERT (Bidirectional Encoder Representations from Transformers). The model was developed by Google researchers and brought a major advance to NLP research by enabling machines to understand human language more accurately than earlier approaches.

This repository includes the TensorFlow code along with several pre-trained BERT models, which make it easier to build NLP systems that interpret text accurately. Applications of BERT range from sentiment analysis and question answering to language inference. It has become one of the most powerful tools available to developers and researchers.

Key Features & Benefits

- TensorFlow Implementation: Complete TensorFlow code for the model is included in the repository.

- Range of Model Sizes: It includes 24 smaller BERT models suited to environments with limited computational resources, offering flexible deployment options.

- Pre-trained Models: A set of pre-trained BERT models is available; these can be fine-tuned to meet the requirements of specific NLP tasks.

- Extensive Documentation: Files such as README.md and CONTRIBUTING.md give in-depth instructions on how to work with the repository effectively.

- Open-source Contribution: Developers are encouraged to contribute to BERT’s further development, fostering a more active community.

The pre-trained models available in this repository can save substantial time and computational resources on NLP projects, which makes the repository useful for both academic and commercial purposes.

Applications and Use Cases of google-research/bert

The google-research/bert repository can be applied in areas such as the following:

- Sentiment Analysis: Improving a system’s ability to identify the emotions and opinions expressed in text.

- Question Answering: Improving the ability of machines to understand and answer questions based on the content of a passage.

- Language Inference: Improving the ability of systems to predict the relationship between sentences.

These applications can serve industries such as customer service, healthcare, finance, and e-commerce. For instance, customer-service chatbots can respond more articulately, and sentiment analysis tools can gauge opinion expressed on social media.
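The tasks listed above differ mainly in how text is packed into the model's input: sentiment analysis feeds a single sentence, while question answering and language inference feed a pair of texts. The following is a minimal sketch of that packing, not the repository's own code. The function name `pack_inputs` is hypothetical, and the tokens are assumed to be pre-split words rather than real WordPiece output.

```python
from typing import List, Optional, Tuple


def pack_inputs(tokens_a: List[str],
                tokens_b: Optional[List[str]] = None) -> Tuple[List[str], List[int]]:
    """Pack one or two token lists into a BERT-style input sequence.

    Returns the token sequence and the matching segment ids
    (0 for the first segment, 1 for the second).
    """
    # Every input starts with [CLS] and each segment ends with [SEP].
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    if tokens_b is not None:
        tokens += tokens_b + ["[SEP]"]
        segment_ids += [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


# Sentiment analysis: a single sentence.
single = pack_inputs(["great", "movie"])

# Question answering or language inference: a pair of texts.
pair = pack_inputs(["who", "wrote", "it"], ["alice", "wrote", "it"])

print(single)
print(pair)
```

The segment ids let the model tell the two texts apart, which is what allows one architecture to handle both single-sentence and sentence-pair tasks.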

How to Use google-research/bert

- Clone the repository from GitHub via `git clone https://github.com/google-research/bert`.

- Download the pre-trained models using the links in the README file.

- Set up your environment by installing the dependencies listed in the repository.

- Fine-tune the pre-trained models for your specific NLP tasks using the TensorFlow code provided.

- Refer to the documentation for detailed instructions and best practices.

By following these steps, you can get the most out of the repository and apply BERT’s advanced capabilities in your projects.
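As a rough illustration of the fine-tuning step, the sketch below assembles a command line for the repository's `run_classifier.py` script, using flag names and values from the README's sentence-pair classification example. The helper `build_finetune_command` is hypothetical, and every path is a placeholder that must be replaced with the location of your downloaded model and data.

```python
import shlex
from typing import List


def build_finetune_command(bert_dir: str, data_dir: str, output_dir: str,
                           task_name: str = "MRPC") -> List[str]:
    """Assemble an argument list for run_classifier.py (hypothetical helper)."""
    return [
        "python", "run_classifier.py",
        f"--task_name={task_name}",
        "--do_train=true",
        "--do_eval=true",
        f"--data_dir={data_dir}",
        # These three files ship inside each pre-trained model archive.
        f"--vocab_file={bert_dir}/vocab.txt",
        f"--bert_config_file={bert_dir}/bert_config.json",
        f"--init_checkpoint={bert_dir}/bert_model.ckpt",
        "--max_seq_length=128",
        "--train_batch_size=32",
        "--learning_rate=2e-5",
        "--num_train_epochs=3.0",
        f"--output_dir={output_dir}",
    ]


# Placeholder paths: substitute your own directories.
cmd = build_finetune_command(
    bert_dir="/path/to/uncased_L-12_H-768_A-12",
    data_dir="/path/to/glue/MRPC",
    output_dir="/tmp/mrpc_output",
)
print(" ".join(shlex.quote(part) for part in cmd))

# To launch it from inside the cloned repository, one option is:
#   import subprocess; subprocess.run(cmd, check=True)
```

Building the command as a list keeps each flag separate, so paths containing spaces are passed through safely if the list is handed to `subprocess.run`.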

How google-research/bert Works

BERT is based on the transformer architecture and relies on self-attention mechanisms that analyze each token in the context of the whole text, in both directions at once. Older models typically read text in a single direction, left to right or right to left, or combined two separate one-directional passes. BERT instead conditions on left and right context jointly in every layer, which gives it a better-informed understanding of each word.
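To make the bidirectional idea concrete, here is a heavily simplified sketch of scaled dot-product self-attention in plain Python. It is not the repository's implementation: it assumes the raw input vectors serve as both queries and keys, omits the learned projections and multiple heads of a real transformer layer, and uses made-up toy vectors. The point it shows is that, with no directional mask, each token assigns weight to tokens on both sides of it.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention_weights(vectors: List[Vector]) -> List[Vector]:
    """Attention weights for every token over every token, with no mask.

    Simplification: queries and keys are the input vectors themselves.
    """
    scale = math.sqrt(len(vectors[0]))
    weights = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale
                  for key in vectors]
        weights.append(softmax(scores))
    return weights


# Three toy 2-dimensional token vectors (made-up values).
toy_tokens = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
attention = self_attention_weights(toy_tokens)

# The middle token attends to its left and right neighbours alike.
middle = attention[1]
print(middle)
```

A left-to-right model would zero out the weights on later tokens; leaving them in place is what lets every position draw on its full context.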

The model is used in two major phases: pre-training and fine-tuning. During pre-training, the model learns from an enormous corpus of unlabeled text; fine-tuning then adapts the model to a specific task using a much smaller labeled dataset.

Workflow:

- Tokenization: Text is divided into tokens.

- Embedding: Tokens are mapped to numerical vector representations.

- Transformer Layers: The embeddings pass through a stack of transformer layers that capture context.

- Output: The model makes predictions based on the processed representations.
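The tokenization step of the workflow above can be sketched as follows. BERT splits words into sub-word pieces with a greedy longest-match-first search against a fixed vocabulary. This is an illustrative re-implementation, not the repository's tokenizer: the function name `wordpiece` and the five-entry vocabulary are assumptions made for the example, whereas a real vocabulary comes from the `vocab.txt` file shipped with each pre-trained model.

```python
from typing import List, Set


def wordpiece(word: str, vocab: Set[str], unk_token: str = "[UNK]") -> List[str]:
    """Split one word into sub-word pieces by greedy longest-match-first."""
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        # Shrink the candidate substring until it appears in the vocabulary.
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate  # marks a word-internal piece
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]  # nothing matched: treat the word as unknown
        pieces.append(match)
        start = end
    return pieces


# Toy vocabulary, for illustration only.
toy_vocab = {"un", "##aff", "##able", "play", "##ing"}

print(wordpiece("unaffable", toy_vocab))  # ['un', '##aff', '##able']
print(wordpiece("playing", toy_vocab))    # ['play', '##ing']
print(wordpiece("xyz", toy_vocab))        # ['[UNK]']
```

Splitting rare words into known pieces keeps the vocabulary small while still giving the embedding step something meaningful to look up for words it has never seen whole.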

Pros and Cons of google-research/bert

Pros:

- State-of-the-art accuracy in many NLP tasks.

- Pre-trained models save time and resources.

- Open source, so the community can contribute.

Cons:

- Computational needs go up with bigger models.

- Steep learning curve.

User feedback generally highlights the model’s strong grasp of context and the improvements it brings to NLP tasks, while noting that it requires considerable computational power, especially for demanding applications.
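The link between model size and computational cost can be made concrete with a rough weight count. The sketch below is a back-of-the-envelope estimate, not something taken from the repository. It assumes the standard encoder layout (a feed-forward width of four times the hidden size, plus a pooler layer), a vocabulary of 30,522 entries as in the uncased English models, 512 positions, and 2 segment types. The number of attention heads does not change the total, so it is not a parameter.

```python
def bert_parameter_count(num_layers: int, hidden_size: int,
                         vocab_size: int = 30522,
                         max_positions: int = 512,
                         type_vocab_size: int = 2) -> int:
    """Approximate number of weights in a BERT encoder plus pooler."""
    intermediate_size = 4 * hidden_size  # assumed feed-forward width
    layer_norm = 2 * hidden_size         # one scale and one bias vector

    # Token, position and segment embedding tables, then a layer norm.
    embeddings = ((vocab_size + max_positions + type_vocab_size) * hidden_size
                  + layer_norm)

    # Query, key, value and output projections, each with a bias.
    attention = 4 * (hidden_size * hidden_size + hidden_size) + layer_norm

    # Two dense layers: hidden -> intermediate -> hidden.
    feed_forward = (hidden_size * intermediate_size + intermediate_size
                    + intermediate_size * hidden_size + hidden_size
                    + layer_norm)

    pooler = hidden_size * hidden_size + hidden_size

    return embeddings + num_layers * (attention + feed_forward) + pooler


base = bert_parameter_count(num_layers=12, hidden_size=768)
large = bert_parameter_count(num_layers=24, hidden_size=1024)

print(f"BERT-Base:  {base:,}")   # BERT-Base:  109,482,240
print(f"BERT-Large: {large:,}")  # BERT-Large: 335,141,888
```

Under these assumptions BERT-Large carries roughly three times as many weights as BERT-Base, which is why the smaller models are attractive when memory or compute is limited.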

Conclusion about google-research/bert

The google-research/bert repository is a great resource for anyone who wants to enhance their NLP capabilities. With a comprehensive TensorFlow implementation, pre-trained models, and documentation, it gives a solid foundation for developing advanced language processing systems.

In the future, new developments will continue to broaden the capabilities of BERT, making it an increasingly powerful tool in the evolving world of NLP.

google-research/bert FAQs

What is BERT?

BERT is a method for pre-training language representations: a general-purpose model is first trained on a large text corpus, and the resulting pre-trained representations can then be fine-tuned for a wide variety of NLP tasks.

How can I download pre-trained BERT models?

Pre-trained models in the google-research/bert repository can be downloaded via links in the README on GitHub.

What types of BERT models are available in this repository?

The repository offers several variants of BERT: BERT-Base, BERT-Large, and 24 smaller models suited to systems with limited computational resources.

Is the source code of BERT open source? Can I contribute to it?

Yes, the source code and the models are open source under the Apache-2.0 license, and you are invited to contribute to their further development on GitHub.

For whom is the repository google-research/bert on GitHub intended?

It is intended mainly for developers and researchers who work on NLP projects and want to bring more advanced language understanding to them using BERT.