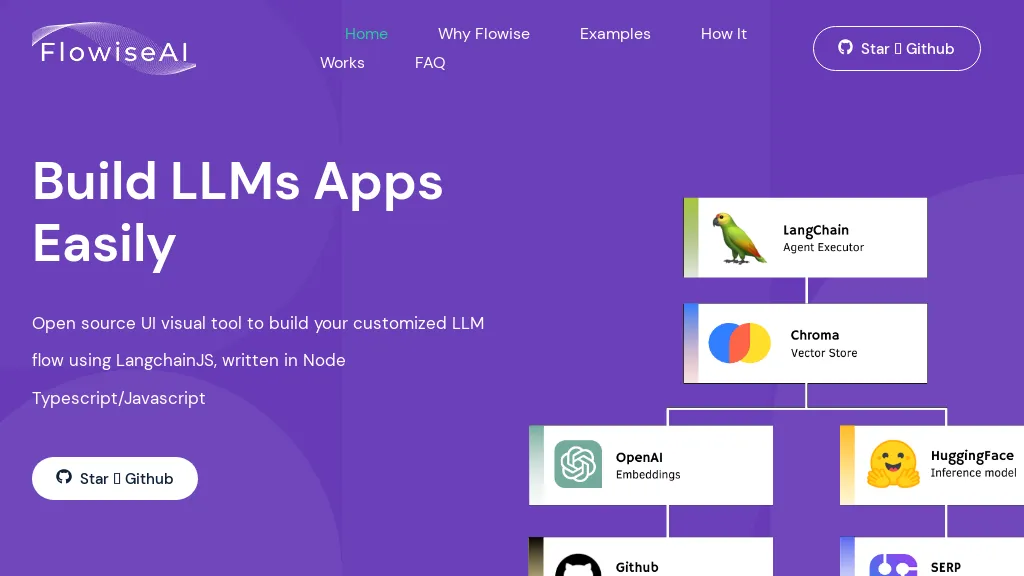

What is Flowise?

FlowiseAI is an open-source visual UI tool that helps users create their own custom large language model (LLM) flows quickly and easily with LangchainJS. The app is written in Node.js with TypeScript/JavaScript and is designed to make it easy for anyone to develop their own LLM applications. Its features include live visualization of LLM apps, integration of custom components, and a set of example chains, such as QnA retrieval, language translation, and conversational agents with memory, that users can examine and run. FlowiseAI is free for both commercial and personal use, and it supports Docker for easy deployment. To get started, install FlowiseAI with npm.

Key Features & Benefits of Flowise

Here are the key features and benefits of using FlowiseAI:

- Custom LLM Flows: Build your own custom LLM flows

- LLM App Development: Develop custom LLM apps simply and easily

- Live App Visualization: Watch your LLM apps run with live visualization

- Custom Component Integration: Integrate your own custom components

- LLM Chain Examples: Explore ready-made example LLM chains

With these features, developers can quickly create LLM applications tailored to their needs. The platform's most notable selling points are its open-source nature, its easy integration with LangchainJS, and its Docker support, which simplifies deployment.

Use Cases and Applications of Flowise

FlowiseAI is flexible and can be applied to applications such as:

- Building LLM applications for question-and-answer (QnA) retrieval

- Building LLM applications for language translation

- Building conversational agents with memory

Industries and sectors that can benefit from FlowiseAI include software development, AI development, business analytics, and data science. FlowiseAI serves a wide range of potential users, such as software developers, DevOps engineers, AI developers, business analysts, and data scientists.

How to Use Flowise

It is quite easy to get started with FlowiseAI. Here is a step-by-step process:

- Install FlowiseAI using npm.

- Run the FlowiseAI application to begin building your LLM flows.

- In the UI, add the components you need and configure them.

- Test your LLM app live within the tool.

- Deploy your app with Docker.

Before starting, it helps to familiarize yourself with LangchainJS and to work through the example LLM chains that FlowiseAI provides. The user-friendly UI makes it easy to navigate the tool and create apps.
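Once a chatflow has been built and is running, other applications can call it over HTTP. The following is a minimal TypeScript sketch, assuming a local Flowise instance on its default port 3000 and its prediction endpoint `/api/v1/prediction/<chatflowId>`, which accepts a JSON body with a `question` field. The chatflow ID is a placeholder you would copy from the Flowise UI, and the helper names `buildPredictionRequest` and `askChatflow` are hypothetical. Check the Flowise API documentation for your version before relying on these details.

```typescript
// Minimal sketch: calling a Flowise chatflow over HTTP.
// Assumptions: Flowise runs at baseUrl and exposes POST /api/v1/prediction/<chatflowId>
// taking a JSON body { question }. Helper names here are hypothetical.

interface PredictionRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Build the request without sending it, so it can be inspected or tested offline.
function buildPredictionRequest(
  baseUrl: string,
  chatflowId: string,
  question: string,
  apiKey?: string,
): PredictionRequest {
  const headers: Record<string, string> = { "Content-Type": "application/json" };
  if (apiKey) {
    // Only needed if the chatflow is protected by an API key.
    headers["Authorization"] = `Bearer ${apiKey}`;
  }
  // Strip trailing slashes from the base URL to avoid "//" in the path.
  const root = baseUrl.replace(/\/+$/, "");
  return {
    url: `${root}/api/v1/prediction/${encodeURIComponent(chatflowId)}`,
    init: { method: "POST", headers, body: JSON.stringify({ question }) },
  };
}

// Send the request with the global fetch (Node 18+) and return the parsed JSON.
async function askChatflow(
  baseUrl: string,
  chatflowId: string,
  question: string,
  apiKey?: string,
): Promise<unknown> {
  const { url, init } = buildPredictionRequest(baseUrl, chatflowId, question, apiKey);
  const res = await fetch(url, init);
  if (!res.ok) {
    throw new Error(`Flowise request failed: ${res.status} ${res.statusText}`);
  }
  return res.json();
}
```

For example, `askChatflow("http://localhost:3000", "<your-chatflow-id>", "What is Flowise?").then(console.log)` would print the chatflow's JSON response. Keeping request construction in a separate pure function makes the URL and body easy to check without a running server.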

How Flowise Works

FlowiseAI is built with Node.js in TypeScript/JavaScript, and it integrates LangchainJS to build and run LLM flows. Users can visualize their LLM applications in real time, which makes it easier to monitor them and adjust their configurations. Building a flow mainly involves selecting and configuring components, chaining them together, and running the application live.
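The select, configure, chain, and run steps can be illustrated with a small TypeScript sketch. The `Component` type and the `promptTemplate`, `fakeLLM`, `outputParser`, and `chain` helpers are hypothetical and are not Flowise's internal node API. `fakeLLM` stands in for a real model call, and everything is kept synchronous for brevity even though real model calls are asynchronous. The point is only that connecting nodes on the canvas amounts to feeding each component's output into the next.

```typescript
// Hypothetical sketch of chaining configured components, in the spirit of a
// Flowise flow. These types are illustrative, not Flowise's internal API.

// A component takes an input string and produces an output string.
type Component = (input: string) => string;

// Configured prompt-template component: replaces every "{input}" placeholder.
function promptTemplate(template: string): Component {
  return (input) => template.split("{input}").join(input);
}

// Stand-in for an LLM node; a real flow would call a model provider here.
function fakeLLM(prefix: string): Component {
  return (prompt) => `${prefix}${prompt}`;
}

// Simple post-processing component: trims surrounding whitespace.
function outputParser(): Component {
  return (text) => text.trim();
}

// Chain components so each one's output becomes the next one's input.
function chain(...components: Component[]): Component {
  return (input) => {
    let value = input;
    for (const component of components) {
      value = component(value);
    }
    return value;
  };
}
```

For instance, `chain(promptTemplate("Translate to French: {input}"), fakeLLM("[LLM] "), outputParser())("Hello")` returns `"[LLM] Translate to French: Hello"`. Swapping `fakeLLM` for a different component changes the flow without touching the other stages, which mirrors replacing a node on the canvas.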

Pros and Cons of Flowise

There are a number of benefits of using FlowiseAI:

- Ease of use with a visual interface

- Open-source and free for all users

- Supports Docker, which makes it easy to deploy

- Customizable through the integration of custom components

- Live visualization of LLM applications

However, there are also some drawbacks to consider:

- Requires knowledge of LangchainJS to use it to full effect

- Likely a steep learning curve for those building LLM applications for the first time

User feedback is predominantly positive, highlighting the tool's flexibility and ease of integration as its main strengths.

Flowise FAQs

Q: Can I use FlowiseAI for free?

A: Yes, FlowiseAI is free for commercial and personal use.

Q: What technologies is FlowiseAI built on?

A: FlowiseAI is built with Node.js in TypeScript/JavaScript and uses LangchainJS.

Q: How do I deploy applications built with FlowiseAI?

A: Applications built with FlowiseAI can be deployed easily with Docker.

Q: Who can benefit from FlowiseAI?

A: Software developers, AI developers, business analysts, DevOps engineers, and data scientists.

Q: What are its common use cases?

A: The most common use cases are question-answer retrieval applications, language translation applications, and conversational agents with memory.