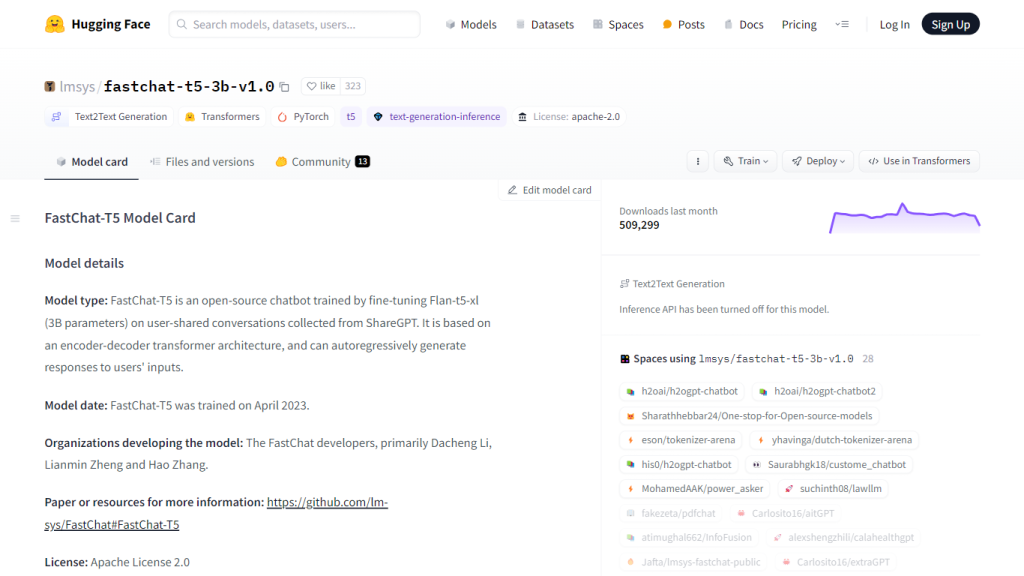

FastChat-T5 Overview

FastChat-T5 is an open-source chatbot model hosted on the Hugging Face platform, released in April 2023 by the FastChat team. It is fine-tuned from Flan-T5-XL, an encoder-decoder model with 3 billion parameters, on conversations collected from ShareGPT. The model is intended as a starting point for commercially oriented chatbots, and it also supports researchers working in NLP and ML.

Key Features & Benefits of FastChat-T5

The following are the key features of FastChat-T5 and the benefits they offer for research and commercial use:

- Model Architecture: An encoder-decoder transformer based on Flan-T5-XL, which gives the model strong language understanding and generation.

- Training Data: Fine-tuned on around 70,000 conversations collected from ShareGPT, which helps it handle a wide range of prompts and queries.

- Development Team: Built by the FastChat team, led by Dacheng Li, Lianmin Zheng, and Hao Zhang.

- Commercial and Research Applications: Useful for entrepreneurs and researchers working in NLP, ML, and AI.

- Licensing and Access: Open source under the Apache 2.0 License, which makes it accessible and open to collaboration.

FastChat-T5 Use Cases and Applications

FastChat-T5 is versatile enough to be applied across several sectors:

- Customer Care: Build chatbots that handle customer service and support requests, with the goal of faster response times and higher customer satisfaction.

- Healthcare: Build virtual assistants that provide medical information and assistance to patients, improving access to healthcare.

- Education: Create educational chatbots that help students with learning materials and answer academic questions.

- Research: Apply the model to advanced NLP and ML problems to explore new application areas and solutions.

Reported results suggest that FastChat-T5 can be effective in production. In one account, a technology startup that deployed the model on its customer service platform recorded a 30% reduction in response time, along with a noticeable increase in customer satisfaction.

How to Use FastChat-T5

FastChat-T5 is designed to be user-friendly and is easy to get started with. Here is a step-by-step guide:

- Access Model: Find FastChat-T5 on the Hugging Face website.

- Installation: Follow the installation instructions provided on the site to integrate the model into your application.

- Fine-tuning: Fine-tune the model on your own dataset to improve its performance for your specific use case.

- Deployment: Deploy the model in your chatbot application and begin engaging with end-users.
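The access and deployment steps might look like the following in Python. This is a minimal sketch, not the FastChat team's official usage. It assumes the `transformers`, `torch`, and `sentencepiece` packages are installed and that the checkpoint is published under the Hugging Face ID `lmsys/fastchat-t5-3b-v1.0`. The `build_prompt` helper and its plain-text prompt layout are hypothetical and chosen for illustration only.

```python
# Assumed Hugging Face model ID; verify it on the model page before use.
MODEL_ID = "lmsys/fastchat-t5-3b-v1.0"


def build_prompt(history, user_message):
    """Flatten prior (speaker, text) turns plus the new message into one string.

    Hypothetical layout for illustration; the official FastChat conversation
    template may differ.
    """
    lines = [f"{speaker}: {text}" for speaker, text in history]
    lines.append(f"Human: {user_message}")
    lines.append("Assistant:")
    return "\n".join(lines)


def generate_reply(prompt, max_new_tokens=128):
    """Load the model and generate one reply.

    The first call downloads several gigabytes of weights.
    """
    # Imported lazily so build_prompt works without the heavy dependencies.
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    # use_fast=False selects the slow SentencePiece tokenizer (an assumption
    # about what works best for this checkpoint).
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, use_fast=False)
    model = AutoModelForSeq2SeqLM.from_pretrained(MODEL_ID)

    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate_reply(build_prompt([], "What is an encoder-decoder model?"))` would then return the model's answer as a string. In a real deployment the tokenizer and model would be loaded once at startup rather than on every call.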

As a best practice, update the model periodically with newer data so that it stays relevant and responsive. Take time to explore the user interface to learn how to make the most of its features.
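Updating the model with newer data means first turning conversations into input/target pairs that a sequence-to-sequence model can train on. The sketch below shows one way to do this, assuming records shaped like the commonly shared ShareGPT export (a `conversations` list of `from`/`value` turns). The field names, the `to_seq2seq_pairs` helper, and the way context is joined are illustrative assumptions, not the FastChat team's actual preprocessing.

```python
def to_seq2seq_pairs(record):
    """Turn one conversation into (context, reply) training pairs.

    Each assistant turn becomes a target; everything said before it
    becomes the encoder input.
    """
    pairs = []
    context = []
    for turn in record["conversations"]:
        speaker = "Human" if turn["from"] == "human" else "Assistant"
        if speaker == "Assistant" and context:
            pairs.append(("\n".join(context) + "\nAssistant:", turn["value"]))
        context.append(f"{speaker}: {turn['value']}")
    return pairs


# Made-up example record in the assumed format.
example = {
    "conversations": [
        {"from": "human", "value": "What is T5?"},
        {"from": "gpt", "value": "A text-to-text transformer."},
        {"from": "human", "value": "Who released it?"},
        {"from": "gpt", "value": "Google Research."},
    ]
}

for source, target in to_seq2seq_pairs(example):
    print(repr(source), "->", repr(target))
```

The two-exchange example yields two pairs: the first uses only the opening question as input, and the second uses the full conversation up to the second question.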

How FastChat-T5 Works

FastChat-T5 uses an encoder-decoder transformer architecture to conduct conversations. This structure lets the model interpret input prompts effectively and produce coherent, contextually relevant responses. It was trained on a dataset of 70,000 conversations, which lets it cover a wide range of topics and questions.

When a query is entered, the encoder processes it to capture its context and intent. The decoder then generates a natural-sounding response, one token at a time.
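The encode-then-decode flow can be illustrated with a toy loop. The sketch below is not the real model: `toy_encode` and `toy_next_token` are hypothetical stand-ins for the transformer encoder and decoder. They only show that the input is encoded once, and that the response is then built step by step, with each step seeing the encoded input and the tokens generated so far.

```python
EOS = "</s>"  # end-of-sequence marker, as used by T5-style tokenizers


def toy_encode(prompt):
    """Stand-in for the encoder: reads the whole prompt once."""
    return prompt.lower().split()


def toy_next_token(memory, generated):
    """Stand-in for one decoder step.

    A real decoder attends over `memory` and `generated` to score every
    vocabulary token; this toy simply echoes the prompt back word by word.
    """
    position = len(generated)
    return memory[position] if position < len(memory) else EOS


def greedy_decode(prompt, max_tokens=20):
    """Encode once, then emit tokens until EOS or the length limit."""
    memory = toy_encode(prompt)      # encoder runs once per query
    generated = []
    for _ in range(max_tokens):      # decoder runs once per output token
        token = toy_next_token(memory, generated)
        if token == EOS:
            break
        generated.append(token)
    return " ".join(generated)


print(greedy_decode("Hello FastChat"))
```

The loop stops either when the decoder emits the end-of-sequence marker or when `max_tokens` is reached, which is the same pair of stopping conditions that real generation code relies on.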

Pros and Cons of FastChat-T5

Like any technology, FastChat-T5 has advantages and possible drawbacks:

Pros:

- High-quality interactions: Produces fluent, coherent responses that improve the user experience.

- Versatility: Can be applied across many different fields.

- Open Source: Encourages collaboration and innovation in the AI community.

Cons:

- Resource Consumption: Requires substantial computing resources for training and deployment.

- Initial Setup: Steep learning curve for users with limited experience in NLP and ML concepts.
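A rough sense of the resource cost comes from simple arithmetic on the parameter count. The sketch below estimates the memory needed just to hold 3 billion weights at a few common numeric precisions. It is a back-of-the-envelope calculation that assumes exactly 3e9 parameters and ignores activations, optimizer state, and framework overhead, so real usage will be higher, especially during training.

```python
PARAMS = 3_000_000_000  # "3 billion parameters", taken as a round figure

# Storage size of one parameter at each precision, in bytes.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(num_params, bytes_per_param):
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for dtype, size in BYTES_PER_PARAM.items():
    print(f"{dtype}: ~{weight_memory_gb(PARAMS, size):.0f} GB")
```

Under these assumptions the weights alone take about 12 GB in 32-bit floats, 6 GB in 16-bit floats, and 3 GB in 8-bit integers.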

User feedback has generally been positive, with the model's responses described as natural and relevant. Some users, however, would like easier access to resources that help with the initial setup.

FastChat-T5 FAQs

What is FastChat-T5?

FastChat-T5 is an encoder-decoder AI chatbot model designed to process text input and generate human-like responses.

Who are the developers behind the FastChat-T5 model?

FastChat-T5 was developed by the FastChat team, which included Dacheng Li, Lianmin Zheng, and Hao Zhang.

Which dataset has FastChat-T5 been trained on?

FastChat-T5 was fine-tuned on 70,000 conversations shared by users on ShareGPT.

What is FastChat-T5 for?

The model is intended for commercial chatbot applications and as a starting point for exploring natural language processing.

Where should I send questions regarding FastChat-T5?

Questions can be directed to the developers via the FastChat GitHub issues page.