What is GPT-NeoX-20B?

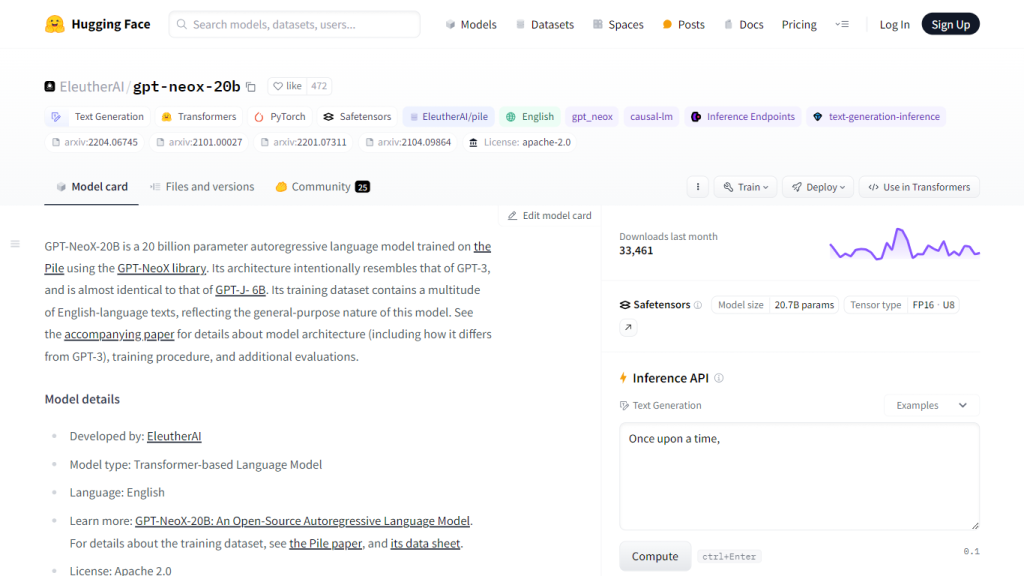

GPT-NeoX-20B is EleutherAI’s 20-billion-parameter autoregressive language model, and it is available on Hugging Face’s platform. Its architecture deliberately resembles that of OpenAI’s GPT-3, and it generates text in English only. It was trained on the Pile, a large and diverse dataset, which makes it a useful tool for a wide variety of NLP tasks. As an open-source project, GPT-NeoX-20B helps democratize artificial intelligence by making a large model available to researchers and developers. Users should nonetheless keep its limitations and biases in mind.

GPT-NeoX-20B’s Key Features & Benefits

Model Size: With 20 billion parameters, it offers substantial text generation capabilities.

Training Dataset: It was trained on the Pile, a dataset known for the breadth and diversity of its content.

Open Science: Its weights and code are openly available, in keeping with EleutherAI’s open-science approach.

Model Accessibility: It can be loaded directly through the Hugging Face Transformers library.

Community Support: Community contributions and discussion take place in venues such as the EleutherAI Discord.

Key benefits of GPT-NeoX-20B include its strength in text generation, its accessibility as a base for further innovation, and the large community around EleutherAI.
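The "20 billion" figure can be sanity-checked with a standard back-of-the-envelope formula for GPT-style decoder-only transformers: each layer contributes roughly 12·d² weights (4·d² for the query, key, value and output projections, 8·d² for a feed-forward block whose hidden size is 4·d), plus the input and output embedding matrices. This is a minimal sketch that ignores biases and layer norms. The configuration values (44 layers, hidden size 6144, vocabulary padded to 50,432 tokens, separate input and output embeddings) are assumptions taken from EleutherAI’s published description of the model and should be checked against its `config.json`.

```python
def approx_decoder_params(n_layers: int, d_model: int, vocab_size: int,
                          tied_embeddings: bool = False) -> int:
    """Rough parameter count for a GPT-style decoder-only transformer.

    Per layer: 4*d^2 for the Q, K, V and output projections plus 8*d^2 for a
    feed-forward block with hidden size 4*d. Biases and layer norms are ignored.
    """
    per_layer = 12 * d_model * d_model
    n_embedding_matrices = 1 if tied_embeddings else 2  # input and output embeddings
    embeddings = n_embedding_matrices * vocab_size * d_model
    return n_layers * per_layer + embeddings


# Assumed GPT-NeoX-20B configuration (verify against the model's config.json).
estimate = approx_decoder_params(n_layers=44, d_model=6144, vocab_size=50_432)
print(f"{estimate:,} parameters (~{estimate / 1e9:.1f}B)")
# prints: 20,551,041,024 parameters (~20.6B)
```

The estimate lands within a fraction of a percent of 20 billion, which shows that almost all of the model’s size comes from the per-layer attention and feed-forward weights rather than the embeddings.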

GPT-NeoX-20B’s Use Cases and Applications

GPT-NeoX-20B is used mainly for research and development in natural language processing. Specific use cases include:

- Text Generation: Generating human-like text for chatbots, content creation, and similar applications.

- Language Translation: It can be prompted for translation-style tasks, but because it was trained on English-language text, it is not well suited to translating between languages or generating text in other languages.

- Sentiment Analysis: Analyzing the sentiment of text data, for example in market research or customer feedback.
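A base model such as GPT-NeoX-20B has no built-in sentiment classifier, so one common approach is few-shot prompting: show the model a handful of labeled examples and let it complete the label for a new text. The sketch below only builds the prompt and parses the reply; the helper names `build_sentiment_prompt` and `parse_sentiment` and the "Review:/Sentiment:" format are hypothetical choices for illustration, and the completion itself would come from the model’s `generate` output.

```python
from typing import List, Optional, Tuple

LABELS = ("positive", "negative")  # hypothetical label set


def build_sentiment_prompt(examples: List[Tuple[str, str]], text: str) -> str:
    """Assemble a few-shot prompt: labeled examples first, then the unlabeled text."""
    blocks = [f"Review: {review}\nSentiment: {label}" for review, label in examples]
    blocks.append(f"Review: {text}\nSentiment:")  # the model should continue with a label
    return "\n\n".join(blocks)


def parse_sentiment(completion: str) -> Optional[str]:
    """Take the first word the model produced and map it to a known label."""
    words = completion.split()
    if not words:
        return None
    first = words[0].strip(".,!").lower()
    return first if first in LABELS else None


prompt = build_sentiment_prompt(
    [("The battery lasts all day.", "positive"),
     ("It broke after a week.", "negative")],
    "Setup was quick and painless.",
)
print(prompt)
```

In practice you would pass `prompt` to the model, decode only the newly generated tokens, and call `parse_sentiment` on them; a reply that does not start with a known label is treated as unparseable rather than guessed.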

GPT-NeoX-20B could also contribute to sectors such as healthcare, finance, and entertainment, for example by automating data processing and text generation tasks.

How to Use GPT-NeoX-20B

Below is a step-by-step process for using GPT-NeoX-20B for text generation:

- Install the required libraries, mainly the Hugging Face Transformers library.

- Load the model and its tokenizer via the AutoModelForCausalLM and AutoTokenizer interfaces, as in the code below.

- For best results, fine-tune the model further on your specific downstream task, and be aware of the ethical concerns of publishing AI-generated content.

```python
# Directly load the model using Hugging Face Transformers
from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("EleutherAI/gpt-neox-20b")
tokenizer = AutoTokenizer.from_pretrained("EleutherAI/gpt-neox-20b")
```
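Loading the model is only half the job; text is produced with the model’s `generate` method. The sketch below wraps the usual Transformers calls in a small helper, `generate_text` (a hypothetical name), and assumes `model` and `tokenizer` have already been loaded with `from_pretrained` as above. Passing `torch_dtype=torch.float16` to `from_pretrained` roughly halves memory compared with full precision, and `device_map="auto"` (which needs the `accelerate` package) spreads the weights across available devices. The sampling settings are illustrative rather than recommended values, and reusing the end-of-sequence token as `pad_token_id` is a common convention for GPT-style models, assumed here to avoid a padding warning.

```python
def generate_text(model, tokenizer, prompt: str,
                  max_new_tokens: int = 50, temperature: float = 0.9) -> str:
    """Sample a continuation of `prompt` and return prompt + continuation as text."""
    # Tokenize the prompt into PyTorch tensors on the same device as the model.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(
        **inputs,
        do_sample=True,                       # sample instead of greedy decoding
        temperature=temperature,              # <1.0 sharpens, >1.0 flattens the distribution
        max_new_tokens=max_new_tokens,        # cap on the number of newly generated tokens
        pad_token_id=tokenizer.eos_token_id,  # reuse EOS for padding (assumption)
    )
    # Decoder-only models return the prompt tokens followed by the new tokens.
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

For example, `generate_text(model, tokenizer, "GPT-NeoX-20B is")` returns the prompt followed by up to 50 sampled tokens; because sampling is enabled, repeated calls give different continuations.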

How GPT-NeoX-20B Works

GPT-NeoX-20B is an autoregressive language model, meaning it generates text one token at a time, conditioning each new token on the tokens before it. It is built on the transformer architecture, which was designed to work with sequential data. The model was pre-trained on the Pile, a large, diverse collection of English-language text drawn from many sources. This extensive training gives GPT-NeoX-20B the ability to understand and produce coherent, contextually relevant text.
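Autoregressive generation reduces to a simple loop: score every candidate next token given the tokens so far, pick one, append it, and repeat until a stop token or a length limit. The sketch below uses greedy selection (always the highest-scoring token) and a toy bigram lookup table standing in for the network; the table, its scores, and the `<eos>` stop token are invented for illustration. A real model such as GPT-NeoX-20B conditions on the entire context, not just the last token, and is often sampled rather than decoded greedily.

```python
from typing import Callable, Dict, List

ScoreFn = Callable[[List[str]], Dict[str, float]]


def greedy_generate(next_token_scores: ScoreFn, prompt: List[str],
                    max_new_tokens: int, eos: str = "<eos>") -> List[str]:
    """Extend `prompt` one token at a time, always taking the top-scoring token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        scores = next_token_scores(tokens)        # the model sees all previous tokens
        next_tok = max(scores, key=scores.get)    # greedy choice
        tokens.append(next_tok)
        if next_tok == eos:                       # stop once the end token appears
            break
    return tokens


# Toy stand-in for a language model: next-token scores depend only on the last token.
BIGRAMS: Dict[str, Dict[str, float]] = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "<eos>": 0.3},
    "sat": {"down": 0.8, "<eos>": 0.2},
    "down": {"<eos>": 1.0},
}


def toy_next_token_scores(tokens: List[str]) -> Dict[str, float]:
    return BIGRAMS.get(tokens[-1], {"<eos>": 1.0})


print(greedy_generate(toy_next_token_scores, ["the"], max_new_tokens=10))
# prints: ['the', 'cat', 'sat', 'down', '<eos>']
```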

GPT-NeoX-20B Pros and Cons

The advantages of GPT-NeoX-20B are as follows:

- High-Quality Text Generation: It generates coherent, contextually relevant text.

- Open Source: Released under the Apache 2.0 license, it is free to use, which enables further research and innovation in this area.

- Large Community Support: EleutherAI’s channels host an active, engaged community.

Possible disadvantages:

- Biases: Biases present in the training dataset carry over, in some form, into the generated output.

- Resource Intensive: Fine-tuning and deploying the model are computationally expensive.

- Not Ready for Direct Deployment: It is not intended to be deployed as-is; additional fine-tuning is required for any specific application.
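To put "resource intensive" into numbers, the sketch below estimates memory from the parameter count. It assumes a round 20 billion parameters and counts only the weights (plus optimizer state for fine-tuning); the 16-bytes-per-parameter figure for mixed-precision Adam is a common rule of thumb, not a measured value. Real requirements are higher once activations, the attention key/value cache, and framework overhead are included.

```python
BYTES_PER_PARAM = {
    "fp32": 4,  # full precision
    "fp16": 2,  # half precision (bf16 is the same size)
    "int8": 1,  # 8-bit quantized weights
}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def adam_finetune_memory_gb(n_params: float, bytes_per_param: int = 16) -> float:
    """Rule-of-thumb footprint for mixed-precision Adam fine-tuning:
    fp16 weights (2) + fp16 gradients (2) + fp32 master weights, momentum, variance (4 + 4 + 4)."""
    return n_params * bytes_per_param / 1e9


N_PARAMS = 20e9  # assumed round figure; activations and KV cache are not counted

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: ~{weight_memory_gb(N_PARAMS, dtype):.0f} GB for the weights alone")
print(f"Adam fine-tuning: ~{adam_finetune_memory_gb(N_PARAMS):.0f} GB before activations")
```

Even in half precision, the weights alone (about 40 GB) exceed the memory of a single 24 GB GPU, which is why the model is typically run across several GPUs or with quantized weights.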

User feedback generally highlights its impressive capabilities but also notes the need for careful handling of biases and ethical considerations.

Conclusion about GPT-NeoX-20B

GPT-NeoX-20B is a powerful tool for text generation and other NLP tasks, and its open-source release makes it valuable to researchers and to the large developer community built around it. It does have drawbacks, chiefly its biases and its demand for computational resources, but its benefits far outweigh them. Future updates may push its capabilities further still.

GPT-NeoX-20B FAQs

- What is the purpose of GPT-NeoX-20B?
  The model is primarily a research tool, and it can be fine-tuned for a particular downstream task.

- Can GPT-NeoX-20B be used in customer-facing products without additional tuning?
  No. It should not be used for unsupervised interaction with humans.

- Was GPT-NeoX-20B trained on a wide variety of text sources?
  Yes. It was trained on the Pile dataset, which includes a very wide variety of English-language texts.

- How do I generate text with the GPT-NeoX-20B model?
  Load the model with the Transformers library’s AutoModelForCausalLM, then call its generate method.

- What is the Pile dataset?
  The Pile is a large-scale, English-language dataset drawn from 22 diverse sources; it is the pre-training dataset for GPT-NeoX-20B.