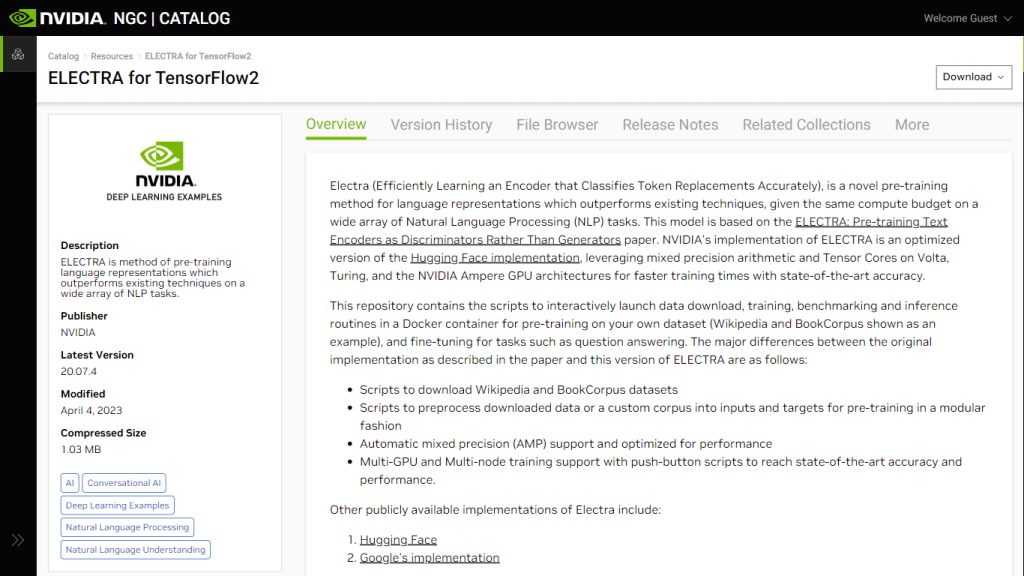

What is ELECTRA for TensorFlow2?

ELECTRA for TensorFlow2, available on NGC, is NVIDIA's implementation of ELECTRA, a method for pre-training language representations for Natural Language Processing. ELECTRA is an efficient encoder that classifies token replacements, and it outperforms existing methods that use a similar amount of compute on a wide variety of NLP tasks. NVIDIA's implementation is based on the ELECTRA research paper and adds optimizations including mixed precision arithmetic and Tensor Core utilization on Volta, Turing, and NVIDIA Ampere GPU architectures. As a result, training is faster without loss of state-of-the-art accuracy.

ELECTRA for TensorFlow2’s Key Features & Benefits

Top Features:

- Mixed Precision Support: Mixed precision arithmetic enables faster training on compatible NVIDIA GPU architectures.

- Multi-GPU and Multi-Node Training: Train on multiple GPUs or across nodes and speed up model development.

- Download and preprocessing scripts of datasets for easy setup of the pre-training and fine-tuning processes.

- Advanced Model Architecture: Generator-Discriminator scheme, which can learn more effective language representations.

- Optimized Performance: Optimized for Tensor Cores; leverage Automatic Mixed Precision to train models faster.

Benefits:

- Faster training while maintaining high accuracy.

- Easy to use, with provided scripts and tools for setup and running.

- Optimized for modern NVIDIA GPU architectures, hence improving performance and efficiency.

- Supports a wide range of NLP tasks, making it versatile across applications.

Use Cases and Applications of ELECTRA for TensorFlow2

ELECTRA for TensorFlow2 can be applied in a number of NLP tasks, such as:

- Question Answering: Fine-tune ELECTRA to build models that answer questions about a given context.

- Text Classification: Classify texts into predefined classes with very high accuracy.

- Named Entity Recognition (NER): Identify the entities within a text and classify them.

Industries that can benefit from ELECTRA for TensorFlow2 include:

- Healthcare: Medical document analysis and better management of patients' data.

- Finance: Improved customer support systems and fraud detection mechanisms.

- E-commerce: Personalized customer experiences through better product suggestions.

How to use ELECTRA with TensorFlow2: Step-by-Step Guide

- Download an ELECTRA model from NVIDIA NGC.

- Prepare your dataset using the given preprocessing scripts.

- Start pre-training, adding the --amp flag to enable Automatic Mixed Precision.

- Fine-tune the pre-trained model on your specific NLP task using the fine-tuning scripts.

- Evaluate and benchmark the model for performance criteria.
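As a rough illustration of the --amp step, the sketch below shows how a training script might expose such a flag and map it to a precision policy. Only the flag name comes from the guide above; the parser, the `precision_policy` helper, and the way the flag is consumed are hypothetical and are not taken from NVIDIA's scripts, which may handle it differently.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical launcher: only the --amp flag is named in the guide.
    parser = argparse.ArgumentParser(description="Illustrative pre-training launcher")
    parser.add_argument("--amp", action="store_true",
                        help="enable Automatic Mixed Precision")
    return parser


def precision_policy(amp: bool) -> str:
    # "mixed_float16" and "float32" are Keras mixed precision policy names.
    # In a TensorFlow 2.4+ program the returned name could be passed to
    # tf.keras.mixed_precision.set_global_policy(...); that call is left out
    # here so the sketch runs without TensorFlow installed.
    return "mixed_float16" if amp else "float32"


# Simulate launching with and without the flag.
with_amp = build_parser().parse_args(["--amp"])
without_amp = build_parser().parse_args([])
print("with --amp:   ", precision_policy(with_amp.amp))
print("without --amp:", precision_policy(without_amp.amp))
```

Keeping the flag a simple boolean means the same command line can be benchmarked with and without mixed precision by adding or removing one argument.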

Additional Tips and Best Practices:

- Utilize multi-GPU and multi-node setups to speed up the training process.

- Monitor the training process regularly and adjust settings as needed.

- Exploit NVIDIA's Tensor Core optimizations for better performance.

How ELECTRA for TensorFlow2 Works

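ELECTRA departs from models such as BERT in that it uses a generator-discriminator framework. A generator proposes plausible replacements for some of the input tokens, and a discriminator predicts, for each token in the input sequence, whether it is original or replaced. The setup resembles a GAN, but the generator is trained with maximum likelihood rather than adversarially. Because the discriminator learns from every token instead of only a masked subset, ELECTRA learns more efficiently.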
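The data flow of this framework can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the NGC implementation: the `corrupt` function is hypothetical, and the "generator" here simply samples uniformly from a small vocabulary, whereas a real generator is a small masked language model. What the sketch does show is how the discriminator's per-token labels are derived.

```python
import random


def corrupt(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) for replaced-token detection.

    labels[i] is 1 if the token at position i differs from the original,
    and 0 otherwise.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            # Stand-in generator (assumption): uniform sample from the vocabulary.
            new_tok = rng.choice(vocab)
        else:
            new_tok = tok
        corrupted.append(new_tok)
        # If the generator happens to reproduce the original token, the
        # position is labeled "original", not "replaced".
        labels.append(int(new_tok != tok))
    return corrupted, labels


tokens = "the chef cooked the meal".split()
vocab = ["the", "chef", "cooked", "ate", "meal", "a"]
corrupted, labels = corrupt(tokens, vocab, mask_prob=0.5, seed=1)
print(corrupted)  # input the discriminator would see
print(labels)     # one binary target per token
```

Every position receives a label, so the discriminator gets a training signal from the whole sequence rather than only from the positions that were selected for replacement.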

Technical Overview:

- Architecture: Generator-discriminator framework to identify token replacements.

- Algorithms: Replaced-token detection for pre-training, followed by task-specific fine-tuning.

- Workflow: Prepare data, train the model, fine-tune for specific tasks, test performance.

ELECTRA for TensorFlow2 Pros and Cons

Pros

- With Mixed Precision and Tensor Core optimizations, it provides faster training times.

- Very accurate on a broad range of NLP tasks.

- User-friendly, with comprehensive scripts and tools for setting up and running the process.

Cons

- Full optimizations require compatible NVIDIA GPUs.

- The learning curve may be steeper for users who are new to mixed precision training.

Conclusion about ELECTRA for TensorFlow2

ELECTRA for TensorFlow2 is a useful tool for pre-training language representations, offering fast training times and high accuracy. It is optimized for NVIDIA GPU architectures and applies to several NLP tasks. It ships with full scripts and tools, which makes it user-friendly, although the full optimizations require compatible hardware. In short, ELECTRA for TensorFlow2 is a practical solution for anyone looking to improve their NLP pipelines.

ELECTRA for TensorFlow2 FAQs

- What is ELECTRA in the context of NLP?

  ELECTRA is a method of pre-training language representations that uses a generator-discriminator framework, in which a discriminator learns to tell original tokens from replaced ones in the input sequence. The resulting representations improve accuracy on NLP tasks.

- Why does NVIDIA's version of ELECTRA help in training?

  NVIDIA's optimized version of ELECTRA is designed to run on Volta, Turing, and NVIDIA Ampere GPU architectures, using their mixed precision and Tensor Core capabilities to accelerate training.

- How do you enable Automatic Mixed Precision in ELECTRA's implementation?

  To enable AMP, add the --amp flag to the training script in question. This turns on TensorFlow's Automatic Mixed Precision, which uses half-precision floats to speed up computation while preserving important information in full-precision weights.

- What is Mixed Precision Training?

  Mixed precision training combines different numerical precisions, fast FP16 and more accurate FP32, to speed up training while avoiding loss of information in critical sections.

- What support is covered in NVIDIA's ELECTRA for TensorFlow2?

  It includes scripts to download and preprocess data, support for multi-GPU and multi-node training, and utilities to pre-train and fine-tune inside a Docker container, among others.
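To make the mixed precision answers above more concrete, the following standard-library sketch emulates FP16 and FP32 rounding and shows why some values are kept in full precision. It is an illustration only and does not reproduce what TensorFlow does internally; the helper names and the fixed loss scale of 1024 are assumptions chosen for the example. Loss scaling, shown in the second half, is a standard companion technique in mixed precision training.

```python
import struct


def to_fp16(x: float) -> float:
    # Round a Python float to the nearest IEEE 754 half-precision value.
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x: float) -> float:
    # Round a Python float to the nearest IEEE 754 single-precision value.
    return struct.unpack("f", struct.pack("f", x))[0]


weight, update = 1.0, 1e-4

# Near 1.0, adjacent FP16 values are 2**-10 apart, so a 1e-4 update is
# rounded away when the weight itself is stored in FP16.
fp16_result = to_fp16(to_fp16(weight) + to_fp16(update))

# An FP32 copy of the weight keeps the same update.
fp32_result = to_fp32(to_fp32(weight) + to_fp32(update))

# Very small gradients underflow to zero in FP16 ...
tiny_grad = 1e-8
unscaled = to_fp16(tiny_grad)

# ... unless they are scaled up before the FP16 cast and scaled back in FP32.
loss_scale = 1024.0  # illustrative fixed scale (assumption)
scaled = to_fp16(tiny_grad * loss_scale)
recovered = to_fp32(scaled) / loss_scale

print("FP16 weight after update:", fp16_result)
print("FP32 weight after update:", fp32_result)
print("FP16 gradient without scaling:", unscaled)
print("gradient recovered with scaling:", recovered)
```

The pattern is that arithmetic-heavy work can run in FP16 for speed, while weight updates and other precision-sensitive values stay in FP32.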