DreamFusion: Revolutionizing 3D Modeling with Text-to-3D Synthesis

DreamFusion is a text-to-3D synthesis method from researchers at Google Research and UC Berkeley. It uses a pretrained 2D text-to-image diffusion model to turn text descriptions into 3D models. Because it builds on a 2D model, it sidesteps the two things a native 3D diffusion approach would need: large-scale datasets of labeled 3D data and efficient architectures for denoising 3D data.

Optimizing Neural Radiance Fields with a 2D Prior

DreamFusion uses a pretrained 2D text-to-image diffusion model as a prior and introduces a loss based on probability density distillation. A randomly initialized Neural Radiance Field (NeRF) is optimized by gradient descent so that its 2D renderings from random camera angles achieve a low loss under the diffusion model. The resulting 3D model can be viewed from any angle, relit under arbitrary illumination, and composited into any 3D environment.
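To make the optimization loop concrete, here is a minimal NumPy sketch of a score-distillation-style update. It is an illustration under stated assumptions, not DreamFusion's implementation: the "renderer" is a hypothetical linear map standing in for NeRF volume rendering, `predict_noise` is a toy closed-form denoiser standing in for the text-conditioned diffusion model, and the weighting `w` and the noise schedule `alphas_cumprod` are arbitrary choices. The only part taken from the method itself is the shape of the gradient: a weight times (predicted noise minus injected noise), pushed back through the renderer.

```python
import numpy as np

def sds_grad(theta, render, render_jac, predict_noise, alphas_cumprod, rng):
    """One stochastic estimate of an SDS-style gradient with respect to theta."""
    x = render(theta)                              # differentiable "rendering"
    t = int(rng.integers(1, len(alphas_cumprod)))  # random noise level
    a_bar = alphas_cumprod[t]
    eps = rng.standard_normal(x.shape)             # injected Gaussian noise
    x_t = np.sqrt(a_bar) * x + np.sqrt(1.0 - a_bar) * eps
    eps_hat = predict_noise(x_t, t)                # frozen prior, never updated
    w = 1.0 - a_bar                                # assumed weighting w(t)
    # Chain rule through the renderer only; nothing flows through the prior.
    return render_jac(theta).T @ (w * (eps_hat - eps))

# --- Toy stand-ins (assumptions for illustration, not DreamFusion components) ---
A = np.kron(np.eye(4), np.ones((2, 1)))   # hypothetical renderer: 4 params -> 8 "pixels"
theta_true = np.array([1.0, -2.0, 0.5, 3.0])
x_target = A @ theta_true                 # the single image the toy prior "believes in"
alphas_cumprod = np.linspace(0.9999, 0.01, 100)

def render(theta):
    return A @ theta

def render_jac(theta):
    return A                              # Jacobian of a linear renderer is constant

def predict_noise(x_t, t):
    # Exact noise prediction when the data distribution is a point mass at x_target.
    a_bar = alphas_cumprod[t]
    return (x_t - np.sqrt(a_bar) * x_target) / np.sqrt(1.0 - a_bar)

def optimize(steps=500, lr=0.1, seed=0):
    rng = np.random.default_rng(seed)
    theta = np.zeros(4)                   # stand-in for a randomly initialized NeRF
    for _ in range(steps):
        g = sds_grad(theta, render, render_jac, predict_noise, alphas_cumprod, rng)
        theta = theta - lr * g            # plain gradient descent
    return theta

theta_fit = optimize()
print(np.round(theta_fit, 3))
```

In this toy setting the parameters drift toward the ones whose rendering the prior considers most likely. Note that the update never differentiates through the denoiser itself, which is what lets the diffusion model be treated as a fixed critic of the renderings.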

No 3D Training Data Required

DreamFusion requires no 3D training data and no modifications to the image diffusion model, which is used as-is. This demonstrates that pretrained image diffusion models are effective priors for tasks beyond 2D image generation.

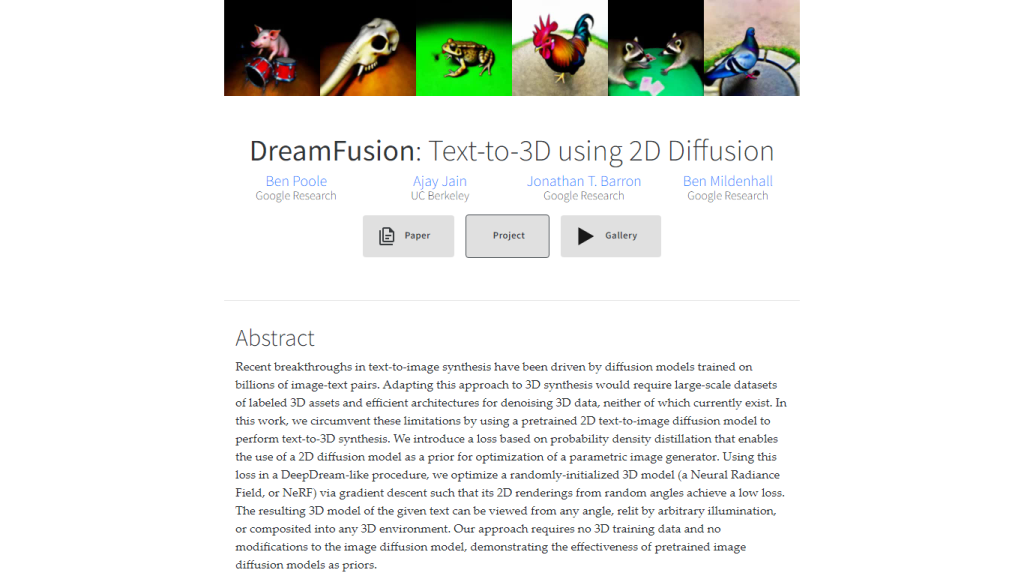

Explore DreamFusion’s Gallery and Create Your Own 3D Models

Browse DreamFusion's gallery to see the range of objects and scenes it can generate from text prompts, then try describing a 3D model of your own.

Potential Use Cases: Text-to-3D synthesis of this kind could be applied in fields such as architecture, gaming, and animation. Architects could draft 3D models of buildings from text descriptions, while game developers and animators could generate 3D characters and environments the same way. By reducing the manual work needed to produce a first 3D asset, DreamFusion makes 3D modeling more accessible to professionals and hobbyists alike.