What is Hugging Face Transformers?

Hugging Face Transformers is a machine learning library that provides state-of-the-art pretrained models for PyTorch, TensorFlow, and JAX behind a simple interface. It aims to offer high-quality model implementations so that researchers and developers can work with state-of-the-art NLP models more easily.

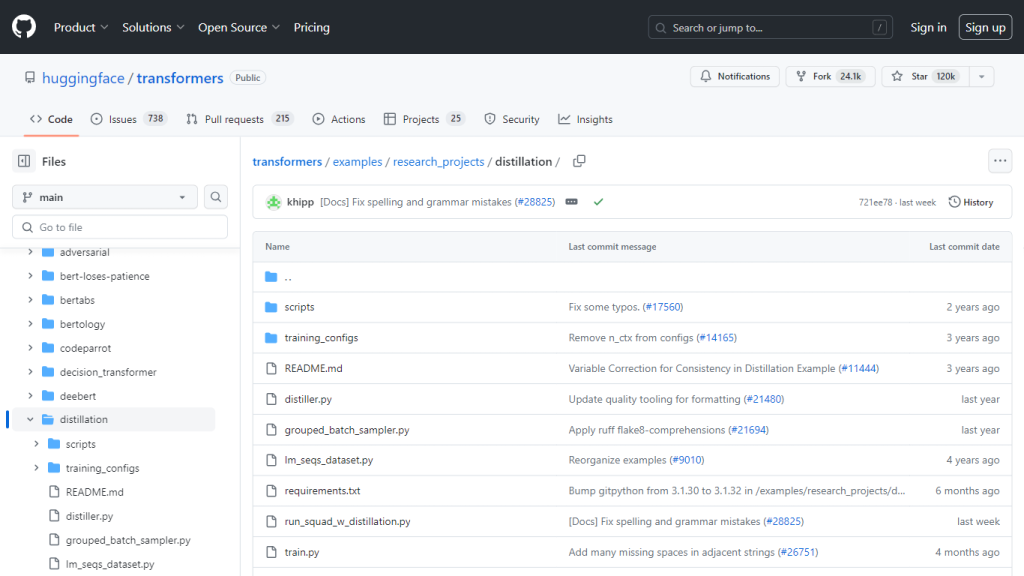

The development of Hugging Face Transformers has been driven by growing demand for more accessible and efficient NLP models. A key part of this effort is ‘distillation’, a research project that uses knowledge distillation to train smaller, faster versions of large, complex models without losing much performance.

Key Features & Benefits: Hugging Face Transformers

- Scripts and Configurations: The repository includes example scripts and the relevant configurations for training distilled models such as DistilBERT, DistilRoBERTa, and DistilGPT2.

- Updates and Bug Fixes: Updates and bug fixes that improve performance and reliability are documented.

- Detailed Documentation: Each model comes with a detailed explanation and usage instructions, which makes it easier to implement.

- State-of-the-Art Models: The library provides access to high-performance models optimized for both speed and size, which supports efficient deployment.

- Multilingual Support: Some distilled models, such as the multilingual variant of DistilBERT, support multiple languages, which makes them useful for use cases around the world.

By using Hugging Face Transformers, users can harness efficient NLP models that are easy to deploy and maintain. The library’s focus on continuous improvement ensures that users have access to the latest advancements and fixes.

Use Cases and Applications of Hugging Face Transformers

Hugging Face Transformers can be applied across many industries and sectors. Its models perform well on tasks such as text classification, sentiment analysis, machine translation, and question answering. Examples include the following:

- Customer Support: Automate responses to customers for better customer service using NLP models.

- Healthcare: Analyze medical records and support medical research through natural language understanding.

- Finance: Improve fraud detection systems and support financial reporting automation.

Case studies of successful implementations include improving chatbot performance in customer service and building translation systems that handle multiple languages simultaneously.

How to Use Hugging Face Transformers

Using Hugging Face Transformers is straightforward. Here’s how to go about it:

- Installation: Install the library using pip or conda.

- Select a Model: Choose an appropriate model from the Hugging Face model hub.

- Training: If necessary, train distilled models using the provided scripts and configurations.

- Implementation: Integrate the model into your application, using the detailed documentation and examples as a guide.

As a best practice, keep the library and its models up to date, and refer to the extensive documentation for specific use cases or debugging.
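The steps above can be sketched in a few lines of Python. This is a minimal illustration, not the only way to use the library: it assumes the library was installed with `pip install transformers` plus a backend such as PyTorch, and it assumes the Hub checkpoint `distilbert-base-uncased-finetuned-sst-2-english` as an example model. The helper names `build_classifier` and `top_label` are hypothetical and exist only for this sketch.

```python
def build_classifier(model_name="distilbert-base-uncased-finetuned-sst-2-english"):
    """Load a sentiment-analysis pipeline for a Hub checkpoint.

    The checkpoint name is an assumption chosen for illustration;
    any compatible text-classification model could be passed instead.
    """
    # Imported lazily so this file can be loaded even where the
    # library is not installed; the import runs on first call.
    from transformers import pipeline

    return pipeline("sentiment-analysis", model=model_name)


def top_label(predictions):
    """Return the highest-scoring label from a list of
    {'label': ..., 'score': ...} dicts, the shape a
    text-classification pipeline returns for one input."""
    return max(predictions, key=lambda p: p["score"])["label"]
```

Calling `build_classifier()` downloads the checkpoint on first use. The returned object can then be called on a string, for example `classifier("This library is easy to use.")`, and the resulting list of label and score dicts can be passed to `top_label`.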

How Hugging Face Transformers Works

Hugging Face Transformers builds on state-of-the-art algorithms and models to make recent NLP developments usable in practice. At its heart are a few core technical concepts:

- Knowledge Distillation: A small model learns to perform a task by imitating the outputs of a larger, more complex model.

- Transformer Architecture: An architecture that uses attention mechanisms to capture long-range dependencies in text.

- Multilinguality: Some models, such as the multilingual variant of DistilBERT, were trained on 104 languages, so they can be applied to tasks in many different languages.
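To make the attention mechanism mentioned above more concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is a teaching illustration only, not the library's implementation: real models use batched tensor operations, multiple heads, and learned projections. The function names are chosen for this sketch.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each query is compared with every key; the resulting weights
    decide how much of each value vector flows into the output.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one dimension at a time.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs
```

Because every position can attend to every other position directly, the distance between two tokens does not limit how strongly they can influence each other, which is what allows long-range dependencies to be captured.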

The workflow usually involves picking a pre-trained model, fine-tuning on specific data, and then deploying it for inference in several applications.

Hugging Face Transformers Pros and Cons

Pros:

- High-performance, state-of-the-art models, regular updates, and community support.

- Thorough documentation along with examples for ease of use.

- Multilingual support for different applications.

Possible Drawbacks:

- Large models can be computationally expensive to train.

- The advanced features can be complex for beginners to understand and implement.

User feedback is generally positive; most users praise the robustness of the library and the Hugging Face team’s continuous work to improve it.

Conclusion on Hugging Face Transformers

Hugging Face Transformers is one of the most powerful and feature-rich tools in NLP, offering a collection of state-of-the-art models, thorough documentation, and community support. It has proven versatile and efficient across many applications, from customer support to healthcare. The library is likely to keep evolving, with further updates that improve it and add functionality.

Future improvements may include better models, support for more languages, and further speed and performance optimizations.

Hugging Face Transformers FAQs

What is Distil* in Hugging Face Transformers?

Distil* is a class of compressed models: smaller, faster, lighter versions of the originals that retain much of their performance while being more efficient.

What is Knowledge Distillation?

Knowledge distillation is a technique in which a smaller model (the student) is trained to perform a task about as well as a larger model (its teacher) by learning from the teacher’s outputs.
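As a rough illustration of the idea, the sketch below computes a common form of the soft-target distillation loss: the KL divergence between the teacher’s and the student’s temperature-softened output distributions. It is plain Python for readability, and it is an assumption-laden sketch rather than the exact objective used by the repository’s training scripts, which may combine several loss terms. The function names and the default temperature are chosen for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax with a temperature; higher values flatten the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor is a common convention that keeps gradient
    magnitudes comparable across different temperatures.
    """
    p = softmax(teacher_logits, temperature)  # teacher: the target
    q = softmax(student_logits, temperature)  # student: the prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2
```

The loss is zero when the student’s logits match the teacher’s and grows as the two distributions diverge, so minimizing it pushes the student toward the teacher’s behavior.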

Where can one get examples for distillation for DistilBERT, DistilRoBERTa, and DistilGPT2?

Examples for distillation can be found in the ‘distillation’ folder of the official Transformers GitHub repository.

How frequently are the distilled models updated and maintained?

Updates are shared regularly, and fixes for identified bugs are recorded in the update log of the repository.

How many languages does DistilBERT support?

The multilingual variant of DistilBERT (DistilmBERT) supports 104 languages, as listed in the repository, which makes it applicable to a wide range of multilingual tasks.