What is Dolly-v2-12b?

Dolly-v2-12b is a 12-billion-parameter, instruction-following language model released by Databricks. It was fine-tuned from EleutherAI’s Pythia-12b model to follow instructions and produce high-quality output across a wide range of tasks, from brainstorming and classification to summarization. The model is licensed for commercial use, which makes it practical for many applications.

Key Features & Benefits of Dolly-v2-12b

Large Model Size:

With 12 billion parameters, Dolly-v2-12b can generate complex, nuanced text.

Better Instruction Following:

It follows instructions far better than Pythia-12b, the foundation model it was fine-tuned from.

Fine-Tuned Dataset:

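It is fine-tuned on databricks-dolly-15k, a dataset of roughly 15,000 instruction/response records written by Databricks employees. The records span tasks such as brainstorming, classification, open and closed question answering, information extraction, and summarization. This diversity improves the model’s ability to follow instructions.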
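A per-category tally shows the mix of tasks the dataset covers. The sketch below assumes each record is a dict with `instruction`, `context`, `response`, and `category` fields, which matches the published dataset. The sample rows are invented for illustration, and `count_by_category` is a hypothetical helper. With the Hugging Face `datasets` library, the real data could be loaded with `load_dataset("databricks/databricks-dolly-15k", split="train")` and passed in the same way.

```python
from collections import Counter
from typing import Iterable, Mapping


def count_by_category(records: Iterable[Mapping[str, str]]) -> Counter:
    """Tally how many instruction/response records fall into each task category."""
    return Counter(record["category"] for record in records)


# Hypothetical toy records shaped like databricks-dolly-15k rows
# (fields: instruction, context, response, category).
sample = [
    {"instruction": "Suggest names for a bakery.", "context": "",
     "response": "Rise & Shine, Crumb Club", "category": "brainstorming"},
    {"instruction": "Is a tomato a fruit or a vegetable?", "context": "",
     "response": "Botanically, a fruit.", "category": "classification"},
    {"instruction": "Summarize the passage.", "context": "A long passage...",
     "response": "A short summary.", "category": "summarization"},
    {"instruction": "Give three ideas for a weekend trip.", "context": "",
     "response": "Hiking, a museum visit, a lake day.", "category": "brainstorming"},
]

print(count_by_category(sample).most_common())
```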

Open Science Commitment:

Dolly-v2-12b is released under a permissive license that allows commercial use, in keeping with the goal of democratizing AI.

Flexible Usage:

The model can run on a range of GPU setups and integrates easily with the Hugging Face Transformers library.
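A quick back-of-the-envelope calculation shows why the GPU setup matters. Weight memory is roughly the parameter count multiplied by the bytes per parameter. The sketch below uses the nominal 12 billion parameters and counts weights only, so real usage is higher once activations and the generation cache are added. The Dolly model card recommends loading in `torch.bfloat16` where supported to reduce memory. The function name `weight_memory_gb` is hypothetical.

```python
# Approximate bytes per parameter for common weight dtypes.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "float16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in decimal GB (1 GB = 1e9 bytes).

    Activations, the generation (KV) cache, and framework overhead are NOT included.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("float32", "bfloat16", "int8"):
    print(f"{dtype:>8}: ~{weight_memory_gb(12e9, dtype):.0f} GB")
```

At bfloat16, the weights alone take about 24 GB, which would already fill a 24 GB GPU before any generation overhead.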

Use Cases and Applications of Dolly-v2-12b

Dolly-v2-12b supports many use cases, including:

- Brainstorming: generating innovative ideas for projects or content.

- Classification: assigning texts to their respective labels.

- Summarization: condensing long articles or other documents.
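You give the model each of these use cases as a plain-language instruction. The sketch below pairs each task with a hypothetical example instruction. It sends each one to any callable that behaves like Dolly's instruction pipeline. The model card shows that pipeline returning a list whose first element holds the reply under the `generated_text` key. `EXAMPLE_INSTRUCTIONS` and `run_use_cases` are illustrative names, not part of any library.

```python
from typing import Callable, Dict, List

# Hypothetical example instructions, one per use case listed above.
EXAMPLE_INSTRUCTIONS: Dict[str, str] = {
    "brainstorming": "Give me five ideas for a blog post about home gardening.",
    "classification": (
        "Classify the sentiment of this review as positive, negative, or neutral: "
        "'The battery lasts all day, but the screen scratches easily.'"
    ),
    "summarization": (
        "Summarize the following text in one sentence: Dolly-v2-12b is a "
        "12-billion-parameter model fine-tuned from Pythia-12b on about 15,000 "
        "instruction/response records written by Databricks employees."
    ),
}


def run_use_cases(
    generate_text: Callable[[str], List[Dict[str, str]]],
    instructions: Dict[str, str] = EXAMPLE_INSTRUCTIONS,
) -> Dict[str, str]:
    """Send each instruction to `generate_text` and collect the replies by task name.

    `generate_text` is assumed to behave like Dolly's instruction pipeline: it takes
    an instruction string and returns a list whose first element is a dict holding
    the reply under the "generated_text" key.
    """
    return {
        task: generate_text(instruction)[0]["generated_text"]
        for task, instruction in instructions.items()
    }
```

With a loaded pipeline, `run_use_cases(generate_text)` returns one reply per task. Any stand-in function with the same return shape works for trying this out without a GPU.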

Beyond these tasks, Dolly-v2-12b can be useful across industries, for example in marketing, customer service, and content creation, which makes it a versatile tool.

How to Use Dolly-v2-12b

Using Dolly-v2-12b is straightforward:

- Have a machine with GPUs.

- Install the Hugging Face Transformers library. The model card also recommends installing the accelerate library.

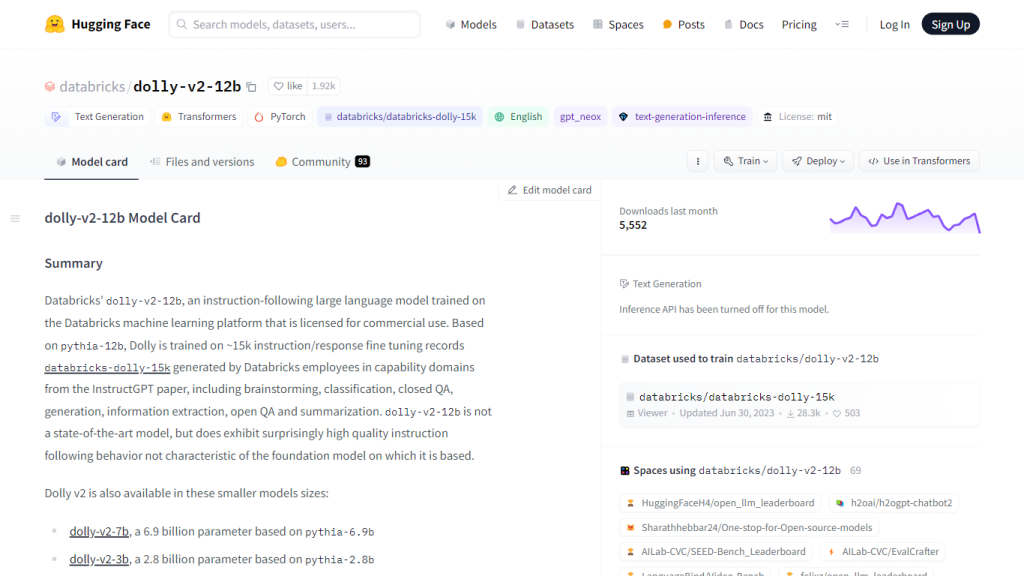

- Load the model from the databricks/dolly-v2-12b repository on Hugging Face, published by Databricks, Inc.

- Run the model on a high-performance GPU using the custom InstructionTextGenerationPipeline provided in the repository. The pipeline is custom code hosted in the repository, so loading it through Transformers requires trust_remote_code=True. Make sure a recent version of the Transformers library is installed.
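The steps above can be sketched in Python, following the usage shown on the model card. `pipeline(...)` with `trust_remote_code=True` loads the repository's custom InstructionTextGenerationPipeline. `torch_dtype=torch.bfloat16` reduces memory where supported. `device_map="auto"` requires the accelerate package and spreads the weights across the available GPUs. The wrapper function `load_dolly` is a hypothetical convenience, not part of the model repository. Version constraints are listed on the model card; check them if loading fails.

```python
# Install first, for example:
#   pip install transformers accelerate torch
MODEL_ID = "databricks/dolly-v2-12b"


def load_dolly(model_id: str = MODEL_ID):
    """Load Dolly's custom InstructionTextGenerationPipeline through Transformers.

    The heavy imports live inside the function so this module can be imported
    on machines where torch and transformers are not installed.
    """
    import torch
    from transformers import pipeline

    return pipeline(
        model=model_id,
        torch_dtype=torch.bfloat16,  # halves weight memory vs. float32; drop if unsupported
        trust_remote_code=True,      # required: the pipeline class lives in the model repo
        device_map="auto",           # let accelerate place the weights on available devices
    )
```

Once loaded, `res = generate_text("Explain to me the difference between nuclear fission and fusion.")` followed by `print(res[0]["generated_text"])` prints the model's answer, where `generate_text = load_dolly()`.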

How Dolly-v2-12b Works

Dolly-v2-12b starts from EleutherAI’s Pythia-12b, a pretrained causal language model. It is then fine-tuned on the roughly 15,000 instruction/response records in the databricks-dolly-15k dataset. This fine-tuning teaches the model to respond to instructions with higher-quality output than its base model. Because it is packaged for the Transformers library, it is ready to deploy and easy to use.
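Fine-tuning on instruction/response pairs means the model expects each instruction wrapped in a fixed template. The custom pipeline applies this template for you. The sketch below shows the idea, assuming the Alpaca-style markers (`### Instruction:`, `### Response:`, `### End`) that the repository's `instruct_pipeline.py` appears to use. Treat the exact wording and whitespace as assumptions and check that file before relying on them. `build_prompt` and `extract_response` are hypothetical helper names.

```python
# Assumed template, modeled on the repo's instruct_pipeline.py;
# verify the exact wording and whitespace against that file.
INTRO_BLURB = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)
INSTRUCTION_KEY = "### Instruction:"
RESPONSE_KEY = "### Response:"
END_KEY = "### End"


def build_prompt(instruction: str) -> str:
    """Wrap a raw instruction in the assumed instruction/response template."""
    return f"{INTRO_BLURB}\n\n{INSTRUCTION_KEY}\n{instruction}\n\n{RESPONSE_KEY}\n"


def extract_response(generated: str) -> str:
    """Return the text after RESPONSE_KEY (or the whole text if absent), cut at END_KEY."""
    response = generated.split(RESPONSE_KEY, 1)[-1]
    return response.split(END_KEY, 1)[0].strip()


print(build_prompt("Name three uses for a paperclip."))
```

The model generates text after the response marker. Cutting at the end marker discards anything it produces past the answer.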

Pros and Cons of Dolly-v2-12b

Pros:

- High-quality instruction following

- Ability to generate complex text, thanks to its size

- Flexible, easy integration into existing systems through Transformers

Cons:

- Not a state-of-the-art model.

- May struggle with complex prompts, especially mathematical operations and open-ended question answering.

- User feedback indicates that the model follows instructions well but falls short on more complex tasks.

Conclusion about Dolly-v2-12b

Dolly-v2-12b stands out for its strong instruction-following ability and its flexibility. Although it is not the most advanced AI model available, it offers a practical, workable option for a wide range of uses. It continues to improve and become more user-friendly with each update.

Dolly-v2-12b Frequently Asked Questions

What is Dolly-v2-12b?

Dolly-v2-12b is a 12-billion-parameter language model based on EleutherAI’s Pythia-12b. It is fine-tuned on about 15,000 instruction/response records created by Databricks employees.

How do I use Dolly-v2-12b?

Run it with the Transformers library on a machine with GPUs. The model repository includes a custom InstructionTextGenerationPipeline for generating responses to instructions.
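If you prefer not to execute repository code through `trust_remote_code=True`, the model card describes an alternative. Download `instruct_pipeline.py`, keep it next to your script, and build the pipeline from a separately loaded model and tokenizer. A sketch of that approach follows. `build_local_pipeline` is a hypothetical wrapper name, and the local `instruct_pipeline` module is assumed to be the downloaded repository file.

```python
MODEL_ID = "databricks/dolly-v2-12b"


def build_local_pipeline(model_id: str = MODEL_ID):
    """Construct InstructionTextGenerationPipeline from a local copy of instruct_pipeline.py.

    Assumes instruct_pipeline.py was downloaded from the model repository into the
    working directory, so trust_remote_code is not needed. Imports are deferred so
    this module can be imported without torch or transformers installed.
    """
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer
    from instruct_pipeline import InstructionTextGenerationPipeline  # local repo file

    # Left padding keeps prompts flush against the generated tokens, which
    # decoder-only models need when batching.
    tokenizer = AutoTokenizer.from_pretrained(model_id, padding_side="left")
    model = AutoModelForCausalLM.from_pretrained(
        model_id, device_map="auto", torch_dtype=torch.bfloat16
    )
    return InstructionTextGenerationPipeline(model=model, tokenizer=tokenizer)
```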

Does Dolly-v2-12b have limitations?

Yes. Dolly-v2-12b isn’t a state-of-the-art model, and it struggles with more complex prompts, in particular mathematical operations and open-ended question answering.

Where can I find the Dolly-v2-12b model?

The Dolly-v2-12b model is available on Hugging Face as databricks/dolly-v2-12b, published by Databricks, Inc.

Does Dolly-v2-12b have any smaller variants?

Yes. Dolly-v2-7b has 6.9 billion parameters and is based on Pythia-6.9b. Dolly-v2-3b has 2.8 billion parameters and is based on Pythia-2.8b.
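The smaller variants trade some quality for lower memory needs. As a rough guide, the sketch below picks the largest variant whose bfloat16 weights fit a given GPU memory budget. The 20% headroom factor is an arbitrary assumption, not a published figure, and `pick_variant` is a hypothetical helper.

```python
from typing import Optional

# Parameter counts for the Dolly v2 family, largest first.
VARIANTS = [
    ("databricks/dolly-v2-12b", 12e9),
    ("databricks/dolly-v2-7b", 6.9e9),
    ("databricks/dolly-v2-3b", 2.8e9),
]

BYTES_PER_PARAM_BF16 = 2
OVERHEAD = 1.2  # assumed 20% headroom for activations and the generation cache


def pick_variant(gpu_memory_gb: float) -> Optional[str]:
    """Return the largest variant whose bfloat16 weights plus headroom fit, else None."""
    for model_id, params in VARIANTS:
        needed_gb = params * BYTES_PER_PARAM_BF16 * OVERHEAD / 1e9
        if needed_gb <= gpu_memory_gb:
            return model_id
    return None
```

Under these assumptions, `pick_variant(24)` returns `"databricks/dolly-v2-7b"`. The 12b model would need about 28.8 GB, while the 7b model needs about 16.6 GB.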