What is Chain of Thought Prompting?

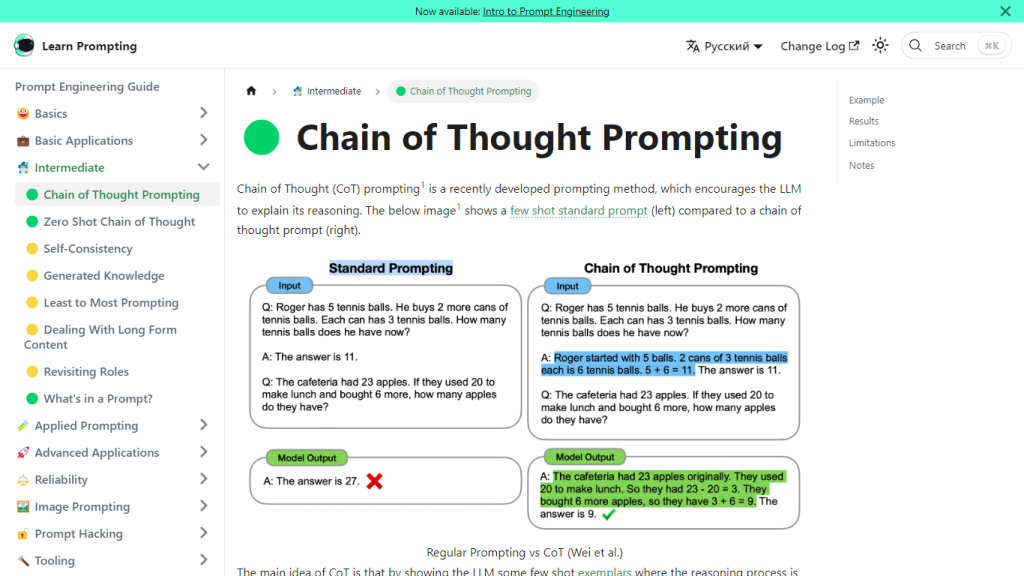

Chain of Thought Prompting is a prompting technique for getting better results from Large Language Models (LLMs). It prompts the model to spell out its reasoning step by step, which improves accuracy on arithmetic, commonsense, and symbolic reasoning tasks. As Wei et al. show in their paper, the gains are largest for models with roughly 100 billion parameters or more. Smaller models benefit less and can produce less logical outputs.

Key Features & Benefits of Chain of Thought Prompting

Higher Accuracy: Chain of Thought Prompting yields more accurate outputs on reasoning tasks.

Explainability: It encourages LLMs to explain their reasoning.

Best Suited for Large Models: The greatest improvements in performance are seen with models that have approximately 100 billion parameters or more.

Comparison: Benchmark results are reported for the technique, including performance on the GSM8K benchmark of grade-school math word problems.

Examples: Chain of Thought Prompting is illustrated with models like GPT-3.

What sets Chain of Thought Prompting apart is that it improves both the transparency and the accuracy of large AI models, making their output more reliable and easier to understand.

Use Cases and Applications of Chain of Thought Prompting

Chain of Thought Prompting is most useful in situations that require multi-step reasoning. Examples include the following:

- Arithmetic Problem Solving: Breaks down complex arithmetic operations into understandable steps that AI models can follow.

- Commonsense Understanding: Strengthens the model in tasks that require commonsense reasoning.

- Symbolic Reasoning: Improves performance on tasks that involve manipulating symbols and structured data.

These capabilities are particularly helpful in sectors such as education, customer service, and research. In education, for example, the technique can give students step-by-step solutions to math problems for better understanding. In customer service, it can produce better responses by explaining the reasons behind a suggestion or an action.

How to Use Chain of Thought Prompting

Using Chain of Thought Prompting involves the following steps:

- Use a very large language model with approximately 100 billion parameters or more.

- Provide a few examples (few-shot exemplars) in which the reasoning is clearly spelled out, and ask the model to explain its answer in steps.

Best practices include keeping the examples clear and detailed, so that the model can pick up the reasoning pattern and reproduce it. Familiarity with the interface of the chosen AI platform also helps in applying the technique effectively.
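The steps above can be sketched in a few lines of Python. This is only a minimal illustration of how a few-shot prompt with worked reasoning might be assembled: the `Exemplar` class, the `build_cot_prompt` function, the "Q:/A:" layout, and the sample questions are all assumptions made for this example, not a format required by any particular model or platform.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example (hypothetical structure for this sketch)."""
    question: str
    reasoning: str  # the step-by-step rationale shown to the model
    answer: str


def build_cot_prompt(exemplars, question):
    """Assemble a few-shot chain-of-thought prompt as plain text."""
    blocks = []
    for ex in exemplars:
        # Each exemplar shows the reasoning first, then the final answer.
        blocks.append(
            f"Q: {ex.question}\n"
            f"A: {ex.reasoning} The answer is {ex.answer}."
        )
    # The new question ends with an open "A:" so the model continues
    # with its own reasoning in the same format.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


# Illustrative exemplar written for this sketch (not taken from a benchmark).
EXEMPLARS = [
    Exemplar(
        question=(
            "A shop has 12 apples and sells 5. It then receives "
            "2 boxes of 4 apples. How many apples does it have?"
        ),
        reasoning=(
            "The shop starts with 12 apples. After selling 5 it has "
            "12 - 5 = 7. Two boxes of 4 apples is 2 * 4 = 8. 7 + 8 = 15."
        ),
        answer="15",
    ),
]

prompt = build_cot_prompt(
    EXEMPLARS,
    "A library has 30 books and lends out 12. It then buys "
    "3 sets of 5 books. How many books does it have?",
)
print(prompt)
```

The resulting string would then be sent to whichever large model is being used. In practice, several exemplars are usually supplied rather than one.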

How Chain of Thought Prompting Works

Few-shot exemplars are at the heart of Chain of Thought Prompting: they guide the model to break down its reasoning process. In effect, the technique prompts large language models, those with approximately 100 billion parameters or more, to lay out their thought process in a coherent, step-by-step way.

In a typical workflow, the model is given examples that spell out the reasoning explicitly, and it uses them as a template for structuring its responses to new queries. This makes the model's output more logically coherent and more accurate.
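Because the model copies the structure of the exemplars, its final answer usually ends up in a predictable place in the response. The sketch below shows one way to pull a numeric answer out of such a response. It assumes the exemplars ended with the phrase "The answer is ...", which is a convention chosen for this example; the `extract_final_answer` helper is hypothetical and not part of any library.

```python
import re

# Matches a number (optionally negative, with commas or decimals)
# following the closing phrase used in the exemplars.
_ANSWER_RE = re.compile(r"The answer is\s+(-?[\d,]+(?:\.\d+)?)")


def extract_final_answer(response):
    """Return the last numeric answer stated in the response, or None."""
    matches = _ANSWER_RE.findall(response)
    if not matches:
        return None
    # Use the last match in case the phrase appears more than once,
    # and drop thousands separators.
    return matches[-1].replace(",", "")


sample = (
    "The shop starts with 12 apples. After selling 5 it has 12 - 5 = 7. "
    "Two boxes of 4 apples is 2 * 4 = 8. 7 + 8 = 15. The answer is 15."
)
print(extract_final_answer(sample))
```

Keeping the reasoning text alongside the extracted answer preserves the transparency benefit: the steps can be inspected whenever an answer looks wrong.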

Pros and Cons of Chain of Thought Prompting

Pros

- Improved accuracy in many AI tasks.

- More transparency: users can see why the model arrived at a particular answer.

- Works best with large models of about 100B parameters or more.

Cons

- Less effective with smaller models, which can produce illogical chains of thought.

- Requires clear, detailed examples to work effectively.

User feedback generally highlights increased accuracy and transparency, although some users point out that the approach needs large models to be effective.
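Since the benefit depends on the model, it can be worth checking on a small set of questions whether chain-of-thought prompts actually beat standard prompts for the model at hand. The following is a rough sketch of such a comparison. The `model` argument is assumed to be any callable that takes a prompt string and returns the response text, and `extract` is any function that pulls the final answer out of a response. Both are placeholders to be supplied by the reader, and the function names are hypothetical.

```python
def accuracy(model, prompts, expected, extract):
    """Fraction of prompts whose extracted answer matches the expected one."""
    if not prompts:
        raise ValueError("at least one prompt is required")
    correct = 0
    for prompt, gold in zip(prompts, expected):
        if extract(model(prompt)) == gold:
            correct += 1
    return correct / len(prompts)


def compare_styles(model, questions, expected,
                   standard_prefix, cot_prefix, extract):
    """Score the same questions under standard and chain-of-thought prompts.

    standard_prefix: few-shot exemplars that show answers only.
    cot_prefix: the same exemplars with the reasoning written out.
    """
    standard = [f"{standard_prefix}\n\nQ: {q}\nA:" for q in questions]
    cot = [f"{cot_prefix}\n\nQ: {q}\nA:" for q in questions]
    return {
        "standard": accuracy(model, standard, expected, extract),
        "chain_of_thought": accuracy(model, cot, expected, extract),
    }
```

If the chain-of-thought score is not higher than the standard score on a representative sample, the model may be too small to benefit from the technique.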

Conclusion about Chain of Thought Prompting

In short, Chain of Thought Prompting is an effective method for improving the correctness and transparency of large AI models. Because it elicits detailed explanations, it brings large gains on tasks that require logical coherence and a clear view of the model's reasoning process.

Future developments may refine the technique further and extend it to smaller models and broader applications. For those interested, Chain of Thought Prompting is a very promising area of exploration in AI and Prompt Engineering.

Chain of Thought Prompting FAQs

What is Chain of Thought Prompting?

It is a method that prompts AI models to explain their reasoning, which often yields more accurate results on tasks such as arithmetic and commonsense reasoning.

With which models does Chain of Thought Prompting work best?

It works best with very large language models of about 100 billion parameters or more, as was the case with prompted PaLM 540B.

How does Chain of Thought Prompting work?

It elicits reasoning from the AI model in a step-by-step manner by using few-shot exemplars where the reasoning process is clearly explained.

Are there any limitations to Chain of Thought Prompting?

Yes. Smaller models tend to generate less logical chains of thought, which can lead to poorer performance than standard prompting.

Are there any courses available on learning Prompt Engineering?

Yes. The Intro to Prompt Engineering and Advanced Prompt Engineering courses cover how to create effective prompts.