What is BIG-bench?

BIG-bench, short for Beyond the Imitation Game Benchmark, is an open, collaborative benchmark project hosted on GitHub. It is designed to evaluate the current capabilities of language models and to extrapolate their future ones. The project comprises more than 200 tasks covering many areas of language understanding and cognitive ability. Researchers and developers can use it to assess language models’ performance and to project development trajectories.

BIG-bench aims to provide a single platform for evaluating language models on a comprehensive and heterogeneous set of tasks. A preprint describing the benchmark and its evaluation on popular language models is available; it explains in more detail how the benchmark works and what kinds of insights it provides.

Key Features & Benefits of BIG-bench

Some of the important features and benefits of BIG-bench include:

- Inclusive Benchmarking Platform: A large, diverse collection of tasks that poses a real challenge to language models.

- Extensive Collection of Tasks: More than 200 tasks covering many aspects of a language model’s capabilities.

- BIG-bench Lite Leaderboard: A lightweight variant of the benchmark that provides a standard measure of model performance at reduced evaluation cost.

- Open Source Contribution: The community can contribute to and improve the benchmark suite.

- Good Documentation: The documentation covers how tasks are created, how models are evaluated, and how a model can participate in the benchmark.

The key benefits of BIG-bench are robust evaluation of language models, collaborative improvement of the benchmark itself, and, through BIG-bench Lite, an inexpensive measure of model performance.

Use Cases and Applications of BIG-bench

BIG-bench can be applied in several ways across different industries:

- Academic Research: Researchers can use BIG-bench to test new hypotheses about language models and their capabilities.

- Technology Development: Developers can use BIG-bench to benchmark and improve language models.

- Business Intelligence: Businesses can use the benchmark to measure how language models perform in applications such as customer support or data analysis.

BIG-bench can be useful in many industries, from healthcare and finance to education. In healthcare, for instance, BIG-bench insights can inform tests of whether a language model correctly interprets and processes medical records.

How to Use BIG-bench

BIG-bench can be used as follows:

-

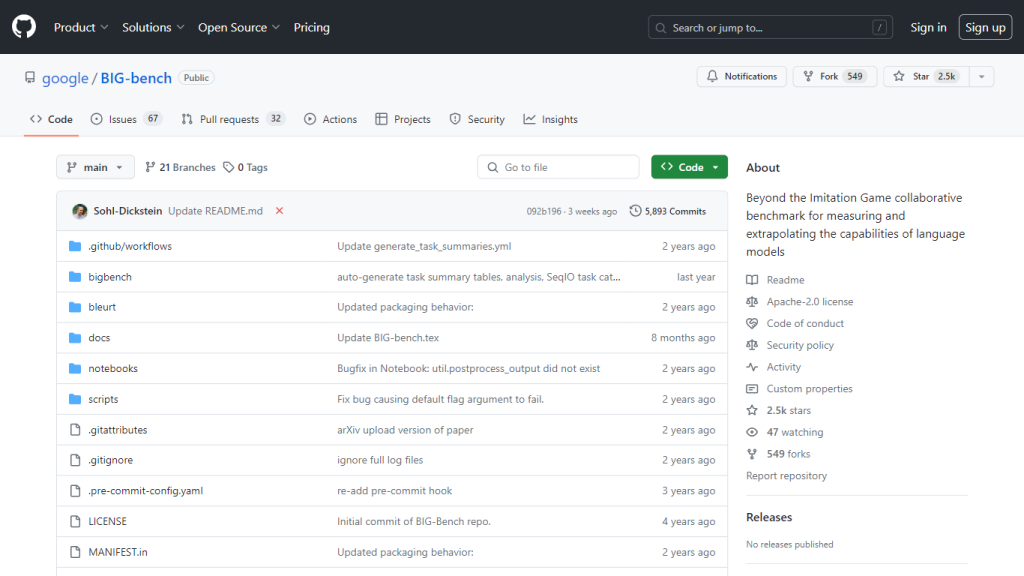

Access the Repository:

View the BIG-bench GitHub repository to access the available tasks and documentation. -

Choose Tasks:

Select over 200 tasks most closely aligning with your evaluation criteria. -

Run Evaluations:

Run evaluations on language models per detailed instructions. -

Contribute:

You can add new tasks, submit model evaluations, or improve the existing suite by contributing to the repository.

BIG-bench also comes with thorough documentation, which is worth reading, and a community that helps users keep up to date with improvements and discussions.
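To make the "Run Evaluations" step more concrete, here is a minimal, self-contained sketch of scoring a model on one task by exact string match. It does not use the BIG-bench code base: the task dictionary, its field names (`name`, `examples`, `input`, `target`), the `exact_match_score` helper, and the `toy_model` stand-in are all assumptions made for illustration. The repository documentation remains the reference for the real task format and evaluation commands.

```python
from typing import Callable, Dict, List

# Hypothetical task made of input/target example pairs.
# The field names are assumptions for this sketch, not a confirmed schema.
task = {
    "name": "toy_arithmetic",
    "examples": [
        {"input": "What is 2 + 3?", "target": "5"},
        {"input": "What is 7 - 4?", "target": "3"},
        {"input": "What is 6 * 2?", "target": "12"},
    ],
}


def exact_match_score(
    model: Callable[[str], str], examples: List[Dict[str, str]]
) -> float:
    """Return the fraction of examples whose output equals the target exactly."""
    if not examples:
        return 0.0
    correct = sum(
        1 for ex in examples if model(ex["input"]).strip() == ex["target"]
    )
    return correct / len(examples)


def toy_model(prompt: str) -> str:
    """Stand-in for a language model: answers two prompts from a lookup table."""
    answers = {"What is 2 + 3?": "5", "What is 7 - 4?": "3"}
    return answers.get(prompt, "unknown")


score = exact_match_score(toy_model, task["examples"])
print(f"{task['name']}: exact match = {score:.2f}")
```

In practice the stand-in would be replaced by a call into a real model, and the scoring rule would be whatever metric the chosen task specifies.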

How BIG-bench Works

BIG-bench is based on a solid technical framework:

The benchmark is a highly heterogeneous set of tasks that measure a broad range of cognitive abilities and several aspects of language understanding. The tasks are carefully selected and can be browsed by keyword or by task name.
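Browsing by keyword or by task name can be pictured as a simple filter over task metadata. The sketch below is illustrative only: the `TASKS` list, the task names, the keywords, and the `find_tasks` helper are hypothetical and are not taken from the repository.

```python
from typing import Any, Dict, List, Optional

# Hypothetical task metadata; names and keywords are illustrative only.
TASKS: List[Dict[str, Any]] = [
    {"name": "toy_arithmetic", "keywords": ["mathematics", "arithmetic"]},
    {"name": "toy_analogies", "keywords": ["analogical reasoning"]},
    {"name": "toy_word_problems", "keywords": ["mathematics", "logical reasoning"]},
]


def find_tasks(
    tasks: List[Dict[str, Any]],
    keyword: Optional[str] = None,
    name_contains: Optional[str] = None,
) -> List[str]:
    """Return sorted names of tasks matching a keyword and/or a name fragment."""
    matches = []
    for task in tasks:
        # Skip tasks that lack the requested keyword.
        if keyword is not None and keyword not in task["keywords"]:
            continue
        # Skip tasks whose name does not contain the requested fragment.
        if name_contains is not None and name_contains not in task["name"]:
            continue
        matches.append(task["name"])
    return sorted(matches)


print(find_tasks(TASKS, keyword="mathematics"))
print(find_tasks(TASKS, name_contains="analog"))
```

Calling `find_tasks` with no filters returns every task name, which is a convenient default when starting to explore a large collection.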

Underlying this are algorithms and models that are continuously tested and improved. The workflow generally includes task creation, model evaluation, and performance analysis. Extensive documentation in the GitHub repository guides users through each step.
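As a rough illustration of the performance-analysis step, per-task scores can be averaged over the whole suite or over a smaller subset of tasks, which is the general idea behind a cheaper, lighter evaluation. The sketch below rests on stated assumptions: the task names and scores are placeholders rather than real results, the `aggregate` helper is hypothetical, and a plain mean is used for simplicity and may differ from how the benchmark actually aggregates scores.

```python
from statistics import mean
from typing import Dict, Iterable, Optional

# Placeholder per-task scores in [0, 1]; these are NOT real benchmark results.
scores: Dict[str, float] = {
    "task_a": 0.80,
    "task_b": 0.40,
    "task_c": 0.60,
    "task_d": 0.20,
}

# Hypothetical smaller subset that is cheaper to evaluate.
subset = ["task_a", "task_c"]


def aggregate(
    task_scores: Dict[str, float], tasks: Optional[Iterable[str]] = None
) -> float:
    """Return the plain mean score over the selected tasks (all tasks by default)."""
    selected = list(task_scores) if tasks is None else list(tasks)
    if not selected:
        raise ValueError("no tasks selected")
    missing = [name for name in selected if name not in task_scores]
    if missing:
        raise KeyError(f"no score recorded for: {missing}")
    return mean(task_scores[name] for name in selected)


print(f"all tasks: {aggregate(scores):.2f}")
print(f"subset:    {aggregate(scores, subset):.2f}")
```

Raising an error for a task without a recorded score, rather than silently skipping it, keeps the aggregate from looking better than the evaluation actually was.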

Pros and Cons of BIG-bench

BIG-bench has advantages as well as potential drawbacks, outlined below:

Advantages:

- Diverse task collection for a comprehensive evaluation

- Open-source nature that lets the community contribute tasks

- Cost-effective evaluation via BIG-bench Lite

Possible Drawbacks:

- The tasks may be complex for people new to the field.

- Continuous updates require constant attention.

User reviews usually emphasize the benchmark’s wide applicability to language model evaluation, although a few note that it has a steep learning curve.

Conclusion for BIG-bench

BIG-bench is therefore a very useful tool for language model research and development. Its large collection of tasks, collaborative nature, and inexpensive evaluation options make it distinctive among the tools available in this domain. It may involve a steep learning curve, but the benefits outweigh the drawbacks. Going forward, community contributions and updates should improve these features further.

BIG-bench FAQs

What is BIG-bench?

BIG-bench (Beyond the Imitation Game Benchmark) is a collaborative benchmark for measuring and extrapolating language models’ capabilities.

How many tasks does BIG-bench have?

BIG-bench has a suite of more than 200 tasks that together benchmark many aspects of language models.

What is BIG-bench Lite?

BIG-bench Lite is a subset of BIG-bench tasks that provides a canonical measure of model performance at a lower cost.

How can I contribute to BIG-bench?

Contributions, whether new tasks, model evaluations, or improvements to the benchmark suite itself, are highly valued and should be submitted via the GitHub repository.

How do I use the BIG-bench tasks and results?

The full set of tasks, along with all results, is housed in the BIG-bench GitHub repository. This includes full instructions and leaderboards.