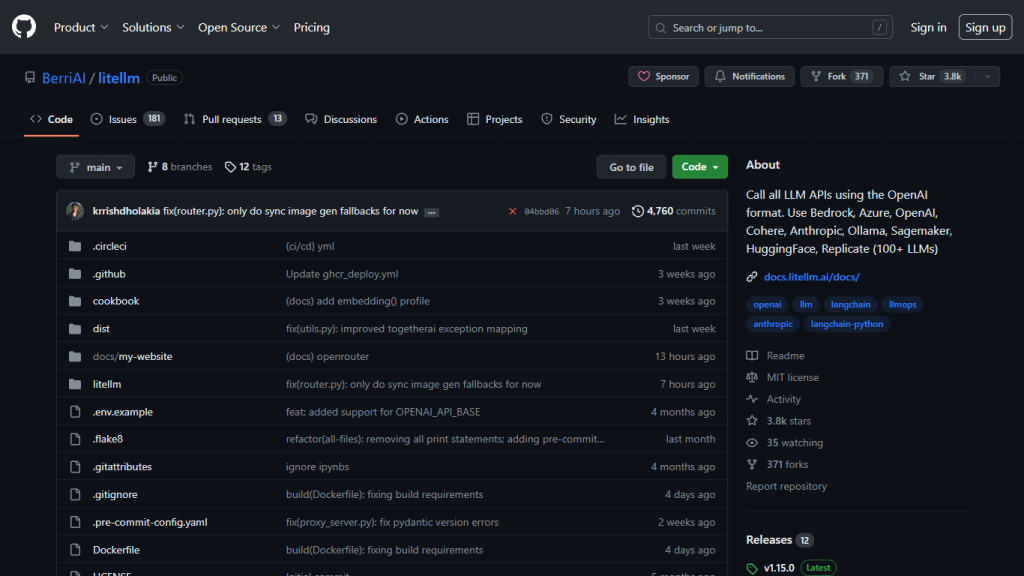

What is LiteLLM?

LiteLLM is an open-source Python library and proxy server that lets applications call many large language model (LLM) APIs through one consistent, OpenAI-style format. It supports providers such as Bedrock, Azure, OpenAI, Cohere, Anthropic, Ollama, SageMaker, Hugging Face, and Replicate, among others, so developers no longer need to adapt their code to each provider’s API style.

LiteLLM’s Key Features & Benefits

Detailed List of Features

- Consistent Output Format: Text responses from every provider come back in the same OpenAI-style structure, at ['choices'][0]['message']['content'].

- Exception Mapping: Maps common exceptions across providers to OpenAI exception types.

- Load Balancing: Distributes requests across multiple deployments of a model; the project cites throughput of over 1,000 requests per second.

- Multi-Provider Support: Gives access to over 100 LLM providers through a single OpenAI-style format.

- Input Translation: Translates each request into the format expected by the provider’s completion and embedding endpoints.
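The exception-mapping idea can be illustrated with a small, self-contained sketch. It is not LiteLLM’s internal code: the provider names, error codes, and exception classes below are hypothetical stand-ins, loosely echoing OpenAI-style names such as `RateLimitError`. What it shows is that callers catch one shared exception type instead of a different one per provider.

```python
"""Hypothetical sketch of cross-provider exception mapping.

Provider names, error codes, and classes are illustrative assumptions,
not LiteLLM's actual implementation.
"""
from typing import Dict, Tuple, Type


class LLMError(Exception):
    """Shared base class that callers can catch for any provider."""


class AuthenticationError(LLMError):
    """Bad or missing credentials."""


class RateLimitError(LLMError):
    """Too many requests; the caller should back off."""


class ServiceUnavailableError(LLMError):
    """The provider is overloaded or down."""


# Hypothetical provider-specific error codes -> shared exception types.
ERROR_MAP: Dict[Tuple[str, str], Type[LLMError]] = {
    ("provider_a", "invalid_api_key"): AuthenticationError,
    ("provider_a", "rate_limited"): RateLimitError,
    ("provider_b", "permission_denied"): AuthenticationError,
    ("provider_b", "too_many_requests"): RateLimitError,
    ("provider_b", "overloaded"): ServiceUnavailableError,
}


def map_provider_error(provider: str, code: str, message: str) -> LLMError:
    """Translate one provider's error code into a shared exception instance."""
    # Unknown codes fall back to the generic base class.
    exc_type = ERROR_MAP.get((provider, code), LLMError)
    return exc_type(f"{provider}: {message}")


if __name__ == "__main__":
    try:
        raise map_provider_error("provider_b", "too_many_requests", "slow down")
    except RateLimitError as err:
        # The same handler works whichever provider raised the error.
        print("Back off and retry:", err)
```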

Benefits of Using LiteLLM

LiteLLM streamlines the integration of multiple LLM APIs. Because responses share one format and common errors map to OpenAI exception types, developers learn a single interface and handle failures in one place, which shortens the learning curve and reduces integration mistakes. Load balancing across deployments helps maintain throughput and availability under heavy traffic, and broad provider support lets teams choose or switch models without rewriting their integration code.

LiteLLM’s Use Cases and Applications

Specific Examples of How LiteLLM Can Be Used

LiteLLM can be used in scenarios such as customer support automation, content generation, language translation, and data analysis. For instance, a customer service platform can send requests to several AI models through LiteLLM and receive every answer in the same format, which makes it easier to compare models or switch between them without changing the support workflow.

Industries and Sectors That Can Benefit

Many industries can benefit from LiteLLM, including technology, healthcare, finance, education, and marketing. In healthcare, for example, applications built on LiteLLM can help generate patient reports and summarize medical records. In education, they can help create personalized learning materials and tutoring systems.

How to Use LiteLLM

Step-by-Step Guide on Usage

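- Install the LiteLLM Python package (pip install litellm), or deploy the LiteLLM proxy server if several applications should share one gateway.

- Configure the API keys for the providers you plan to use, typically as environment variables; if you use the proxy, keys for your application can be issued through it.

- Choose a provider and model by passing the corresponding model name to LiteLLM, following the provided documentation.

- Send requests using OpenAI-style API calls and receive responses in the same format from every provider.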
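In the Python SDK, sending a request looks roughly like the sketch below. It assumes the `litellm` package is installed and that provider keys such as `OPENAI_API_KEY` or `ANTHROPIC_API_KEY` are set as environment variables. The model names are examples that may need updating, and the helper names `build_messages` and `ask` are hypothetical; `completion` is LiteLLM’s own function.

```python
"""Minimal LiteLLM usage sketch (assumes `pip install litellm` and provider keys in the environment)."""
from typing import Dict, List


def build_messages(user_prompt: str, system_prompt: str = "") -> List[Dict[str, str]]:
    """Build an OpenAI-style chat message list (hypothetical helper)."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def ask(model: str, user_prompt: str, system_prompt: str = "") -> str:
    """Send one chat request through LiteLLM and return the reply text."""
    # Imported lazily so this module loads even where litellm is not installed.
    from litellm import completion

    response = completion(model=model, messages=build_messages(user_prompt, system_prompt))
    # The response uses the OpenAI shape regardless of which provider answered.
    return response["choices"][0]["message"]["content"]


# Example calls (require network access and valid keys; model names are examples):
# print(ask("gpt-3.5-turbo", "Summarize LiteLLM in one sentence."))
# print(ask("anthropic/claude-3-haiku-20240307", "Summarize LiteLLM in one sentence."))
```

Only the model string changes between providers; the request and the way the answer is read stay the same.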

Tips and Best Practices

- Store API keys securely, for example in environment variables or a secrets manager, and never hard-code them in source files.

- Regularly monitor your API usage to manage costs effectively.

- Test responses from different providers to identify the best fit for your application.
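One way to follow the first tip is to read keys from the environment at runtime and mask them before they reach any log. The sketch relies on nothing LiteLLM-specific beyond the fact that LiteLLM can pick up provider keys from environment variables such as `OPENAI_API_KEY`; the helper names `require_env_key` and `masked` are hypothetical.

```python
"""Read provider API keys from the environment and keep them out of logs."""
import os


class MissingKeyError(RuntimeError):
    """Raised when a required API key is not configured."""


def require_env_key(var_name: str) -> str:
    """Return the key stored in `var_name`, failing fast if it is missing or blank."""
    value = os.environ.get(var_name, "").strip()
    if not value:
        raise MissingKeyError(f"{var_name} is not set; export it or load it from a secrets manager")
    return value


def masked(key: str, visible: int = 4) -> str:
    """Return a log-safe form of `key` that shows only its last `visible` characters."""
    if visible <= 0 or len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]


if __name__ == "__main__":
    try:
        key = require_env_key("OPENAI_API_KEY")
        print("Using key", masked(key))
    except MissingKeyError as err:
        print(err)
```

Failing at startup with a clear message is usually easier to debug than an authentication error from the provider later on.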

User Interface and Navigation

LiteLLM is used mainly through code, and the proxy server also includes a web-based admin UI for managing keys, models, and usage. Documentation and user guides cover the setup and integration process.

How LiteLLM Works

Technical Overview of the Underlying Technology

LiteLLM operates by translating input requests into the specific formats required by different LLM providers. It ensures that the responses are returned in a consistent format, simplifying the integration process for developers. The platform supports high-volume requests through its load balancing feature, distributing traffic across multiple deployments.
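Load balancing across deployments can be pictured as a router that maps one model alias to several endpoints and picks among them. The sketch below uses plain round-robin selection as a swapped-in, simplified strategy; LiteLLM’s own `Router` class, configured with a `model_list`, provides its own routing strategies. The `Deployment` fields, names, and URLs here are hypothetical.

```python
"""Round-robin load balancing sketch (a simplified, hypothetical strategy)."""
import itertools
from dataclasses import dataclass
from typing import Dict, Iterator, List


@dataclass(frozen=True)
class Deployment:
    """One concrete endpoint that serves a model (hypothetical fields)."""
    name: str
    api_base: str


class RoundRobinRouter:
    """Spread requests for each model alias evenly across its deployments."""

    def __init__(self, deployments: Dict[str, List[Deployment]]) -> None:
        for alias, group in deployments.items():
            if not group:
                raise ValueError(f"model alias {alias!r} has no deployments")
        # itertools.cycle repeats each deployment list endlessly, in order.
        self._cycles: Dict[str, Iterator[Deployment]] = {
            alias: itertools.cycle(group) for alias, group in deployments.items()
        }

    def pick(self, model_alias: str) -> Deployment:
        """Return the next deployment for this alias (KeyError if the alias is unknown)."""
        return next(self._cycles[model_alias])


if __name__ == "__main__":
    router = RoundRobinRouter({
        "chat-model": [
            Deployment("azure-eu", "https://eu.example.com"),
            Deployment("azure-us", "https://us.example.com"),
        ]
    })
    print([router.pick("chat-model").name for _ in range(3)])
    # ['azure-eu', 'azure-us', 'azure-eu']
```

Round-robin ignores latency and current load; production routers typically weigh those signals, which is why strategy choice matters under heavy traffic.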

Explanation of Algorithms and Models Used

LiteLLM does not train or host its own models; it acts as a bridge to LLM providers. The models and algorithms behind each response come from the provider being called, such as OpenAI or Hugging Face, so users can reach whichever models those providers offer.

Workflow and Process Description

When a request is sent to LiteLLM, it is first translated into the appropriate format for the selected provider. The request is then routed to that provider’s endpoint, and the response is converted back into the standard format before being returned to the user, so the application handles a single request and response shape no matter which provider served the call.
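The translate, route, and normalize steps can be sketched with plain dictionaries. The provider format here is hypothetical, loosely modeled on APIs that take the system prompt as a separate field and return a list of content blocks. The `send` callable stands in for the HTTP call, and `fake_send` is a local stub so the example runs offline; LiteLLM’s real translation layer handles far more cases than this.

```python
"""Hypothetical translate -> route -> normalize pipeline (not LiteLLM's code)."""
from typing import Any, Callable, Dict, List

Message = Dict[str, str]
Payload = Dict[str, Any]


def to_provider_payload(model: str, messages: List[Message], max_tokens: int) -> Payload:
    """Step 1: convert OpenAI-style messages into a hypothetical provider format
    that expects the system prompt in its own field."""
    system = "\n".join(m["content"] for m in messages if m["role"] == "system")
    chat = [m for m in messages if m["role"] != "system"]
    payload: Payload = {"model": model, "messages": chat, "max_tokens": max_tokens}
    if system:
        payload["system"] = system
    return payload


def normalize(reply: Payload, model: str) -> Payload:
    """Step 3: reshape the provider reply into an OpenAI-style chat completion."""
    text = "".join(b["text"] for b in reply["content"] if b["type"] == "text")
    return {
        "object": "chat.completion",
        "model": model,
        "choices": [{
            "index": 0,
            "message": {"role": "assistant", "content": text},
            "finish_reason": "stop",  # simplified: real code would map the provider's stop reason
        }],
    }


def complete(model: str, messages: List[Message],
             send: Callable[[Payload], Payload], max_tokens: int = 256) -> Payload:
    """Translate the request, route it through `send`, then normalize the reply."""
    payload = to_provider_payload(model, messages, max_tokens)
    reply = send(payload)  # Step 2: in practice, an HTTP request to the provider's endpoint
    return normalize(reply, model)


def fake_send(payload: Payload) -> Payload:
    """Offline stand-in for a provider: echoes the last user message."""
    last = payload["messages"][-1]["content"]
    return {"content": [{"type": "text", "text": f"echo: {last}"}]}


if __name__ == "__main__":
    result = complete(
        "demo-model",
        [{"role": "system", "content": "Be brief."}, {"role": "user", "content": "Hi"}],
        fake_send,
    )
    print(result["choices"][0]["message"]["content"])  # echo: Hi
```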

LiteLLM Pros and Cons

Advantages of Using LiteLLM

- Streamlined integration process with multiple LLM providers.

- Consistent output formats reduce errors and improve efficiency.

- High performance with load balancing for large-scale applications.

- Flexibility to choose the best AI models for specific use cases.

Potential Drawbacks or Limitations

- Dependency on external LLM providers for model quality and performance.

- Potential costs associated with high-volume API usage.
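Because costs scale with token volume, a back-of-the-envelope estimate helps before committing to high-volume usage. The prices below are hypothetical placeholders, not any provider’s actual rates: at $0.50 per million input tokens and $1.50 per million output tokens, 10,000 requests a day with 500 input and 200 output tokens each comes to about $165 over 30 days. LiteLLM also ships cost-tracking helpers such as `completion_cost`; its documentation describes how they use real per-model prices.

```python
"""Back-of-the-envelope LLM cost estimate (hypothetical prices)."""
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    """USD per one million tokens. Placeholder values, not real rates."""
    input_per_million: float
    output_per_million: float


def estimated_cost(price: Price, requests_per_day: int,
                   input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Estimated spend in USD over `days`, assuming every request has the same token counts."""
    per_request = (input_tokens * price.input_per_million
                   + output_tokens * price.output_per_million) / 1_000_000
    return per_request * requests_per_day * days


if __name__ == "__main__":
    cost = estimated_cost(Price(0.50, 1.50), requests_per_day=10_000,
                          input_tokens=500, output_tokens=200)
    print(f"${cost:,.2f}")  # $165.00
```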

User Feedback and Reviews

Users have praised LiteLLM for its ease of use and the ability to integrate multiple LLM providers without extensive modifications. However, some have noted the dependency on external providers as a potential drawback.

LiteLLM Pricing

Detailed Pricing Plans and Options

The core LiteLLM library and proxy server are open source and free to use, and paid enterprise plans add advanced features and support. Usage of the underlying models is billed separately by each LLM provider. Detailed pricing information can be found on the LiteLLM website.

Comparison with Competitors

Compared with many other integration tools, LiteLLM’s main differentiators are its support for over 100 LLM providers and its consistent output format, which make it a versatile option for developers who work with several providers.

Value for Money Analysis

Given its features and broad provider support, LiteLLM offers good value for money. Because the basic features are free, users can start with minimal investment, which makes it accessible to developers and businesses of all sizes.

Conclusion about LiteLLM

Summary of Key Points

LiteLLM is a practical tool that simplifies the integration of various LLM APIs through a consistent OpenAI format. Features such as consistent output formats, exception mapping, and load balancing make it valuable for developers who work with multiple AI language models.

Final Thoughts and Recommendations

For developers and businesses seeking a streamlined and efficient way to integrate multiple LLM providers, LiteLLM is highly recommended. Its ease of use, flexibility, and high performance make it a valuable asset in the AI development toolkit.

Future Developments and Updates

LiteLLM is continuously evolving, with plans to support additional LLM providers and introduce new features to enhance user experience. Keeping an eye on updates and new releases will ensure users can take full advantage of the platform’s capabilities.

LiteLLM FAQs

Commonly Asked Questions

- Is LiteLLM free to use?

- Largely, yes. The core library and proxy server are open source and free; paid enterprise plans add advanced features and support. Model usage is billed separately by the LLM providers you call.

- How do I integrate LiteLLM into my application?

- Integration is straightforward. Install the Python package (pip install litellm) or deploy the LiteLLM proxy, set the API keys for the providers you want to use, and follow the documentation to send OpenAI-style requests from your application.

- Which LLM providers does LiteLLM support?

- LiteLLM supports over 100 LLM providers, including Bedrock, Azure, OpenAI, Cohere, Anthropic, Ollama, Sagemaker, HuggingFace, and Replicate.
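In LiteLLM’s Python SDK, the provider is usually selected by a prefix on the model string, such as `ollama/llama2`, or `huggingface/` followed by a Hugging Face repository ID, while many OpenAI models can be named without a prefix. The hypothetical helper `split_model` below only illustrates that naming convention; LiteLLM’s own provider-detection logic is more involved.

```python
"""Hypothetical helper illustrating the 'provider/model' naming convention."""
from typing import Optional, Tuple


def split_model(model: str, default_provider: Optional[str] = None) -> Tuple[Optional[str], str]:
    """Split 'provider/model-name' at the first slash.

    Everything after the first slash is kept intact, so model IDs that
    themselves contain slashes (such as Hugging Face repo IDs) survive.
    Names without a slash fall back to `default_provider`.
    """
    provider, sep, name = model.partition("/")
    if not sep:
        return default_provider, model
    return provider, name


if __name__ == "__main__":
    print(split_model("ollama/llama2"))  # ('ollama', 'llama2')
    print(split_model("huggingface/meta-llama/Llama-2-7b-chat-hf"))
    print(split_model("gpt-3.5-turbo", default_provider="openai"))  # ('openai', 'gpt-3.5-turbo')
```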

Detailed Answers and Explanations

For detailed integration guides and troubleshooting tips, refer to the LiteLLM documentation. The platform provides extensive resources to help users get started and make the most of its features.

Troubleshooting Tips

- Ensure your API key is correctly entered and has the necessary permissions.

- Check the provider’s status to ensure there are no outages or maintenance periods.

- Review the API documentation for specific error codes and their resolutions.
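When a call fails, the HTTP status code usually points to the right troubleshooting step. This hypothetical lookup table uses standard HTTP status meanings; individual providers may return other codes or add details in the error body, so treat the hints as starting points rather than definitive diagnoses.

```python
"""Map common HTTP status codes to troubleshooting hints (hypothetical helper)."""
from typing import Dict

# Generic HTTP status meanings; individual providers may use additional codes.
HINTS: Dict[int, str] = {
    400: "Bad request: check the model name and request parameters.",
    401: "Unauthorized: the API key is missing, mistyped, or revoked.",
    403: "Forbidden: the key lacks permission for this model or resource.",
    404: "Not found: the model or endpoint name may be wrong.",
    429: "Rate limited: slow down, retry with backoff, or raise your quota.",
    500: "Provider-side error: retry later.",
    503: "Service unavailable: check the provider's status page for outages.",
}


def troubleshoot(status_code: int) -> str:
    """Return a first troubleshooting hint for an HTTP status code."""
    if status_code in HINTS:
        return HINTS[status_code]
    if 500 <= status_code < 600:
        # Any other 5xx is treated as a provider-side problem.
        return "Server error: check the provider's status page and retry later."
    return "Unrecognized status: see the provider's error documentation."


if __name__ == "__main__":
    for code in (401, 429, 502):
        print(code, troubleshoot(code))
```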