What is ALBERT?

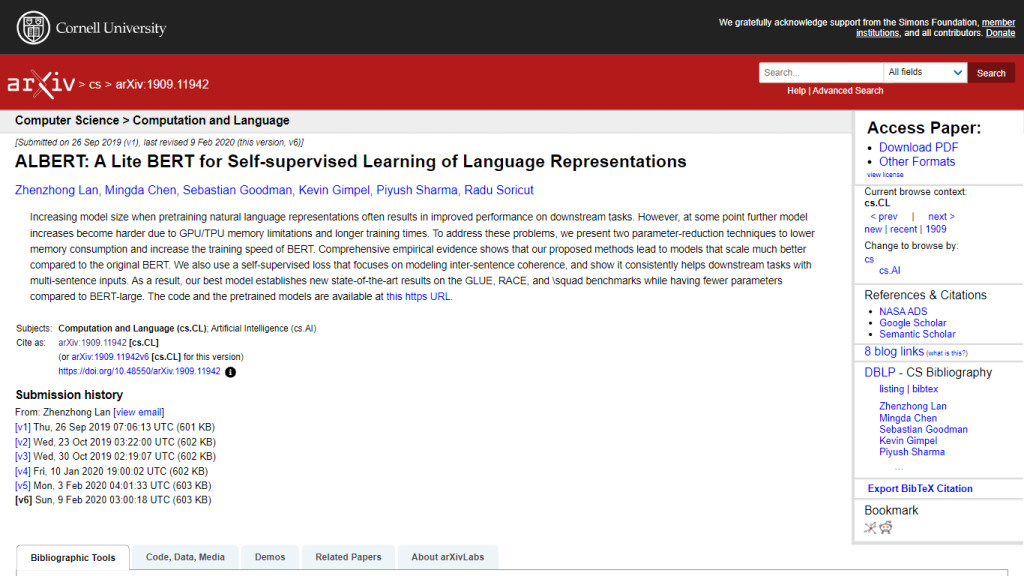

ALBERT, short for “A Lite BERT”, is a revision of the widely used BERT model for natural language processing. Zhenzhong Lan et al. introduced it in an arXiv preprint that presents two parameter-reduction techniques. Together they lower memory consumption and speed up BERT training while preserving performance.

ALBERT tackles two challenges that come with larger models: limited GPU/TPU memory and long training times. The empirical results in the paper show that ALBERT outperforms BERT on benchmarks such as GLUE, RACE, and SQuAD, reaching state-of-the-art results with fewer parameters. The paper also proposes a self-supervised loss function that improves the model's grasp of inter-sentence coherence, which yields significant gains on tasks with multi-sentence inputs. The code and pre-trained models for ALBERT are publicly available, which has encouraged adoption in the NLP community.

Key Features & Benefits of ALBERT

Top Features

- Parameter-Reduction Techniques: Two techniques that reduce memory consumption and speed up BERT training.

- Better Scaling: ALBERT scales much better than the original BERT, even with significantly fewer parameters.

- State-of-the-Art Results: New state-of-the-art results on GLUE, RACE, and SQuAD benchmarks.

- Novel Self-Supervised Loss: A new loss function that encourages better inter-sentence coherence modeling.

- Open Source Models: The pre-trained models and codebase are open source under the Apache license and available for community use.

Benefits

- Reduced Memory Footprint: Makes better use of available hardware resources.

- Faster Training: Reduces the time needed to train the model.

- Better Performance: Reaches state-of-the-art scores on several benchmarks with fewer parameters.

- Better Coherence Among Sentences: Improves comprehension and processing of text that spans more than one sentence.

How to Use ALBERT and Applications

ALBERT works well for a variety of natural language processing tasks, such as language modeling, text classification, and question answering. Its improved handling of multi-sentence inputs makes it suitable for applications that require a deep understanding of context, such as the following:

- Customer Support: Automate and improve the processes of customer service.

- Content Moderation: Find and manage inappropriate content.

- Sentiment Analysis: Analyze customer feedback and sentiment in real time.

- Document Summarization: Condense long documents while retaining the key information.

Industries such as finance, healthcare, and e-commerce can use ALBERT to process text more efficiently and accurately.

Getting Started with ALBERT

How to Run

- Download the code and a pre-trained model from the ALBERT GitHub repository.

- Install the dependencies listed in the repository's documentation.

- Load the pre-trained ALBERT model into your environment.

- Fine-tune the model on your dataset for a particular task.

- Deploy the model into your application or service.
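As one possible shortcut for the loading step, the sketch below uses the Hugging Face `transformers` library instead of the original repository. This is a swapped-in route, not the one the steps above describe. It assumes `transformers` and `torch` are installed and that the `albert-base-v2` checkpoint can be downloaded; `load_albert` and `encode` are hypothetical helper names.

```python
MODEL_NAME = "albert-base-v2"  # assumed checkpoint name on the Hugging Face Hub


def load_albert(model_name: str = MODEL_NAME):
    """Load a tokenizer and a pre-trained ALBERT encoder.

    Weights are downloaded on first use, so this needs network access.
    """
    # Imported lazily so defining the helper does not require the library.
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()  # inference mode: disables dropout
    return tokenizer, model


def encode(text: str, tokenizer, model):
    """Return the final-layer hidden states for one piece of text."""
    import torch

    inputs = tokenizer(text, return_tensors="pt")
    with torch.no_grad():  # no gradients needed for plain inference
        outputs = model(**inputs)
    return outputs.last_hidden_state
```

Calling `tokenizer, model = load_albert()` followed by `encode("ALBERT is a lite BERT.", tokenizer, model)` would give one vector per input token. Fine-tuning for a specific task adds a task head and a training loop on top of this encoder.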

Tips and Best Practices

- Use the pre-trained models to save time and computational resources.

- Fine-tune the model on the task-specific dataset to get the best results.

- Monitor model performance and adjust hyperparameters as needed.

How ALBERT Works

ALBERT introduces two parameter-reduction techniques that make BERT more efficient:

- Factorized Embedding Parameterization: Factorizes the large vocabulary embedding matrix into two smaller matrices, so the embedding size can be much smaller than the hidden size.

- Cross-Layer Parameter Sharing: Reuses the same weights across layers, which reduces the overall number of parameters.
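To make the effect of the two techniques concrete, here is a rough parameter-count sketch. The sizes (a 30,000-token vocabulary, hidden size 768, embedding size 128, 12 layers) and the per-layer figure are illustrative assumptions, not numbers from this article, and the helper names are hypothetical.

```python
def embedding_params(vocab_size: int, hidden_size: int) -> int:
    """Unfactorized embedding table: one hidden-size vector per token (V x H)."""
    return vocab_size * hidden_size


def factorized_embedding_params(vocab_size: int, embed_size: int, hidden_size: int) -> int:
    """Factorized version: a V x E lookup table plus an E x H projection."""
    return vocab_size * embed_size + embed_size * hidden_size


def encoder_params(per_layer_params: int, num_layers: int, shared: bool) -> int:
    """With cross-layer sharing, one layer's weights are reused at every depth."""
    return per_layer_params if shared else per_layer_params * num_layers


# Assumed, illustrative sizes.
V, H, E, L = 30_000, 768, 128, 12
PER_LAYER = 7_000_000  # hypothetical round number for one Transformer layer

print(embedding_params(V, H))                      # 23040000
print(factorized_embedding_params(V, E, H))        # 3938304
print(encoder_params(PER_LAYER, L, shared=False))  # 84000000
print(encoder_params(PER_LAYER, L, shared=True))   # 7000000
```

Under these assumptions the embedding parameters shrink by roughly a factor of six, and the encoder stores one layer's worth of weights instead of twelve. Sharing reduces what must be stored, but every layer is still executed in the forward pass.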

ALBERT also proposes a new self-supervised loss function focused on inter-sentence coherence, which is crucial for many tasks with multi-sentence inputs. The workflow has two stages: the model is first pre-trained on a large text corpus and then fine-tuned on specific tasks to achieve state-of-the-art results.
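The ALBERT paper calls this loss sentence-order prediction (SOP): the model sees two consecutive text segments and predicts whether they are in their original order or have been swapped. Below is a minimal sketch of how such training pairs could be built. The function name, the label convention (1 = original order, 0 = swapped), and the example segments are assumptions for illustration, not the authors' implementation.

```python
import random
from typing import List, Tuple


def make_sop_examples(
    segments: List[str], rng: random.Random
) -> List[Tuple[str, str, int]]:
    """Build (segment_a, segment_b, label) triples from consecutive segments.

    label 1: the pair is in its original order (positive example)
    label 0: the same pair with the order swapped (negative example)
    """
    examples = []
    for first, second in zip(segments, segments[1:]):
        if rng.random() < 0.5:
            examples.append((first, second, 1))
        else:
            examples.append((second, first, 0))
    return examples


# Hypothetical document split into consecutive segments.
doc = [
    "The model is pre-trained on a large corpus.",
    "It is then fine-tuned on a downstream task.",
    "Finally, it is evaluated on a held-out set.",
]
for seg_a, seg_b, label in make_sop_examples(doc, random.Random(0)):
    print(label, "|", seg_a, "|", seg_b)
```

Because both segments in every pair come from the same document, the classifier cannot rely on topic differences and has to learn ordering and coherence cues.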

Pros and Cons of ALBERT

Advantages

- Lower memory usage and faster training.

- High benchmark performance with fewer parameters.

- Better handling of multi-sentence inputs due to the self-supervised loss function.

- Code and pre-trained models are publicly available, which makes adoption easy.

Possible Downsides

- It may need fine-tuning on specific tasks to reach its best performance.

- The initial setup and installation of dependencies can be cumbersome for beginners.

Conclusion about ALBERT

ALBERT is a key development in natural language processing because it is more efficient and scalable than BERT. It uses less memory, trains more quickly, and shows state-of-the-art performance on many benchmarks, which makes it useful for a wide range of applications. The availability of pre-trained models and open-source code makes it more accessible still. As the NLP community continues to innovate, ALBERT is likely to remain a foundation for more advanced and powerful language models.

Common Questions about ALBERT

What is ALBERT?

ALBERT is an optimized version of BERT for self-supervised learning of language representations. It uses fewer parameters and trains more efficiently.

What is the major advantage that ALBERT has compared to the original BERT?

It consumes less memory, trains significantly faster, scales much better, and achieves state-of-the-art benchmark results with far fewer parameters.

Does ALBERT handle multi-sentence input tasks?

Yes. ALBERT includes a self-supervised loss function focused on inter-sentence coherence, which helps improve performance on multi-sentence input tasks.

Where do I get the code and pre-trained models for ALBERT?

The code and pre-trained models for ALBERT can be found in the ALBERT GitHub repository.

What kind of tasks will benefit from ALBERT?

Tasks involving natural language understanding and processing, such as language modeling, text classification, and question answering, benefit from ALBERT.