What is lm-evaluation?

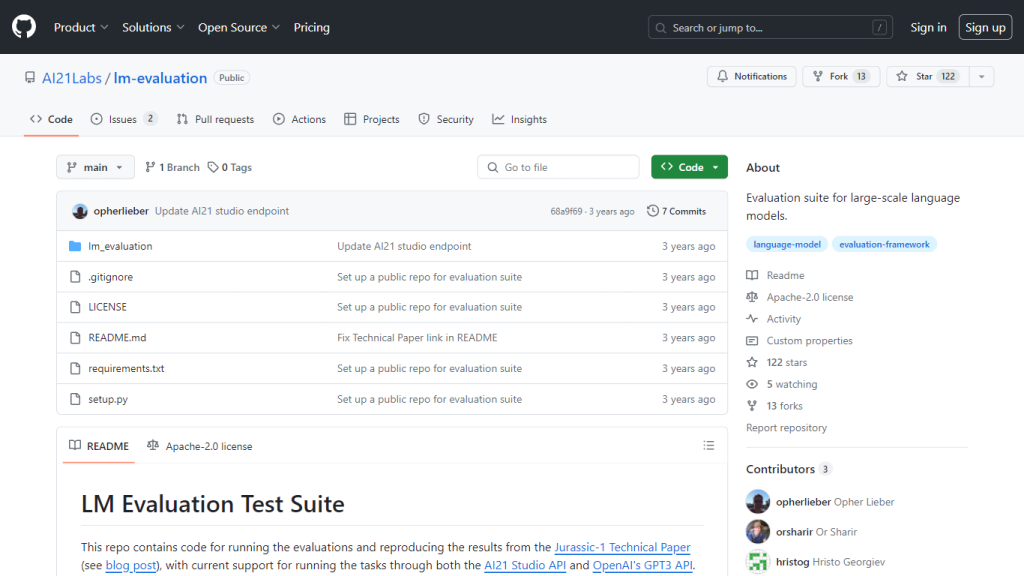

AI21 Labs’ lm-evaluation is a suite of tests for evaluating the performance of large-scale language models. It is aimed at developers and researchers who want to measure whether changes to a language model actually improve its capabilities. It runs several kinds of evaluation tasks and integrates with both the AI21 Studio API and OpenAI’s GPT-3 API.

It’s an open-source project hosted on GitHub, and it invites contributions from users. Setup is simple, and models can be tested against a range of tasks, including the multiple-choice and document probability tasks described in the Jurassic-1 technical paper.

lm-evaluation Key Features & Benefits

Diverse Evaluation: lm-evaluation currently supports multiple-choice and document probability tasks, giving a broader view of a language model’s capabilities.

Multiple Providers: It works with both the AI21 Studio API and OpenAI’s GPT-3 API, so the same tasks can be run across providers.

Open Source: The project is open source and accepts community contributions through GitHub.

Detailed Documentation: Installation and usage instructions are clear and detailed, making the library accessible to users with a wide range of experience.

Accessibility: The license and repository insights are published, which makes the project easier to understand and more transparent.
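
As a rough illustration of the multiple-choice task type, the sketch below scores each candidate answer by the log-probability a model assigns to it and picks the highest-scoring one. This is a common way to evaluate multiple-choice questions with a language model, not necessarily the exact procedure lm-evaluation follows. The `token_logprobs` interface, the `toy_logprobs` stand-in model, and the length-normalization option are all assumptions made for the example.

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: given a context and a continuation, return one
# log-probability per continuation token. A real provider API would sit behind it.
TokenLogprobs = Callable[[str, str], Sequence[float]]
Example = Tuple[str, Sequence[str], int]  # (context, choices, index of gold answer)


def pick_answer(context: str, choices: Sequence[str],
                token_logprobs: TokenLogprobs,
                length_normalize: bool = True) -> int:
    """Return the index of the choice with the highest (normalized) log-probability."""
    scores: List[float] = []
    for choice in choices:
        logprobs = token_logprobs(context, choice)
        score = sum(logprobs)
        if length_normalize and len(logprobs) > 0:
            # Average per token so longer answers are not penalized for length alone.
            score /= len(logprobs)
        scores.append(score)
    return max(range(len(scores)), key=lambda i: scores[i])


def accuracy(examples: Sequence[Example], token_logprobs: TokenLogprobs) -> float:
    """Fraction of examples where the picked answer matches the gold index."""
    correct = sum(
        pick_answer(context, choices, token_logprobs) == gold
        for context, choices, gold in examples
    )
    return correct / len(examples)


def toy_logprobs(context: str, continuation: str) -> List[float]:
    """Stand-in model (assumption): words already seen in the context are more likely."""
    seen = set(context.lower().split())
    return [-1.0 if tok.lower() in seen else -3.0 for tok in continuation.split()]


EXAMPLES: List[Example] = [
    ("the sky is blue so the color of the sky is", ["blue", "green"], 0),
    ("cats chase mice so the animal cats chase is", ["dogs", "mice"], 1),
]

print(accuracy(EXAMPLES, toy_logprobs))
```

Swapping `toy_logprobs` for a function that calls a real provider would leave the scoring logic unchanged, which is the main reason to keep the model behind a small interface.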

Use Cases and Applications of lm-evaluation

lm-evaluation is versatile and can be used across several sectors, including:

- Academic Research: Researchers can benchmark language models and track improvements over time, supporting academic work in NLP.

- Industrial Applications: Companies can use lm-evaluation to check the robustness of their language models and confirm that they perform well in practical applications.

- Education: The suite can be integrated into academic curricula to give students practical experience in evaluating language models.

Getting Started with lm-evaluation

Getting started with lm-evaluation is straightforward. The steps are:

- Clone the Repository: Clone the lm-evaluation repository from GitHub.

- Change into the Directory: Change into the lm-evaluation directory that cloning created on your local machine.

- Install Dependencies: Use pip to install the necessary dependencies.

- Run Tests: Run the suite to execute the desired evaluations using either the AI21 Studio API or OpenAI’s GPT-3 API.

- Best Practice: Use the latest available versions of the dependencies. Detailed instructions are in the project documentation.
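
The clone, change-directory, and install steps above can be sketched as a small script. The repository URL (assumed to live under the AI21Labs organization on GitHub) and the editable `pip install -e .` form are assumptions, so check the project documentation for the exact commands. The script only prints the commands by default and runs nothing.

```python
import subprocess
import sys
from pathlib import Path
from typing import List, Tuple

# Assumption: the repository is hosted under the AI21Labs organization on GitHub.
REPO_URL = "https://github.com/AI21Labs/lm-evaluation"


def setup_steps(repo_url: str = REPO_URL, workdir: str = ".") -> List[Tuple[List[str], str]]:
    """Return (command, working directory) pairs for the clone and install steps."""
    repo_name = repo_url.rstrip("/").split("/")[-1]
    repo_dir = str(Path(workdir) / repo_name)
    return [
        # Step 1: clone the repository into the working directory.
        (["git", "clone", repo_url], workdir),
        # Steps 2 and 3: run pip from inside the cloned directory.
        # The editable install ("-e .") is an assumption, not confirmed by the text.
        ([sys.executable, "-m", "pip", "install", "-e", "."], repo_dir),
    ]


def run_setup(dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for command, cwd in setup_steps():
        print(f"[{cwd}] {' '.join(command)}")
        if not dry_run:
            subprocess.run(command, cwd=cwd, check=True)


run_setup(dry_run=True)
```

Calling `run_setup(dry_run=False)` would actually run the commands, which requires git, pip, and network access.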

How lm-evaluation Works

lm-evaluation exercises language models through a simple workflow: set up the environment, select the appropriate tasks, and run the evaluations. The resulting performance metrics show developers where further improvements are needed.
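
A select-run-report workflow of this kind might look roughly like the sketch below, using a document probability metric as the example task. The task registry, the `run_eval` helper, and the toy model are hypothetical and are not lm-evaluation’s actual API. Normalizing the log-probability per byte is one common choice, assumed here because it lets models with different tokenizers be compared.

```python
from typing import Callable, Dict, List, Sequence

# Hypothetical model interface: text in, one log-probability per token out.
DocLogprobs = Callable[[str], Sequence[float]]


def doc_logprob_per_byte(docs: Sequence[str], model: DocLogprobs) -> float:
    """Total log-probability of the documents divided by their UTF-8 byte count."""
    total_logprob = sum(sum(model(doc)) for doc in docs)
    total_bytes = sum(len(doc.encode("utf-8")) for doc in docs)
    return total_logprob / total_bytes


# Hypothetical task registry: task name -> function that scores a model.
TASKS: Dict[str, Callable[[DocLogprobs], float]] = {
    "toy_docs": lambda model: doc_logprob_per_byte(["hello world", "abc"], model),
}


def run_eval(task_names: Sequence[str], model: DocLogprobs) -> Dict[str, float]:
    """Run the selected tasks against one model and collect a metric per task."""
    unknown = [name for name in task_names if name not in TASKS]
    if unknown:
        raise KeyError(f"unknown tasks: {unknown}")
    return {name: TASKS[name](model) for name in task_names}


def toy_model(text: str) -> List[float]:
    """Stand-in model (assumption): every whitespace-separated token costs -2.0."""
    return [-2.0 for _ in text.split()]


results = run_eval(["toy_docs"], toy_model)
for name, value in results.items():
    print(f"{name}: {value:.4f} log-prob per byte")
```

Values closer to zero mean the model finds the documents more probable, so comparing this number before and after a model change gives a simple signal of whether the change helped.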

Pros and Cons of lm-evaluation

Pros:

- Varied evaluation tasks: it supports multiple task types, giving a fuller view of a language model’s capabilities.

- Cross-platform: works with both the AI21 Studio API and OpenAI’s GPT-3 API.

- Community collaboration: open source, so contributions and knowledge sharing are welcome.

Cons:

- Setup hurdles: although setup is fairly easy, people with little experience of GitHub or pip may need some time to get up and running.

User Feedback

Generally, users appreciate the suite’s feature completeness and community-oriented feel, but most would like setup documentation that is easier to follow.

Conclusion about lm-evaluation

lm-evaluation is a useful utility for anyone working on language model development or research. Its range of tests, support for multiple providers, and community-driven philosophy are definite strengths. Although setup may be tricky for a few users at first, its documentation and community support make it usable by a wide audience. As contributions continue to update the tool, it is well placed to become a go-to utility for language model evaluation.

lm-evaluation FAQs

What is lm-evaluation?

lm-evaluation is a suite developed to measure the performance of large language models.

How can I contribute to the lm-evaluation project?

You can contribute by creating a GitHub account and joining the project’s development through its repository.

Which provider APIs does lm-evaluation support?

lm-evaluation currently supports running tasks through the AI21 Studio API and OpenAI’s GPT-3 API.

How do I set up lm-evaluation?

Clone the repository, change into the lm-evaluation directory, and install the dependencies using pip.

License

lm-evaluation is licensed under the Apache-2.0 license, making it free to use and distribute.