What is Chinchilla?

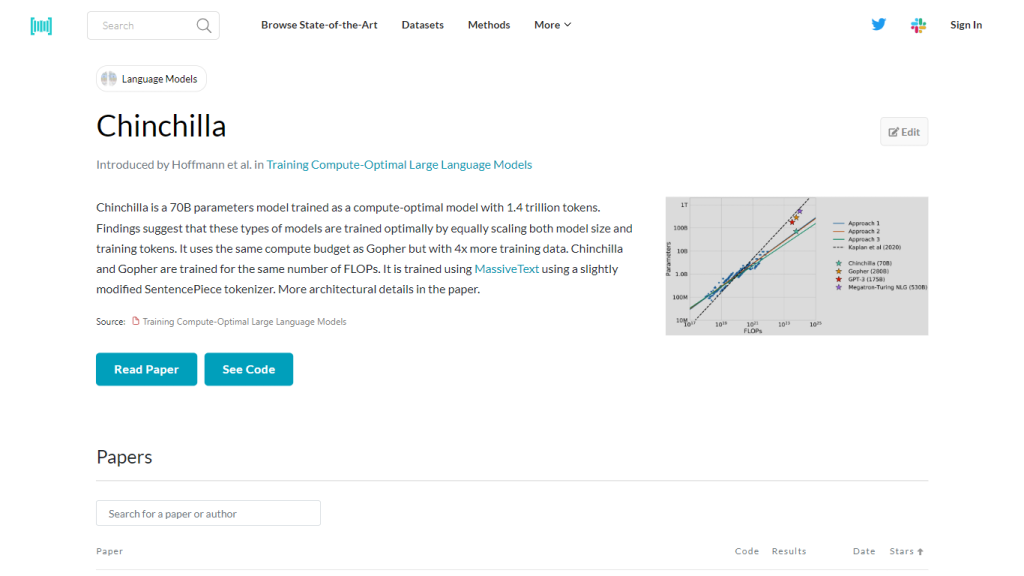

Chinchilla is a 70B-parameter, largescale AI model. It is carefully designed to have a good balance between model size and volume of training data so that both efficiently learn from each other. On a staggering 1.4 trillion tokens, Chinchilla is a model and data scaling effort proportional to each other; this approach was borrowed from research suggesting that the best training occurs when model size and training tokens grow together.

Chinchilla shares its computational power with another model called Gopher but is differentiated by four times more training data. Both models remain conceived to run on the same amount of FLOPs, thus keeping the consumption of compute resources at par. Chinchilla is trained on the enormous dataset MassiveText and uses an adaptation of the SentencePiece tokenizer for understanding and processing data.

Key Features & Benefits

Chinchilla Feature List

- Compute-Optimal Training: A 70 billion parameter model trained for the optimal scaling of model size and training data.

- Extensive Training Data: 1.4 trillion tokens, so more or less a very rich and full dataset for deep learning.

- Balanced Compute Resources: This provides Gopher’s compute budget while giving four times more training data.

- Same resource allocation as Gopher: Keeps training at the same number of FLOPs.

- It uses MassiveText; training with a lightly modified SentencePiece tokenizer on the MassiveText dataset makes available a large corpus for model learning.

Benefits of Using Chinchilla

Balanced model size and scale of training data, enriched training dataset, and well-execution of computational resources make Chinchilla provide optimized learning efficiency. This will make the tool very powerful to work on different AI applications and deliver robust performance with scalability.

Chinchilla Use Cases and Applications

The robust architecture and intensive training data at Chinchilla enable its application in many scenarios. This is of benefit in the following areas:

-

NLP:

Development of language models for translation, sentiment analysis, and text generation. -

Data Analysis:

Accurate predictions in sectors like finance and health from prediction models. -

Research and Development:

In the development of new AI technologies and methodologies.

From healthcare to financial services, high technology, Chinchilla’s rich functionality caters to industries. Case studies and success stories of its early adopters show its potential to improve efficiency and outcome significantly in a host of applications.

How to Use Chinchilla

Step-by-Step Usage Guide

- Access the Platform: Log onto the Chinchilla platform via the website or associate API.

- Set up your environment to integrate Chinchilla and make it compatible with other existing systems.

- Prepare to provide data for processing in Chinchilla.

- Run the model against data to see insights from the analysis.

- Review results returned by Chinchilla and tweak for best results where necessary.

Tips and Best Practices

- Clean and set up your data properly for maximum accuracy in the analysis by Chinchilla.

- Keep your environment and tools updated for compatibility and performance.

- Big documentation and support resources are used when troubleshooting and optimization is required.

How Chinchilla Works

Chinchilla is based on a sophisticated architecture that ensures a good tradeoff between model size and the amount of training data. This model uses a SentencePiece tokenizer modified to ingest data from the MassiveText dataset, whose huge and diverse corpus it learns.

The training process is designed to use as many FLOPs as utilized by the Gopher model. At this point, Chinchilla, with this balanced approach, comes up with a superb performance without any excess in computational demand.

Pros and Cons of Chinchilla

Pros

- The learning is optimized due to the balanced size of the model with respect to the training dataset.

- It is trained on a very rich and diverse dataset.

- Optimal utilisation of computational resources is done.

Cons

- Setting up and training Chinchilla initially requires a huge amount of computation.

- Dependency on extensive datasets for optimal performance.

User Feedback and Reviews

The users have, in general, praised Chinchilla for its high performance and speed. A few of them, however, have stated that the environment setup is difficult at the beginning, and huge computational resources are required.

Conclusion about Chinchilla

Chinchilla is a very powerful AI model, intended to optimize the relationship between the size of a model and the amount of training data. It has been trained on rich datasets and used computational resources efficiently, which turns this engine into an asset in applied applications. Although Chinchilla may be overwhelming to set up initially, these pros weigh much more than the cons, making it highly recommended to any user in search of advanced AI capabilities.

That means future developments and updates can only work to improve performance and usability, keeping the technology at the very forefront of AI.

Chinchilla FAQs

What is Chinchilla when speaking about AI models?

Chinchilla is a 70 billion parameters AI model, explicitly developed for optimizing the relationship between the size of a model and training data, that has been trained on 1.4 trillion tokens.

How does Chinchilla differ from AI model Gopher?

Chinchilla had the same compute budget as Gopher but used four times more training data to achieve optimal learning.

How many FLOPs are there in Chinchilla and Gopher?

Chinchilla and Gopher were trained for a similar amount of FLOPs, which is a measure of how many floating-point operations per second the models were trained on.

What is MassiveText and SentencePiece tokenizer used for in training Chinchilla?

Chinchilla was trained on the MassiveText dataset, using a modified version of the SentencePiece tokenizer for such training data interpretation.

Is there a research paper for details on the Chinchilla model?

Yes, more details of the architecture and further insights regarding the training and design of the Chinchilla model are available in this associated research paper.