What is ELMo?

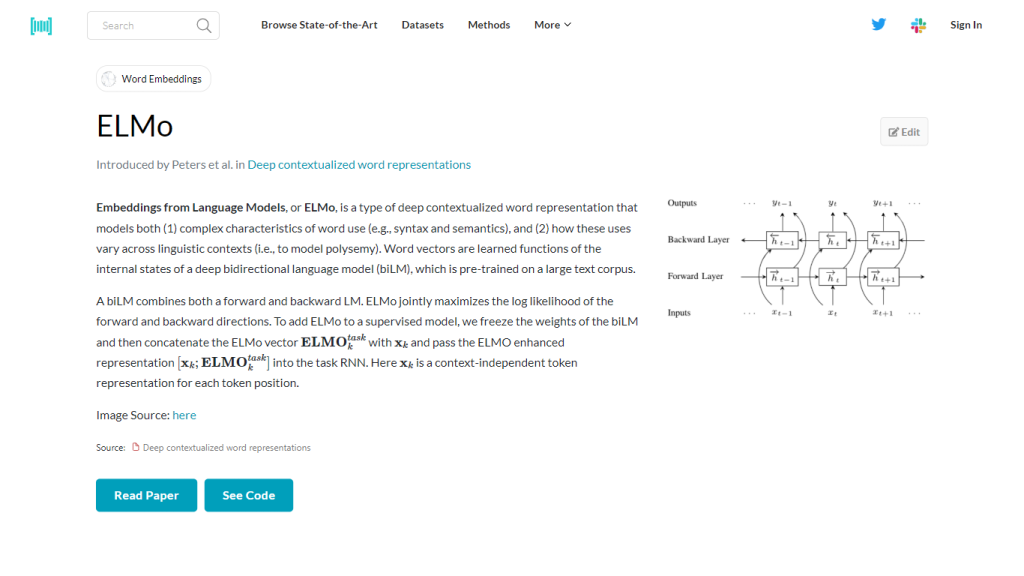

ELMo (Embeddings from Language Models) is a language representation model that helps a machine capture complex properties of word usage. It models both the syntax and semantics of words and how their use varies across linguistic contexts. Its word vectors are functions of the internal states of a pre-trained deep bidirectional language model (biLM), which jointly models the forward and backward language model likelihoods. When ELMo is integrated into a task-specific model, the biLM weights remain frozen, and the ELMo vector is concatenated with the baseline token representation and fed into a task RNN, enriching the model’s input with context-aware word embeddings.

Key Features & Benefits of ELMo

Deep Contextualized Word Representations:

ELMo supplies word vectors that are sensitive to the nuance and subtlety of word usage.

Pre-trained Bidirectional Language Model:

Uses the internal states of a biLM, pre-trained on a large text corpus, to produce better embeddings.

Improvement of Task-specific Models:

ELMo vectors can be added to existing models to improve performance by incorporating context.

Modeling of Polysemy:

It identifies and uses the different meanings for a word, given its linguistic context.

Syntax and Semantics Modeling:

It learns complicated features of language use, like sentence structure and meaning.
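The polysemy point above can be illustrated with a toy sketch. It is not the ELMo biLM: `static_vector` and `contextual_vectors` are hypothetical helpers invented for illustration, and the "encoder" simply averages each token with its immediate neighbours. The only thing it demonstrates is the difference between one fixed vector per word and a vector that depends on the surrounding sentence.

```python
import hashlib

DIM = 4  # toy embedding size, chosen only for illustration


def static_vector(word):
    """Deterministic toy embedding: one fixed vector per word type."""
    digest = hashlib.sha256(word.encode("utf-8")).digest()
    return [b / 255.0 for b in digest[:DIM]]


def contextual_vectors(tokens):
    """Toy stand-in for a contextual encoder (NOT the ELMo biLM).

    Each output vector averages the token's static vector with those of its
    left and right neighbours, so it changes with the sentence.
    """
    static = [static_vector(t) for t in tokens]
    out = []
    for i in range(len(tokens)):
        window = static[max(0, i - 1): i + 2]  # left neighbour, token, right neighbour
        out.append([sum(col) / len(window) for col in zip(*window)])
    return out


s1 = ["river", "bank", "flooded"]
s2 = ["bank", "approved", "loan"]
ctx1 = contextual_vectors(s1)[s1.index("bank")]
ctx2 = contextual_vectors(s2)[s2.index("bank")]

# A static lookup always returns the same vector for "bank" ...
print(static_vector("bank") == static_vector("bank"))
# ... while the context-dependent vectors differ between the two sentences.
print(ctx1 != ctx2)
```

A real contextual model replaces the neighbour average with learned recurrent layers, but the contrast is the same: the representation of "bank" is computed from the whole sentence rather than looked up.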

ELMo’s Use Cases and Applications

ELMo can be used in any application that requires a nuanced understanding of language. Examples include:

- Sentiment Analysis: Pre-trained ELMo contextualized word embeddings can improve sentiment analysis models by better capturing the sentiment a sentence conveys.

- Named Entity Recognition: ELMo’s context-sensitive word vectors can improve precision in identifying and classifying entities.

- Machine Translation: ELMo can improve translation quality by providing a better representation of the context and meaning of words.

- Question Answering Systems: ELMo helps models answer user questions correctly by capturing the context of each question.

In areas such as finance, health care, and customer care, this improved language understanding can make text data processing faster and more accurate.

How to Use ELMo

Using ELMo requires a few basic steps:

- Load a pre-trained biLM for your model, and remember to freeze its weights.

- Concatenate the ELMo vector with the baseline token representation.

- Feed this enriched representation into the task-specific RNN.

- Fine-tune the task model on your data using the pre-trained contextual embeddings, keeping the biLM weights frozen.
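The steps above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not a real ELMo integration: `frozen_elmo_vectors` and `baseline_vectors` are hypothetical placeholders that return random numbers where a pre-trained biLM and a task embedding layer would normally sit, the dimensions are toy values, and the task RNN is a bare Elman-style recurrence.

```python
import math
import random

rng = random.Random(0)

BASE_DIM, ELMO_DIM, HIDDEN_DIM = 3, 4, 2  # toy sizes, chosen for illustration


def rand_matrix(rows, cols):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def frozen_elmo_vectors(tokens):
    """Hypothetical placeholder for the frozen biLM: one ELMo vector per token."""
    return [[rng.uniform(-1, 1) for _ in range(ELMO_DIM)] for _ in tokens]


def baseline_vectors(tokens):
    """Hypothetical placeholder for the task model's own token representation."""
    return [[rng.uniform(-1, 1) for _ in range(BASE_DIM)] for _ in tokens]


def task_rnn(inputs, W, U):
    """Minimal Elman-style RNN: h_t = tanh(W x_t + U h_{t-1})."""
    h = [0.0] * HIDDEN_DIM
    states = []
    for x in inputs:
        h = [
            math.tanh(
                sum(w * xi for w, xi in zip(W[j], x))
                + sum(u * hi for u, hi in zip(U[j], h))
            )
            for j in range(HIDDEN_DIM)
        ]
        states.append(h)
    return states


tokens = ["the", "bank", "approved", "the", "loan"]
elmo = frozen_elmo_vectors(tokens)              # step 1: biLM output, never updated
base = baseline_vectors(tokens)
enriched = [b + e for b, e in zip(base, elmo)]  # step 2: concatenate per token

W = rand_matrix(HIDDEN_DIM, BASE_DIM + ELMO_DIM)  # trainable task parameters
U = rand_matrix(HIDDEN_DIM, HIDDEN_DIM)
states = task_rnn(enriched, W, U)               # step 3: feed the task RNN

print(len(enriched[0]), len(states), len(states[0]))  # 7 5 2
```

In a real setup only `W`, `U`, and the other task parameters would receive gradient updates during training; the biLM that produces the ELMo vectors stays fixed.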

How ELMo Works

ELMo relies on a deep bidirectional language model (biLM) that is trained on text sequences in both the forward and backward directions. Compared with a unidirectional model, the biLM captures a richer picture of language structure, because each word is modeled with context from both its left and its right. The word vectors are functions of the internal states of this biLM, which is pre-trained on a large text corpus, so the same word receives a different embedding in different contexts. In a downstream model, the biLM weights are frozen and the ELMo vectors are combined with the task-specific representations, which supplies contextual information that can improve model performance.
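One way to read "functions of the internal states" is the weighted layer combination described in the original ELMo paper (this article does not spell it out): each token's ELMo vector is a softmax-weighted sum of the biLM layer outputs for that token, rescaled by a scalar. The sketch below assumes that formulation; the function names and the toy layer values are made up for illustration.

```python
import math


def softmax(scores):
    """Normalize raw scores into positive weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def elmo_vector(layer_states, layer_scores, gamma=1.0):
    """Collapse the biLM layers for one token into a single vector.

    layer_states: per-layer vectors for the token (all the same length)
    layer_scores: one unnormalized, task-learned score per layer
    gamma: task-learned scalar that rescales the whole vector
    """
    weights = softmax(layer_scores)
    dim = len(layer_states[0])
    return [
        gamma * sum(w * layer[d] for w, layer in zip(weights, layer_states))
        for d in range(dim)
    ]


# Toy internal states for one token: a token layer plus two recurrent layers.
layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
mixed = elmo_vector(layers, layer_scores=[0.0, 0.0, 0.0], gamma=1.0)
print(mixed)  # equal scores give a plain average: roughly [0.667, 0.667]
```

The layer scores and `gamma` belong to the task model and are learned with it, while the layer states themselves come from the frozen biLM.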

Advantages and Disadvantages of ELMo

ELMo offers the following advantages:

- Deep Contextual Understanding: It provides deep, context-aware word embeddings.

- Better Model Performance: Integrating ELMo into task-specific models generally improves performance.

- Versatility: It can be applied to a range of NLP tasks, from simpler ones such as sentiment analysis to more complex ones such as machine translation.

Its limitations include the following:

- Deep Bidirectional Model: The deep bidirectional model is computationally expensive, both to train and to run at prediction time.

- Integration Complexity: Integrating ELMo into existing systems is non-trivial and requires expertise in NLP and machine learning.

Conclusion about ELMo

To sum up, ELMo is a powerful tool for improving many aspects of natural language processing through deep contextualized word embeddings. It provides a pre-trained deep bidirectional language model whose context-aware word representations bring significant benefits to task-specific models. Although it is computationally expensive and somewhat cumbersome to integrate into downstream models, its ability to capture complex language structure and polysemy makes it a valuable asset for downstream NLP applications. Ongoing development and updates may bring further capabilities and ease of use.

ELMo Frequently Asked Questions

What is ELMo?

ELMo stands for Embeddings from Language Models. It is a type of language representation model that provides deep contextualized word representations.

What does ELMo model?

ELMo models both the complex characteristics of word use, such as syntax and semantics, and how word use varies across linguistic contexts, that is, polysemy.

How does ELMo generate word vectors?

ELMo computes word vectors as functions of the internal states of a deep bidirectional language model that is pre-trained on a large text corpus.

How would you incorporate ELMo into a supervised model?

To incorporate ELMo into a task-specific model, freeze the weights of the pre-trained biLM, concatenate the ELMo vector with the token representation, and feed this enriched representation to the task RNN.

What is a deep bidirectional language model (biLM)?

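A deep bidirectional language model, or biLM, combines a forward language model, which predicts each token from the tokens before it, with a backward language model, which predicts each token from the tokens after it. The two directions are trained together, which gives a richer understanding of language structure than a single direction alone.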
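To make the two directions concrete, the short sketch below lists what each direction of a biLM conditions on when it predicts a token. `bilm_training_pairs` is a hypothetical helper written for this illustration; it builds no model and only enumerates the (context, target) pairs.

```python
def bilm_training_pairs(tokens):
    """List the (context, target) pairs seen by each direction of a biLM."""
    # Forward LM: predict token k from everything before it.
    forward = [(tokens[:k], tokens[k]) for k in range(len(tokens))]
    # Backward LM: predict token k from everything after it.
    backward = [(tokens[k + 1:], tokens[k]) for k in range(len(tokens))]
    return forward, backward


forward, backward = bilm_training_pairs(["the", "cat", "sat"])
for (left, target), (right, _) in zip(forward, backward):
    print(f"{target!r}: forward sees {left}, backward sees {right}")
# 'the': forward sees [], backward sees ['cat', 'sat']
# 'cat': forward sees ['the'], backward sees ['sat']
# 'sat': forward sees ['the', 'cat'], backward sees []
```

Between them, the two directions cover the full sentence around every token, which is why the combined internal states carry both left and right context.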