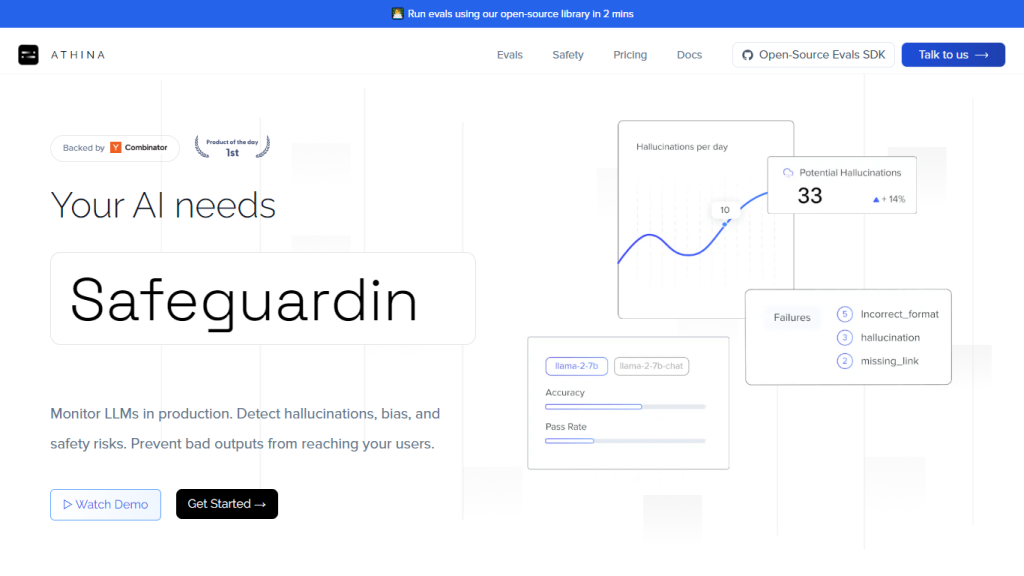

What is Athina AI?

Athina AI is a tool for companies deploying large language models (LLMs) into production. It lets users run evaluations within minutes using an open-source library. It helps monitor LLMs for problems such as hallucination, bias, and safety risks, and helps ensure that outputs are of high enough quality to pass on to end users. It also offers a wide range of evaluation metrics, with tools for monitoring, debugging, analyzing, and improving LLM pipelines. Athina’s enterprise-grade platform gives users full control over privacy and supports multiple teams working together on historical analytics.

Key Features & Benefits of Athina AI

- Run Evaluations with an Open-Source Library: Run evaluations with an open-source library and minimal code.

- Detect Hallucinations, Bias, and Safety Risks: Detect hallucinations, bias, and safety risks in LLM outputs, and mitigate them.

- Extended Set of Evaluation Metrics: More than 40 preset evaluation metrics, plus support for custom evaluations.

- Monitor, Debug, Analyze, and Improve: Comprehensive tools for improving LLM pipelines.

- Full Control Over Privacy: Self-hosting keeps all evaluations within the user’s own infrastructure.

- Multi-User Collaboration: With Apache Superset, multiple teams can work together on historical analytics without interrupting one another’s workflow.

- GraphQL API: Programmatic access to logs and evaluations, which supports integration and workflow automation.

- LLM Agnostic: Works with any large language model, whether pre-trained or fine-tuned for specific needs.

Use Cases and Applications of Athina AI

Athina AI can be used in the following cases:

- Performance Evaluation: Quickly gauge how an LLM is performing in production using evaluation metrics.

- Risk Detection: Continuously monitor and analyze LLM pipelines for bias and hallucinations to help keep AI outputs safe and reliable.

- Collaborative Analytics: Collaborate securely with other teams on historical analytics using Athina’s enterprise-grade platform, to better supervise language models.

Athina AI is suited to a range of roles, from machine learning engineers and data scientists to AI developers, AI ethicists, and product managers.

How to Use Athina AI

Using Athina AI is straightforward. The guide below describes the usage in five steps:

- Integration: Integrate Athina into your working environment with a few lines of code.

- Set Up Evaluation: Configure the evaluations you need, starting from dozens of presets or customizing your own.

- Run Evaluations: Run the evaluations and track the performance of your LLM.

- Analyze Results: Debug, analyze, and improve your language model using the platform’s tools.

- Collaborate: Use multi-user support to collaborate on analytics and improve model supervision.

For best results, keep your evaluation metrics up to date as your model’s requirements change, and use the GraphQL API to integrate and automate the workflow.
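As a rough illustration of that kind of automation, the sketch below builds a GraphQL-over-HTTP request in Python using only the standard library. The endpoint URL, the `logs` field and its sub-fields, the `limit` variable, and the bearer-token header are all hypothetical placeholders, not Athina’s documented schema, so check the actual API reference before relying on any of them. The request is built but not sent.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the real URL from the API documentation.
GRAPHQL_URL = "https://example.invalid/graphql"

# Hypothetical query shape; the field names are assumptions for illustration only.
QUERY = """
query RecentLogs($limit: Int!) {
  logs(limit: $limit) {
    id
    prompt
    response
  }
}
"""


def build_graphql_request(query: str, variables: dict, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a GraphQL POST request with a JSON body."""
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    return urllib.request.Request(
        GRAPHQL_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


request = build_graphql_request(QUERY, {"limit": 10}, api_key="YOUR_API_KEY")
payload = json.loads(request.data.decode("utf-8"))
print(payload["variables"])
```

Sending the request would be a matter of passing it to `urllib.request.urlopen` once the real endpoint, schema, and credentials are known.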

How Athina AI Works

Athina AI requires little setup to integrate into an existing LLM environment. It uses a suite of algorithms and models to monitor and evaluate LLM output. The platform supports continuous runtime monitoring of models to detect anomalies in real time. The workflow consists of:

- Integration: Integrate the Athina open-source library into your existing LLM pipeline.

- Configuration: Define the evaluation metrics and the parameters to monitor.

- Execution: Run evaluations to collect information on LLM performance.

- Analysis: Analyze the results with Athina’s tools to pinpoint areas for improvement.
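The four steps above can be sketched in plain Python. This is a minimal illustration and does not use Athina’s library: the `EvalResult` type, the `context_overlap` metric, the 0.5 threshold, and the sample records are all made up for the example, and a real evaluation would rely on far stronger metrics than word overlap.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    name: str
    score: float
    passed: bool


def context_overlap(response: str, context: str) -> float:
    """Toy groundedness proxy: fraction of response words found in the context."""
    response_words = response.lower().split()
    context_words = set(context.lower().split())
    if not response_words:
        return 0.0
    hits = sum(1 for word in response_words if word in context_words)
    return hits / len(response_words)


# Configuration: metrics to run and the pass threshold for each (assumed values).
METRICS = {"context_overlap": (context_overlap, 0.5)}


def run_evaluations(records: list[dict]) -> list[list[EvalResult]]:
    """Execution: score every logged response against every configured metric."""
    all_results = []
    for record in records:
        results = []
        for name, (metric, threshold) in METRICS.items():
            score = metric(record["response"], record["context"])
            results.append(EvalResult(name, score, score >= threshold))
        all_results.append(results)
    return all_results


# Integration: in a real pipeline these records would come from logged LLM calls.
records = [
    {"context": "paris is the capital of france", "response": "the capital of france is paris"},
    {"context": "paris is the capital of france", "response": "berlin hosts the largest airport"},
]

# Analysis: collect the indices of records that failed at least one metric.
failures = [
    index
    for index, results in enumerate(run_evaluations(records))
    if any(not result.passed for result in results)
]
print(failures)
```

Keeping the metrics in a dictionary means a new check can be added to the configuration without touching the execution loop.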

Pros and Cons of Athina AI

Pros

- Broad Evaluation Metrics: 40+ preset metrics, plus support for custom evaluations.

- Privacy: Self-hosted solution for complete data privacy.

- Multi-User: Support for multiple users, so different teams can work together.

- LLM Support: Works with any large language model, including custom or fine-tuned versions.

Cons

- Setup Time: Some initial setup and configuration time may be required.

- Feature Availability: Some advanced features may be limited to paid plans.

Conclusion of Athina AI

In sum, Athina AI offers a platform for tracking and assessing the performance of large language models in production environments. With more than 40 evaluation metrics, full control over privacy, and multi-user support, Athina can serve as an early warning system for the dependability and safety of AI outputs. Some upfront setup may be necessary, but it can pay off in the long run. Future developments and updates are likely to extend its capabilities further, which makes it a useful asset for teams working with LLMs.

Athina AI FAQs

What is Athina AI?

Athina AI is a platform that helps ensure large language models in production are reliable and safe.

What can I do with Athina AI?

With Athina AI, you can check LLM outputs for bias, hallucinations, and other risks, run more than 40 evaluation metrics, and manage your prompts.

Does Athina AI work with any team size?

Yes. The Athina AI monitoring platform works with teams of all sizes. There is a free Starter plan for smaller teams and customized solutions for enterprise use.

Does Athina AI work with any Large Language Model?

Yes. The platform is LLM-agnostic and can work with any LLM on the market, with no restriction on which model it is used with.

How does Athina AI ensure there is no breach of data privacy and data control?

Athina AI is a self-hosted solution, so all evaluations run on the user’s own infrastructure, which gives the user full control over data and privacy.