What is DeBERTa?

DeBERTa stands for Decoding-enhanced BERT with Disentangled Attention. It is a natural language processing model developed by Microsoft. It improves on the BERT architecture with a disentangled attention mechanism, which represents the content of each token separately from its position when processing sequences, and this leads to better performance on text tasks.

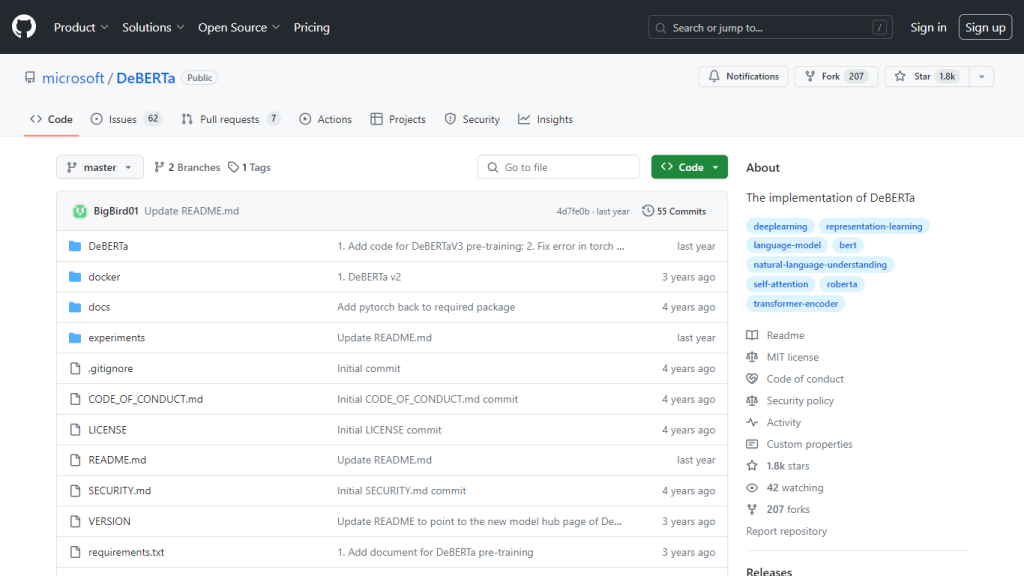

DeBERTa builds on the popular BERT model but differs in its architecture, specifically in the design of its attention layers. The project is hosted on GitHub as open source, with the implementation details, documentation, and source code freely available. This lets developers and researchers around the world contribute to its ongoing development and use it in a range of NLP applications.

Some Features of DeBERTa

Several features make DeBERTa stand out in natural language processing:

- Robust NLP Model: DeBERTa builds on the BERT architecture and adds a disentangled attention mechanism to improve how sequence information is processed.

- Open Source: The model is published on GitHub, so anyone can use it and contribute to it.

- Applicability: DeBERTa has shown flexible and efficient results across many kinds of NLP tasks.

- Microsoft-Backed: The model is developed by Microsoft, which lends credibility to the quality and upkeep of the code base.

- Community-Oriented: The project accepts community contributions, which encourages collaboration and innovation.

DeBERTa has a lot to offer in terms of advanced sequence processing, ease of integration, and robust support from a vibrant community of developers.

Use-cases and Applications of DeBERTa

DeBERTa is very versatile and can be used across many NLP tasks and industries:

- Text Classification: Improving the accuracy of sorting text into predefined categories.

- Sentiment Analysis: Recognizing the sentiment expressed in textual data.

- Machine Translation: Enhancing the quality and fluency of translated text.

- Question Answering: Building systems that answer questions about a given text.

- Named Entity Recognition: Recognizing and classifying the entities mentioned in a text.

Solutions built on DeBERTa can be deployed in industries such as finance, healthcare, e-commerce, and customer service to speed up and scale their data processing and analytics.

Installation Guide for DeBERTa

DeBERTa is straightforward to install: the repository documents the process, and many users in the community can assist you. Here’s a step-by-step guide:

- Make a GitHub Account: Sign up on GitHub if you plan to contribute. The public repository hosting the DeBERTa model can be browsed and cloned without an account.

- Cloning the Repository: Clone the DeBERTa repository to your local machine using Git commands.

- Installing Dependencies: Depending on your OS and setup, create a virtual environment and install the dependencies in it. The details are in the documentation.

- Running Examples: Run the provided examples to observe basic usage and experiment with the model.

- Custom Use and Integration: You can adapt the code to your use case, alongside other NLP tooling, and incorporate DeBERTa into your own work. As a best practice, check the repository regularly for updates and follow the community discussions.

The Mechanics of DeBERTa

DeBERTa's architecture is worth a closer look. Here is a brief overview of how it works:

DeBERTa uses a disentangled attention mechanism. Each token is represented by two separate vectors, one for its content and one for its position, and the attention weights are computed from both. This lets the model weigh what a word means separately from where it sits relative to other words, which helps it capture dependencies across a sequence and improves its performance on multiple NLP tasks.

The main building blocks are:

- BERT architecture: The underlying architecture of DeBERTa.

- Disentangled Attention: Processes content and positional information independently, which gives better results when processing sequences.
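To make the idea of disentangled attention more concrete, here is a minimal single-head sketch in NumPy. It follows the general formulation from the DeBERTa paper as commonly described: the attention score is the sum of a content-to-content, a content-to-position, and a position-to-content term. The function names, the random toy weights, and the small dimensions are assumptions made for illustration. This is not the code from the official repository.

```python
import numpy as np


def relative_index(n, k):
    """Bucketed relative distance delta(i, j), clipped into [0, 2k - 1]."""
    i = np.arange(n)[:, None]
    j = np.arange(n)[None, :]
    return np.clip(i - j + k, 0, 2 * k - 1)


def disentangled_attention(H, P, Wq_c, Wk_c, Wv_c, Wq_r, Wk_r):
    """Single-head sketch (illustrative, not the official implementation).

    H: (n, d_model) content vectors, one per token.
    P: (2k, d_model) relative-position embeddings shared by all tokens.
    """
    n = H.shape[0]
    k = P.shape[0] // 2
    d = Wq_c.shape[1]

    # Content and position are projected separately.
    Qc, Kc, Vc = H @ Wq_c, H @ Wk_c, H @ Wv_c
    Qr, Kr = P @ Wq_r, P @ Wk_r

    delta = relative_index(n, k)  # delta[i, j] = bucket of (i - j)

    # Content-to-content: Qc_i . Kc_j
    c2c = Qc @ Kc.T
    # Content-to-position: Qc_i . Kr_{delta(i, j)}
    c2p = np.take_along_axis(Qc @ Kr.T, delta, axis=1)
    # Position-to-content: Kc_j . Qr_{delta(j, i)}, hence the transpose
    p2c = np.take_along_axis(Kc @ Qr.T, delta, axis=1).T

    # Three terms are summed, so scale by sqrt(3d) instead of sqrt(d).
    scores = (c2c + c2p + p2c) / np.sqrt(3 * d)
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=1, keepdims=True)
    return weights @ Vc, weights


# Toy run with random inputs (all values are made up for the demo).
rng = np.random.default_rng(0)
n, d_model, k = 5, 8, 2
H = rng.normal(size=(n, d_model))
P = rng.normal(size=(2 * k, d_model))
mats = [rng.normal(size=(d_model, d_model)) for _ in range(5)]
out, attn = disentangled_attention(H, P, *mats)
print(out.shape, attn.shape)
```

The two calls to `np.take_along_axis` are where positional information enters the attention scores. Standard BERT attention would keep only the `c2c` term and instead add position embeddings to the input.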

The workflow runs from tokenizing the input data, through the disentangled attention layers, to an output that can be used for classification, translation, question answering, and so on.
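The tokenize, encode, and output flow can be sketched as follows. This sketch assumes the Hugging Face `transformers` library, PyTorch, and the `microsoft/deberta-base` checkpoint name. None of these are mentioned above, so treat them as one possible route rather than the repository's own instructions. The function name `embed_texts` is hypothetical.

```python
def embed_texts(texts, model_name="microsoft/deberta-base"):
    """Tokenize texts and return DeBERTa's final hidden states.

    Assumption: `transformers` and `torch` are installed and the checkpoint
    can be downloaded. Imports are local so that merely defining this
    function does not require either library.
    """
    import torch
    from transformers import AutoModel, AutoTokenizer

    # Step 1: tokenization of the input data.
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    batch = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")

    # Step 2: pass the tokens through the encoder (attention layers).
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    with torch.no_grad():
        outputs = model(**batch)

    # Step 3: per-token vectors that a task-specific head could consume.
    return outputs.last_hidden_state
```

Calling `embed_texts(["DeBERTa separates content from position."])` would download the checkpoint on first use and return a tensor with one vector per token. A classification or question-answering head would then be trained on top of these vectors.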

DeBERTa: The Good and the Not So Much

Like any other tool, DeBERTa has strengths and potential drawbacks:

Pros:

- Advanced sequence processing capabilities.

- Open-source and community-powered.

- Backed by Microsoft, which adds to its reliability.

- Flexible enough to be adapted to different kinds of NLP tasks.

Cons:

- High computational requirements for the model during training.

- May need strong domain expertise for fine-tuning and optimization for specific tasks.

Users report strong performance and flexibility from DeBERTa, though some note that it requires significant computational resources.

Conclusion on DeBERTa

In a nutshell, DeBERTa is a powerful, versatile NLP model that builds on the strengths of BERT and extends them with disentangled attention. It is open source, open to contributions, and supported by an active community, and it is competitive across applications ranging from text classification to machine translation.

This makes DeBERTa a strong option for anybody who wants to work with advanced NLP technology. Its progress and updates can be followed in the GitHub repository, which helps users stay current with developments in NLP.

DeBERTa FAQs

- What is DeBERTa?
  An NLP model that enhances BERT with a disentangled attention mechanism for better natural language processing.

- How can I contribute to DeBERTa?
  You can contribute by creating a GitHub account and submitting changes to the DeBERTa repository under Microsoft’s organization on GitHub.

- What does DeBERTa stand for?
  It stands for ‘Decoding-enhanced BERT with Disentangled Attention’.

- What is the license under which DeBERTa is released?
  DeBERTa is released under the MIT license, a widely used permissive license.

- Is DeBERTa still being developed and improved?
  The core DeBERTa implementation is stable. The latest updates and news are posted in the GitHub repository, where the community contributes improvements and enhancements.