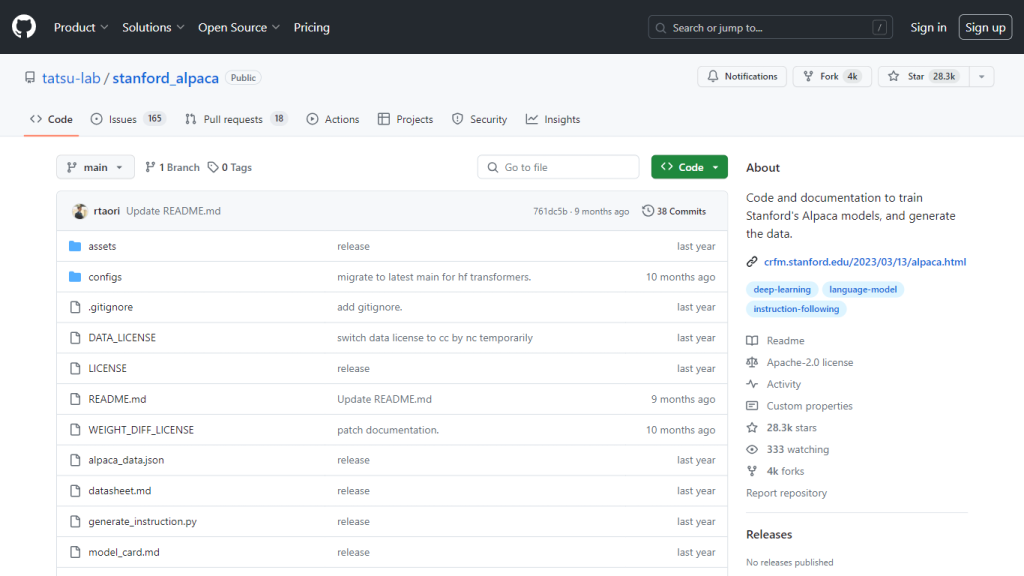

What is Stanford Alpaca?

Stanford Alpaca is a GitHub repository from tatsu-lab that provides resources for training and fine-tuning instruction-following AI models. Alpaca is built on LLaMA, Meta's language model, and aims to help researchers by providing documentation, code, and a dataset for developing specialized AI applications. The project is still under development and is intended for research purposes only. All datasets in the project are licensed under CC BY NC 4.0, so they may only be used non-commercially.

Key Features & Benefits of Stanford Alpaca

Stanford Alpaca includes several features and tools that make it useful for researchers:

- Dataset Availability: The repository releases the 52,000-entry dataset used to fine-tune the model. This dataset is a valuable resource for building solid instruction-following capabilities.

- Data Generation Code: The repository includes the code used to generate the training data, which makes it easier to reproduce or extend the dataset.

- Fine-Tuning Procedure: The documentation describes, step by step, how the Alpaca models are fine-tuned.

- Model Weights Recovery: The repository provides a way to recover the Alpaca-7B weights from the released weight difference, which supports replication and experimentation.

- Licensing Information: The licensing terms are stated clearly, so users can see that the project is restricted to non-commercial research use.

The main benefits of using Stanford Alpaca are a simpler research workflow, a model that can be adapted to your own purposes, and the chance to contribute to open, collaborative AI development.

Use Cases and Applications of Stanford Alpaca

Stanford Alpaca can potentially serve any use case where instruction-following AI models are useful. Examples include:

- Building educational tools that need to understand user instructions and respond to them accurately.

- Building customer service bots that can handle complex inquiries and give detailed, precise answers.

- Improving how virtual assistant applications understand and carry out user commands.

Alpaca models are most likely to be applied in education, customer relations, and software development. No specific case studies are available, but the potential in these fields is considerable.

How to Use Stanford Alpaca

Here are the general steps for using Stanford Alpaca:

- Access Repository: Clone the repository from GitHub to your local machine to get the dataset, code, and documentation.

- Generate Data: Use the provided data generation code to build the datasets you need for training your model.

- Fine-Tune the Model: Follow the fine-tuning procedure carefully as you adapt the Alpaca model to your needs.

- Restore Model Weights: If necessary, recover the Alpaca-7B model weights by using the weight difference file.
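As a rough illustration of the data preparation that precedes fine-tuning, the sketch below turns dataset records into prompt and target pairs. The field names `instruction`, `input`, and `output`, the template wording, and the helper names `build_example` and `load_examples` are assumptions made for this example; check the repository for the actual format before relying on them.

```python
import json

# Illustrative templates only: the wording is an assumption for this sketch,
# not necessarily the exact prompt used by the repository.
PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input "
    "that provides further context. Write a response that appropriately "
    "completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Response:\n"
)


def build_example(record):
    """Turn one dataset record into a (prompt, target) pair."""
    has_input = bool(record.get("input"))
    template = PROMPT_WITH_INPUT if has_input else PROMPT_NO_INPUT
    prompt = template.format(
        instruction=record["instruction"],
        input=record.get("input", ""),
    )
    return prompt, record["output"]


def load_examples(json_text):
    """Parse a JSON array of records and build one pair per record."""
    return [build_example(record) for record in json.loads(json_text)]
```

The prompt is what the model sees during training, and the target is the response it learns to produce. Records with an empty `input` use the shorter template so the model is not shown a blank input section.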

Best practices include reading the documentation thoroughly, respecting the licensing constraints, and contributing any modifications back to the repository for the benefit of the research community.

How Stanford Alpaca Works

Stanford Alpaca builds on LLaMA, Meta's language model, and fine-tunes it to follow text instructions. The project's main components are:

- Data Generation: Code in the repository generates the dataset needed for model training.

- Fine-Tuning: Step-by-step procedures help researchers fine-tune the Alpaca model, adjusting its parameters so that it responds better to specific instructions.

- Model Weights: A recovery mechanism reconstructs the model weights, which supports consistent and reproducible research.

The overall workflow runs from data generation through model training and fine-tuning, producing an instruction-following AI model tuned to the needs of its users.
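The weight recovery step can be pictured with a small sketch. It assumes the released difference is an element-wise "tuned minus base" delta, so adding it back to the base LLaMA weights yields the Alpaca weights. The function name `recover_weights` and the dictionary-of-lists layout are hypothetical and chosen only to keep the example self-contained; real checkpoints are tensors, and the repository's own recovery script should be used in practice.

```python
def recover_weights(base, diff):
    """Rebuild tuned weights as base + diff, parameter by parameter.

    `base` and `diff` map parameter names to flat lists of floats.
    This layout is an assumption made for illustration only.
    """
    if base.keys() != diff.keys():
        raise ValueError("base and diff must contain the same parameter names")

    recovered = {}
    for name, base_values in base.items():
        diff_values = diff[name]
        if len(base_values) != len(diff_values):
            raise ValueError(f"size mismatch for parameter {name!r}")
        # Element-wise addition undoes the subtraction used to create the diff.
        recovered[name] = [b + d for b, d in zip(base_values, diff_values)]
    return recovered
```

Distributing only a difference means the file is of no use without the original base weights, which is consistent with the release depending on permission from the LLaMA creators.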

Pros and Cons of Stanford Alpaca

Like any project, Stanford Alpaca has its pros and cons:

Pros:

- Thorough resources for model training and fine-tuning are available.

- Open source and community-driven, which invites contributions from the community.

- Licensing information is clear, which helps ensure the project is used within an ethical framework.

Cons:

- Licensed strictly for non-commercial use, which rules out commercial applications.

- Still under development, so there may be bugs and unfinished functionality.

User feedback generally points to the utility of this project in research settings, although some users note the limitations imposed by the non-commercial license.

Stanford Alpaca Conclusion

In a nutshell, Stanford Alpaca provides a valuable set of tools and resources for developing instruction-following AI models, particularly for researchers and academics. It has limitations, such as its non-commercial licensing, but its open-source nature and comprehensive documentation make it a strong choice for those who want to contribute to the research community and benefit from it. Future updates and revisions will likely extend its capabilities and address some of its current limitations.

Stanford Alpaca FAQs

What is Stanford Alpaca?

Stanford Alpaca is a project that aims to build and release an instruction-following LLaMA model for research.

What is hosted in the Stanford Alpaca repository?

The repository contains a dataset, data generation code, the fine-tuning procedures, and a weight difference file for Alpaca-7B.

Is training code provided by Stanford Alpaca?

Yes, the repository contains code for both data generation and fine-tuning.

What are the usage restrictions for Stanford Alpaca?

The project's datasets are licensed under CC BY NC 4.0, and Stanford Alpaca is intended for non-commercial research use only.

Are the weights of the Alpaca-7B model released?

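The authors have expressed an intention to release the weights, pending permission from the LLaMA creators. In the meantime, the repository provides a weight difference file from which the Alpaca-7B weights can be recovered.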