What is XLM?

XLM (Cross-lingual Language Model) is the original PyTorch implementation of cross-lingual language model pretraining. It provides a foundation for multilingual Natural Language Processing (NLP) systems through pretraining objectives such as Masked Language Modeling (MLM) and Translation Language Modeling (TLM). These objectives train a single model on text from many languages so that it learns shared representations, which can then be fine-tuned for downstream tasks such as text classification and machine translation in different languages.

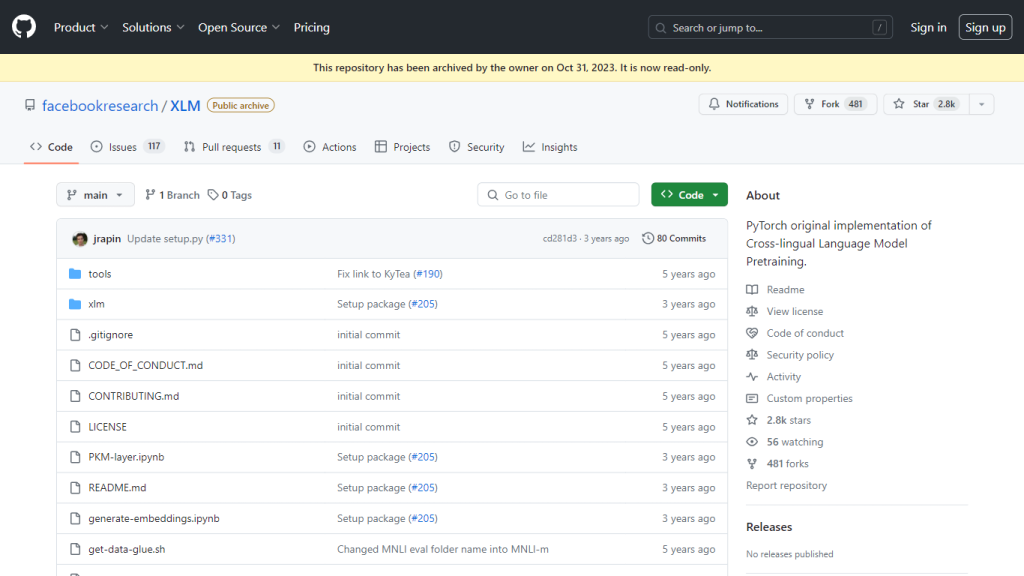

Built to bring the benefits of transfer learning to NLP, XLM uses data from high-resource languages to improve language models for languages with limited training data. The repository was archived on October 31, 2023, and is now read-only, but it remains a valuable reference for studying cross-lingual language model pretraining.

XLM’s Key Features & Benefits

XLM offers a range of features that make it a powerful tool for multilingual NLP:

- Cross-lingual Model Pretraining: Implements pretraining methods like Masked Language Model and Translation Language Model to enhance multilingual NLP performance.

- Machine Translation: Provides tools and scripts for training both supervised and unsupervised machine translation models.

- Fine-tuning Capabilities: Supports fine-tuning pretrained models on benchmark tasks such as GLUE and XNLI.

- Multi-GPU and Multi-node Training: Facilitates scaling the training process across multiple GPUs and nodes for efficient computation.

- Additional Resources: Works with external preprocessing tools, namely fastBPE for learning and applying Byte Pair Encoding (BPE) codes and the Moses tokenizer for cleaning and tokenizing text.
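To make the BPE step less abstract, here is a toy pure-Python sketch of how BPE codes are learned: start from characters, then repeatedly merge the most frequent adjacent pair. This is an illustration of the algorithm only, not fastBPE's interface or implementation; the function names (`learn_bpe`, `merge_pair`, `get_pair_counts`) and the `</w>` end-of-word marker are choices made for this sketch.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Rewrite every word so adjacent occurrences of `pair` become one symbol."""
    merged = {}
    for word, freq in vocab.items():
        symbols = word.split()
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = " ".join(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_counts, num_merges):
    """Learn up to `num_merges` merge operations (the "BPE codes")."""
    # Split words into characters plus an end-of-word marker so merges
    # never cross word boundaries.
    vocab = {" ".join(word) + " </w>": count for word, count in word_counts.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair; ties go to the first seen
        merges.append(best)
        vocab = merge_pair(best, vocab)
    return merges, vocab


merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 5)
print(merges)
# [('e', 's'), ('es', 't'), ('est', '</w>'), ('l', 'o'), ('lo', 'w')]
```

Frequent fragments such as "est</w>" become single symbols, so rare words can still be spelled out of known subword units. Real tools learn tens of thousands of merges over large corpora, and in XLM one BPE vocabulary is shared by all languages.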

With XLM, researchers and developers can improve multilingual NLP models, particularly for languages with limited resources, by transferring what the model learns from more resource-rich languages.

XLM’s Use Cases and Applications

XLM can be applied in various scenarios across different industries:

- Machine Translation: Develop and improve machine translation systems that work across multiple languages.

- Cross-lingual Information Retrieval: Enhance search engines and information retrieval systems to understand and process queries in different languages.

- Multilingual Sentiment Analysis: Analyze sentiments across various languages using a unified model.

- Global Customer Support: Create language models that can assist in offering customer support in multiple languages, improving service for non-English speaking users.
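Cross-lingual retrieval usually relies on a shared embedding space: queries and documents in different languages are encoded by the same multilingual model and compared by vector similarity. The sketch below illustrates only the ranking step with cosine similarity. It is not part of the XLM repository, and it assumes sentence vectors are already available from some multilingual encoder; the three-dimensional vectors are hand-made toy values, not real XLM embeddings.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def rank_documents(query_vec, doc_vecs):
    """Return document ids ordered from most to least similar to the query."""
    scores = {doc_id: cosine(query_vec, vec) for doc_id, vec in doc_vecs.items()}
    return sorted(scores, key=scores.get, reverse=True)


# Hand-made toy vectors standing in for encoder outputs; not real XLM embeddings.
query = [0.9, 0.1, 0.0]            # e.g. an English query
documents = {
    "fr_doc": [0.85, 0.15, 0.05],  # French document on the same topic
    "de_doc": [0.1, 0.9, 0.2],
    "es_doc": [0.0, 0.2, 0.95],
}
print(rank_documents(query, documents))  # ['fr_doc', 'de_doc', 'es_doc']
```

The ranking only works across languages if the encoder maps translations to nearby vectors, which is the kind of alignment that cross-lingual pretraining aims to provide.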

Industries such as technology, e-commerce, customer service, and media can use XLM in their multilingual NLP solutions. For example, a tech company might use XLM to build a translation tool, while a customer service firm could use it to improve multilingual support chatbots.

How to Use XLM

Using XLM involves several steps:

- Clone the Repository: The code lives at github.com/facebookresearch/XLM. Although the repository is archived, you can still clone it.

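- Install Dependencies: Install Python 3, NumPy, and PyTorch, plus the preprocessing tools (fastBPE and the Moses scripts); NVIDIA Apex is needed only for fp16 training. The code predates recent PyTorch releases, so you may need to pin an older version or patch small incompatibilities.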

- Pretrain the Model: Tokenize your corpora, apply BPE, and binarize the data, then use the provided training script to pretrain with MLM on monolingual data and, if you have parallel data, TLM.

- Fine-tune the Model: Fine-tune the pretrained model on a downstream task, such as a GLUE or XNLI task, to adapt it to your use case.

- Deploy the Model: Implement the trained model in your application, whether it is a translation tool, sentiment analysis system, or any other NLP solution.

Pretraining is computationally expensive, so plan for adequate hardware, especially if you intend to use multi-GPU or multi-node training.
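The pretraining step can be sketched as a small helper that assembles the command line for the repository's `train.py`. The helper name `xlm_pretrain_command`, the data path, and the experiment name are hypothetical. The flags (`--exp_name`, `--dump_path`, `--data_path`, `--lgs`, `--mlm_steps`) and the `torch.distributed.launch` wrapper for multi-GPU runs follow the README examples as best recalled here, so treat them as assumptions and check them against `train.py` in your checkout. A real run would also pass model-size and optimizer flags.

```python
import shlex


def xlm_pretrain_command(data_path, langs, mlm_steps, exp_name,
                         dump_path="./dumped", n_gpu=1):
    """Build the argv for an XLM MLM/TLM pretraining run (sketch).

    Assumption: a language pair such as "en-fr" in `mlm_steps` selects TLM
    on that parallel data, while a single language selects MLM.
    """
    train_args = [
        "train.py",
        "--exp_name", exp_name,
        "--dump_path", dump_path,
        "--data_path", data_path,  # binarized data from the preprocessing step
        "--lgs", "-".join(langs),
        "--mlm_steps", ",".join(mlm_steps),
    ]
    if n_gpu > 1:
        # Multi-GPU runs go through PyTorch's distributed launcher,
        # which starts one training process per GPU.
        return ["python", "-m", "torch.distributed.launch",
                f"--nproc_per_node={n_gpu}"] + train_args
    return ["python"] + train_args


cmd = xlm_pretrain_command(
    data_path="./data/processed/en-fr",  # hypothetical path
    langs=["en", "fr"],
    mlm_steps=["en", "fr", "en-fr"],     # MLM on en and fr, TLM on en-fr
    exp_name="xlm_en_fr",
    n_gpu=8,
)
print(shlex.join(cmd))
```

Building the argument list in Python, rather than pasting a long shell string, makes it easier to generate one run per language pair; `shlex.join` then renders a copy-pasteable command.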

How XLM Works

XLM relies on the following pretraining objectives for cross-lingual language modeling:

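- Masked Language Modeling (MLM): Randomly masks a fraction of the tokens in monolingual text (15% of BPE tokens in the XLM paper) and trains the model to predict them from the surrounding context. Because all languages share one model and one BPE vocabulary, what the model learns about context in one language can carry over to others.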

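- Translation Language Modeling (TLM): Extends MLM to parallel data. A sentence and its translation are concatenated, and tokens are masked in both, so the model can use context from either language to predict a masked word. This encourages the model to align its representations across languages.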
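Both objectives can be sketched in a few lines of plain Python. `build_tlm_stream` concatenates a sentence with its translation, restarting positions at 0 for the second sentence and tagging each token with its language, as the XLM paper describes. `mask_tokens` applies BERT-style masking (select about 15% of tokens, then replace 80% of those with a mask symbol, 10% with a random token, and keep 10%); for plain MLM you would call it on monolingual tokens. This is a simplified sketch: the function names and the `<mask>` string are hypothetical, it selects tokens independently rather than sampling an exact 15%, and it omits details of the real implementation such as sentence separators and frequency-based sampling.

```python
import random

MASK = "<mask>"  # placeholder mask symbol for this sketch


def build_tlm_stream(src_tokens, tgt_tokens, src_lang, tgt_lang):
    """Concatenate a sentence and its translation into one TLM input.

    Positions restart at 0 for the target sentence, and every token is
    tagged with its language.
    """
    tokens = list(src_tokens) + list(tgt_tokens)
    positions = list(range(len(src_tokens))) + list(range(len(tgt_tokens)))
    langs = [src_lang] * len(src_tokens) + [tgt_lang] * len(tgt_tokens)
    return tokens, positions, langs


def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style masking shared by MLM and TLM.

    Each token becomes a prediction target with probability `mask_prob`;
    a target is replaced by MASK 80% of the time, by a random vocabulary
    token 10% of the time, and left unchanged 10% of the time.
    """
    inputs = list(tokens)
    targets = [None] * len(tokens)  # None means "not predicted"
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue
        targets[i] = token
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)
        # otherwise the original token stays in place
    return inputs, targets


rng = random.Random(0)
tokens, positions, langs = build_tlm_stream(
    ["the", "cat", "sleeps"], ["le", "chat", "dort"], "en", "fr"
)
inputs, targets = mask_tokens(tokens, vocab=tokens, rng=rng, mask_prob=0.3)
print(positions)  # [0, 1, 2, 0, 1, 2]
print(langs)      # ['en', 'en', 'en', 'fr', 'fr', 'fr']
```

When "chat" is masked, the model can still see "cat" in the English half of the stream, which is what pushes TLM toward aligned cross-lingual representations.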

The workflow typically involves pretraining a language model on a large corpus of multilingual text, followed by fine-tuning on specific tasks or benchmarks. This two-step process enables the model to learn general language representations and then specialize in particular tasks.

XLM Pros and Cons

Like any technology, XLM has its advantages and potential limitations:

Pros:

- Comprehensive Pretraining: Effective for learning language representations across multiple languages.

- Scalability: Supports multi-GPU and multi-node training for efficient computation.

- Versatility: Applicable to various NLP tasks, including translation, sentiment analysis, and information retrieval.

Cons:

- Archived Repository: Since October 31, 2023, the repository has been read-only, so it receives no further updates or contributions.

- Resource Intensive: Requires substantial computational resources for training large models.

Overall, user feedback has been positive, particularly regarding the model’s performance and scalability, though some users note the challenges associated with the archived status of the repository.

XLM Pricing

XLM is free to use: the source code is openly available on GitHub, and there is no paid tier, so "freemium" does not apply. Check the repository’s LICENSE file before using it, especially in commercial settings. For extensive use or additional support, you may need to consider other solutions or services that complement XLM’s capabilities.

Conclusion about XLM

In summary, XLM offers a powerful framework for cross-lingual language modeling, making it an invaluable tool for multilingual NLP applications. Despite its archived status, it remains a crucial resource for researchers and developers aiming to enhance language models for diverse linguistic tasks. Future developments in this area may build upon the foundation laid by XLM, further advancing the capabilities of multilingual NLP systems.

XLM FAQs

- What is the main purpose of the XLM repository? The primary goal is to provide a PyTorch implementation for Cross-lingual Language Model Pretraining, facilitating the training of language models that understand multiple languages.

- Can I still use the XLM repository for my projects? Yes. You can still clone, study, and run the code, but the repository is read-only and no longer receives updates or accepts contributions.

- What techniques are included in XLM for language model pretraining? XLM includes Monolingual Language Model Pretraining (BERT), Cross-lingual Language Model Pretraining (XLM), and applications for Supervised and Unsupervised Machine Translation.

- Does XLM support multi-GPU training? Yes, it supports multi-GPU and multi-node training for efficient computation of complex models.

- What benchmarks can XLM’s pre-trained models be fine-tuned on? XLM’s pre-trained models can be fine-tuned on benchmarks such as GLUE and XNLI for enhanced performance.