What is LiteLLM?

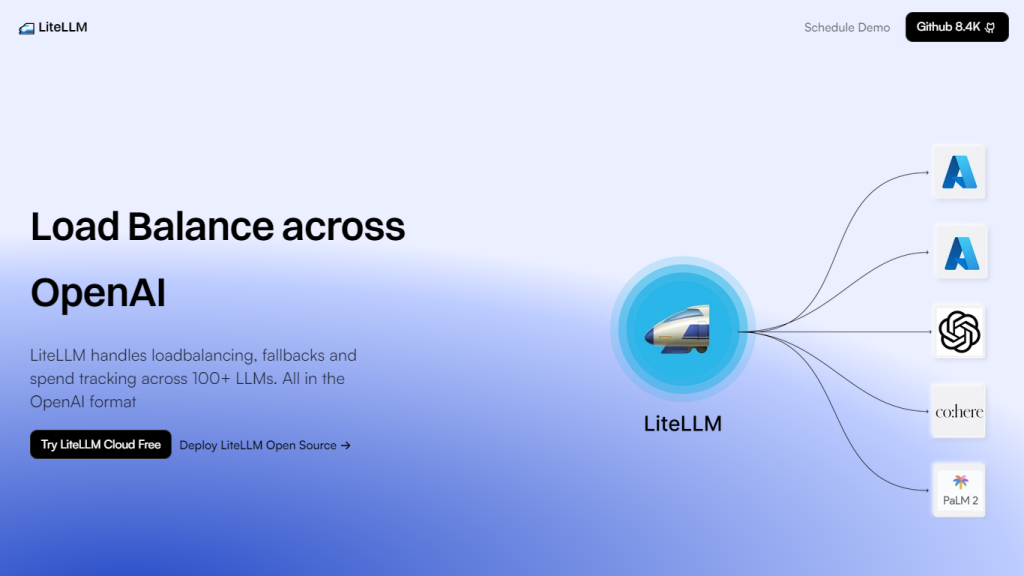

LiteLLM is a platform for managing large language models (LLMs), aimed at businesses and developers alike. It handles load balancing, fallbacks, and spend tracking across more than 100 models, all through the standard OpenAI format. This makes it possible to integrate and run many LLMs smoothly, reliably, and cost-effectively.

LiteLLM is backed by a large community and has attracted considerable attention: its GitHub repository has 8.4k stars, and the project reports more than 40,000 Docker pulls and more than 20 million requests served with 99% uptime. The platform remains under continuous development, supported by over 150 contributors who help it keep up with the varied demands of its users.

LiteLLM’s Key Features & Benefits

LiteLLM bundles the features an LLM management layer needs: load balancing across Azure, Vertex AI, Bedrock, and other platforms; fallbacks that keep services running; spend tracking to keep LLM costs within budget; and, most importantly, compatibility with the standard OpenAI format.

Community support: LiteLLM is backed by a community of more than 150 contributors, along with numerous resources and extensive documentation.

These qualities make LiteLLM a handy package for improving efficiency and reliability, and for cutting costs, when working with large language models.
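As an illustration of the load-balancing feature, the sketch below builds a configuration for LiteLLM's `Router` class, which can spread requests for one model alias across several deployments. The alias `chat-default`, the Azure deployment name, and the environment variable names are placeholders chosen for this example, not values taken from LiteLLM; check the current LiteLLM documentation before relying on the exact parameters.

```python
import os


def build_model_list():
    """Return two deployments sharing one alias, so a router can balance between them."""
    return [
        {
            "model_name": "chat-default",  # hypothetical alias that callers request
            "litellm_params": {
                "model": "azure/my-azure-deployment",  # placeholder deployment name
                "api_key": os.getenv("AZURE_API_KEY"),
                "api_base": os.getenv("AZURE_API_BASE"),
                "api_version": os.getenv("AZURE_API_VERSION"),
            },
        },
        {
            "model_name": "chat-default",  # same alias, different provider
            "litellm_params": {
                "model": "gpt-3.5-turbo",
                "api_key": os.getenv("OPENAI_API_KEY"),
            },
        },
    ]


def ask(prompt):
    """Send one prompt through the router; needs `pip install litellm` and valid keys."""
    # Imported lazily so build_model_list() can be inspected without litellm installed.
    from litellm import Router

    router = Router(model_list=build_model_list())
    response = router.completion(
        model="chat-default",
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content
```

Because both entries share the same `model_name`, callers ask for the alias and leave the choice of deployment to the router.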

Use Cases and Applications of LiteLLM

LiteLLM can be put to use in several ways, including:

- Software development: Add completion and embedding calls for models such as GPT-3.5 Turbo and Cohere to your projects, with minimal implementation steps and a few environment variables.

- AI research: LiteLLM's GitHub integration supports collaborative coding workflows and version control, which helps keep research moving.

- Data science: Install LiteLLM via pip and set up your API keys once to get straightforward access to the completion and embedding functions.

LiteLLM suits software developers, AI researchers, and data scientists who want to streamline their workflows and scale their projects with the power of language models.

How to Use LiteLLM

LiteLLM is simple to get started with:

- Start a free trial of the LiteLLM cloud service, or deploy the open-source version from GitHub.

- Install LiteLLM with pip, import the completion function from the ready-made implementation code, and set environment variables for your API keys.

- Use LiteLLM's GitHub integrations to speed up your coding process and version control.

- Track spend as often as you find convenient, and use load balancing to distribute requests effectively across the different platforms.
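The install-and-call steps above can be sketched as follows. This assumes LiteLLM has been installed with `pip install litellm` and that real keys replace the placeholders. The helper names `build_messages`, `ask`, and `demo` are made up for this example, and the Cohere model name `command-nightly` is an assumption that may need updating to a currently available model.

```python
import os


def build_messages(prompt):
    """Wrap a prompt in the OpenAI-style chat message list that LiteLLM accepts."""
    return [{"role": "user", "content": prompt}]


def ask(model, prompt):
    """Call a model through LiteLLM and return the reply text."""
    # Lazy import: only needed when a request is actually sent.
    from litellm import completion

    response = completion(model=model, messages=build_messages(prompt))
    return response.choices[0].message.content


def demo():
    """Run the same prompt against two providers (requires real API keys)."""
    # Placeholder keys; setdefault keeps any real value already in the environment.
    os.environ.setdefault("OPENAI_API_KEY", "your-openai-key")
    os.environ.setdefault("COHERE_API_KEY", "your-cohere-key")

    # The call shape is identical for both providers; only the model name changes.
    print(ask("gpt-3.5-turbo", "Hello, how are you?"))
    print(ask("command-nightly", "Hello, how are you?"))
```

Calling `demo()` with valid keys sends the same OpenAI-format message list to both providers, which is the practical benefit of the shared format.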

How LiteLLM Works

LiteLLM manages large language models by combining a few mechanisms. Load balancing across different compute resources lets the platform serve requests efficiently and reliably. Fallbacks keep the service running, and spend tracking helps users control their budgets. Support for the OpenAI format makes it easy to integrate and run multiple LLMs.
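To make these ideas concrete, here is a toy sketch of round-robin load balancing, fallback on failure, and spend tracking, using only the Python standard library. It is not LiteLLM's implementation: the `ToyRouter` class, the `Deployment` fields, the word-count "tokens", and the per-token prices are all invented for illustration.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Callable, Dict, List


@dataclass
class Deployment:
    name: str
    call: Callable[[str], str]  # takes a prompt, returns a reply (may raise)
    cost_per_token: float       # made-up flat price, for illustration only


class ToyRouter:
    def __init__(self, deployments: List[Deployment]):
        self.deployments = deployments
        self._order = cycle(range(len(deployments)))  # round-robin starting index
        self.spend: Dict[str, float] = {d.name: 0.0 for d in deployments}

    def complete(self, prompt: str) -> str:
        start = next(self._order)
        n = len(self.deployments)
        # Try the round-robin pick first, then fall back to the others in order.
        for offset in range(n):
            dep = self.deployments[(start + offset) % n]
            try:
                reply = dep.call(prompt)
            except Exception:
                continue  # this deployment failed; try the next one
            # Crude token count: whitespace-separated words in prompt and reply.
            tokens = len(prompt.split()) + len(reply.split())
            self.spend[dep.name] += tokens * dep.cost_per_token
            return reply
        raise RuntimeError("all deployments failed")


def flaky(prompt: str) -> str:
    raise ConnectionError("simulated outage")


def healthy(prompt: str) -> str:
    return "echo: " + prompt


router = ToyRouter([
    Deployment("primary", flaky, cost_per_token=0.002),
    Deployment("backup", healthy, cost_per_token=0.001),
])
reply = router.complete("hello there")  # primary fails, backup answers
```

Here the first deployment always fails, so the request falls through to the backup, and only the backup accrues spend in `router.spend`.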

Pros and Cons of LiteLLM

Like any other technology, LiteLLM has its pros and potential cons:

Pros

- Effective load balancing and fallback solutions

- Comprehensive spend tracking

- Strong community support and extensive documentation

- Support for the standard OpenAI format

- Open-source version and free trial available

Cons

- Setup and integration may require some technical knowledge

- Relies on the community for updates and some fixes

User feedback tends to highlight the platform’s efficiency and reliability, while some comments mention an initial learning curve during setup.

Conclusion Relating to LiteLLM

In short, LiteLLM is a capable platform for managing large language models, with load balancing, fallbacks, and spend tracking built in. Strong community support and compatibility with the OpenAI format add to its appeal. For software developers, AI researchers, and data scientists, it offers a way to make workflows more effective and projects more efficient. Ongoing contributions from the community should help keep LiteLLM at the forefront of LLM management.

LiteLLM FAQ

- What does LiteLLM do?

It provides load balancing, fallbacks, and spend tracking across more than 100 large language models.

- Does LiteLLM have a free trial or open-source solution?

Yes. LiteLLM offers a free trial of its cloud service, and the open-source version can be deployed for free.

- How reliable is LiteLLM in terms of uptime?

It is reported to be very reliable, with 99% uptime.

- How can I get started with LiteLLM, or where do I learn more about its community?

You can request a demo from the LiteLLM team or download it from GitHub. With over 8.4k stars on GitHub, it is a heavily community-driven project.

- How many requests has LiteLLM served?

LiteLLM has so far served more than 20 million requests, which indicates its capacity and robustness in LLM operations.